For decades, Java’s concurrency model has been a cornerstone of its power, enabling developers to build robust, scalable, and high-performance applications. However, the traditional thread-per-request model, built on heavyweight operating system (OS) threads, struggles with modern, massively concurrent workloads. Creating and managing thousands of OS threads is expensive enough that it often leads to resource exhaustion, and the usual workaround has been a more complex reactive programming model. Recent changes to Java concurrency remove much of that trade-off.

Driven by Project Loom, recent Java releases, particularly the Long-Term Support (LTS) release Java 21, have introduced a set of features that change how we write concurrent code. This article covers three of them: Virtual Threads (final in Java 21), and Structured Concurrency and Scoped Values (both preview features in Java 21, so they require `--enable-preview`). We will explore their core concepts, provide practical code examples, and discuss their impact on the Java ecosystem, from the core JVM to frameworks like Spring Boot.

The Rise of Virtual Threads: Lightweight Concurrency Unleashed

The most significant development in modern Java concurrency is the introduction of virtual threads. They represent a fundamental shift from the traditional model, offering a path to massive scalability with minimal changes to existing code styles.

What are Virtual Threads?

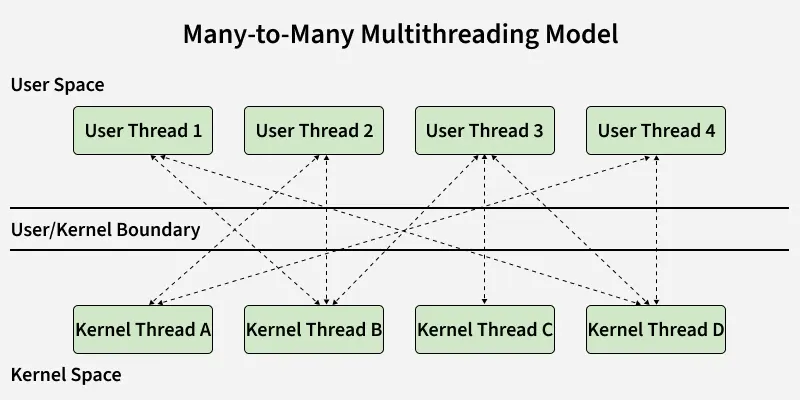

A virtual thread is a lightweight thread managed by the Java Virtual Machine (JVM) rather than the underlying operating system. While a traditional platform thread is a thin wrapper around an OS thread, a single platform thread (called a carrier thread) can run many different virtual threads over its lifetime. When a virtual thread executes a blocking I/O operation (like a network call or database query), the JVM automatically unmounts it from its carrier thread and mounts a different, runnable virtual thread. The carrier thread is therefore not tied up by the wait and stays free to execute other tasks.

This mechanism is the key to their efficiency. You can have millions of virtual threads in a single application without exhausting system resources, because each one consumes very little memory and scheduling happens inside the JVM rather than in the OS. It directly addresses the scalability limits of the one-OS-thread-per-request model.
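As a minimal sketch of the difference between the two kinds of thread, the following starts a single virtual thread with the `Thread.ofVirtual()` builder (final since Java 21) and checks `Thread.isVirtual()`. The class name, method name, and thread name are hypothetical and chosen only for illustration.

```java
class VirtualThreadBasics {

    // Starts one virtual thread, waits for it, and reports whether the
    // code really ran on a virtual thread.
    static boolean startedThreadIsVirtual() {
        boolean[] wasVirtual = new boolean[1];
        Thread thread = Thread.ofVirtual()
                .name("demo-virtual-thread") // hypothetical name, illustration only
                .start(() -> wasVirtual[0] = Thread.currentThread().isVirtual());
        try {
            thread.join(); // join() makes the write above visible to this thread
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return wasVirtual[0];
    }

    public static void main(String[] args) {
        System.out.println("Started thread is virtual: " + startedThreadIsVirtual());
        // The main thread is an ordinary platform thread.
        System.out.println("Main thread is virtual: " + Thread.currentThread().isVirtual());
    }
}
```

Apart from how it is created, the virtual thread is used like any other `Thread`, which is why existing blocking code usually needs few changes.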

Creating and Using Virtual Threads

The API for working with virtual threads is intentionally familiar. The recommended way to manage them is through an `ExecutorService`, which ensures clean lifecycle management. Instead of a traditional thread pool, you use `Executors.newVirtualThreadPerTaskExecutor()`. This factory method creates a new virtual thread for each submitted task, so no pooling is needed.

Consider a service that needs to handle many concurrent I/O-bound requests. A virtual-thread-per-task executor lets it scale without a large pool of OS threads.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.stream.IntStream;

public class VirtualThreadsDemo {

    public static void main(String[] args) {
        // Use a try-with-resources block to ensure the executor is closed
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            IntStream.range(0, 10_000).forEach(i -> {
                executor.submit(() -> {
                    // Simulate a blocking I/O operation, like a database call or API request
                    try {
                        Thread.sleep(Duration.ofSeconds(1));
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                    System.out.println("Task " + i + " completed on thread: " + Thread.currentThread());
                });
            });
        } // executor.close() is called automatically and waits for tasks to finish
    }
}
```

In this example, we submit 10,000 tasks that each block for one second. With one platform thread per task, this would mean 10,000 OS threads, each reserving its own stack, which is costly and can run into OS or memory limits. With virtual threads, the same code runs on a small number of carrier platform threads, a large gain in throughput for I/O-bound workloads.

Taming Concurrency with Structured Concurrency

While virtual threads solve the “how many” problem of concurrency, structured concurrency addresses the “how to manage” problem. It introduces a new model for managing the lifecycle and reliability of concurrent operations.

The Problem with Unstructured Concurrency

For years, Java developers have relied on tools like `Future` and `CompletableFuture`. While powerful, they lead to what is now called “unstructured concurrency.” When you fire off multiple asynchronous tasks, their relationship to the parent thread is detached. This makes error handling, cancellation, and reasoning about the program’s flow incredibly difficult. If a parent thread is cancelled, its child tasks may continue running in the background, leading to resource leaks. If one of several parallel tasks fails, coordinating the cancellation of the others requires complex and error-prone boilerplate code.
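The detachment described above can be reproduced with a plain `ExecutorService`. The sketch below is illustrative only: the class, method, and task names are hypothetical, and the 200 ms sleep stands in for a slow remote call. One task fails immediately, and nothing stops its sibling from running to completion.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicBoolean;

class UnstructuredLeakDemo {

    // Returns true if the slow sibling still ran to completion even though
    // the other task had already failed.
    static boolean siblingKeepsRunningAfterFailure() {
        AtomicBoolean siblingFinished = new AtomicBoolean(false);

        Callable<String> failingTask = () -> {
            throw new IllegalStateException("user service unavailable");
        };
        Callable<String> slowTask = () -> {
            Thread.sleep(200); // stand-in for a slow remote call
            siblingFinished.set(true);
            return "orders";
        };

        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            Future<String> user = executor.submit(failingTask);
            Future<String> orders = executor.submit(slowTask);
            try {
                user.get();
            } catch (ExecutionException e) {
                System.out.println("User task failed: " + e.getCause().getMessage());
                // Nothing cancels `orders` here; that would need an explicit
                // orders.cancel(true) on every failure path.
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        } // close() waits for the slow task, whose result is no longer wanted
        return siblingFinished.get();
    }

    public static void main(String[] args) {
        System.out.println("Sibling finished anyway: " + siblingKeepsRunningAfterFailure());
    }
}
```

The wasted work is small here, but with real network calls the same pattern keeps connections and threads busy for results that will be discarded.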

Introducing StructuredTaskScope

Structured Concurrency, a preview feature in Java 21 (it incubated in Java 19 and 20), treats a group of related concurrent tasks as a single unit of work. The core API is `StructuredTaskScope`. The lifecycle of the tasks is confined to a specific lexical scope (a `try-with-resources` block). When the block exits, all forked tasks are guaranteed to have completed, failed, or been cancelled. Because the API is still in preview, code that uses it must be compiled and run with `--enable-preview`, and details have changed between releases.

This model simplifies error handling considerably. For instance, the `ShutdownOnFailure` policy automatically cancels all sibling tasks if one of them fails, which removes much of the boilerplate described above.

Practical Example: Parallel Data Fetching

A classic use case is fetching data from multiple independent microservices to compose a single response. With `StructuredTaskScope`, this becomes clean and robust.

```java
// Written against the Java 21 preview API (JEP 453); compile and run with --enable-preview.
import java.time.Instant;
import java.util.concurrent.StructuredTaskScope;
import java.util.concurrent.StructuredTaskScope.Subtask;

public class StructuredConcurrencyDemo {

    // A record to hold the combined result
    record UserData(String user, String orders) {}

    public static void main(String[] args) throws Exception {
        System.out.println("Fetching user data... " + Instant.now());
        UserData data = fetchUserData();
        System.out.println("Result: " + data + " at " + Instant.now());
    }

    static UserData fetchUserData() throws Exception {
        // Create a scope that shuts down if any subtask fails
        try (var scope = new StructuredTaskScope.ShutdownOnFailure()) {
            // Fork two concurrent tasks. Each runs in its own new virtual thread.
            // In Java 21, fork() returns a Subtask (earlier incubator builds returned a Future).
            Subtask<String> user = scope.fork(StructuredConcurrencyDemo::fetchUser);
            Subtask<String> orders = scope.fork(StructuredConcurrencyDemo::fetchOrders);

            // Wait for both tasks to complete or for one to fail
            scope.join();
            scope.throwIfFailed(); // Throws an exception if any task failed

            // If we reach here, both tasks succeeded. Combine the results.
            return new UserData(user.get(), orders.get());
        }
    }

    static String fetchUser() throws InterruptedException {
        System.out.println("Fetching user details...");
        Thread.sleep(2000); // Simulate network latency
        System.out.println("User details fetched.");
        // Uncomment the line below to simulate a failure
        // throw new RuntimeException("User service unavailable");
        return "User(id=123, name='Jane Doe')";
    }

    static String fetchOrders() throws InterruptedException {
        System.out.println("Fetching user orders...");
        Thread.sleep(3000); // Simulate network latency
        System.out.println("User orders fetched.");
        return "[Order(id=A1), Order(id=B2)]";
    }
}
```

In this example, `fetchUser` and `fetchOrders` run in parallel. If `fetchUser` were to fail, the `ShutdownOnFailure` policy would immediately cancel the `fetchOrders` task, and `scope.throwIfFailed()` would propagate the error. The code is simple to read, and its behavior is predictable and safe by default.

Scoped Values: A Modern Alternative to Thread-Local Variables

The final piece of the modern concurrency puzzle is `ScopedValue`. It offers a robust and immutable way to share data within a thread and its children, designed specifically to work seamlessly with virtual threads.

The Limitations of ThreadLocal

`ThreadLocal` variables have long been used to pass contextual data (like user credentials or transaction IDs) down a call stack without polluting method signatures. However, they have significant drawbacks. They are mutable, so any code that can read one can also overwrite it, which can lead to bugs. Their lifetime is also unbounded: a value stays reachable until `remove()` is called or the thread terminates. With virtual threads this can cause unexpected memory retention if not managed with care, because there may be a very large number of threads, and a virtual thread might be “parked” for a long time while still holding references via its thread-locals.
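A small sketch of how the mutability and unbounded lifetime of `ThreadLocal` can surface in practice. It assumes a single pooled thread that handles two "requests" in turn; `CURRENT_USER` and the method names are hypothetical. The first request sets a value and forgets to call `remove()`, so the second request sees it.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class ThreadLocalPitfallDemo {

    // Hypothetical context holder, similar to what a framework might use.
    private static final ThreadLocal<String> CURRENT_USER = new ThreadLocal<>();

    // Runs two "requests" back to back on the same pooled thread. The second
    // request never sets a user, yet it observes the value left by the first.
    static String userSeenBySecondRequest() {
        Runnable firstRequest = () -> CURRENT_USER.set("user-alice"); // forgets remove()
        Callable<String> secondRequest = CURRENT_USER::get;

        try (ExecutorService pool = Executors.newSingleThreadExecutor()) {
            pool.submit(firstRequest).get();
            return pool.submit(secondRequest).get();
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // Prints "Second request sees: user-alice"
        System.out.println("Second request sees: " + userSeenBySecondRequest());
    }
}
```

The usual fix is a `try`/`finally` that calls `remove()`, which works but depends on every caller remembering it.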

How Scoped Values Work

A `ScopedValue` (a preview API in Java 21) is an immutable variable that is available for a bounded period of execution. You bind a value to a `ScopedValue` for the duration of a `run()` or `call()` method. Any code executed within that scope can read the value, including child threads forked through a `StructuredTaskScope`. Threads started any other way, such as through a plain `ExecutorService`, do not inherit the binding. Once the scope is exited, the binding is gone. This makes them inherently safer and more predictable than `ThreadLocal` variables.

Code in Action: Passing User Context

Imagine a web request handler that needs to make the current user’s information available to downstream business logic and data access layers.

```java
// Written against the Java 21 preview APIs (JEP 446 and JEP 453); compile and run with --enable-preview.
import java.util.concurrent.StructuredTaskScope;

public class ScopedValuesDemo {

    // Define a ScopedValue to hold the authenticated user's name
    private static final ScopedValue<String> LOGGED_IN_USER = ScopedValue.newInstance();

    public static void main(String[] args) {
        // Simulate handling two different requests for two different users
        handleRequest("user-alice");
        handleRequest("user-bob");
    }

    private static void handleRequest(String username) {
        // Bind the username to the ScopedValue for the duration of the run() call
        ScopedValue.where(LOGGED_IN_USER, username)
                .run(() -> {
                    System.out.println("Request handler started for: " + LOGGED_IN_USER.get());
                    // Call business logic which might fork other tasks
                    processBusinessLogic();
                });
        // Outside the run() block, the value is no longer available
        // System.out.println("Outside scope: " + LOGGED_IN_USER.isBound()); // false
    }

    private static void processBusinessLogic() {
        System.out.println("Business logic running for: " + LOGGED_IN_USER.get());
        // Bindings are inherited only by threads forked through a StructuredTaskScope.
        // A thread started by a plain executor would not see the binding, and
        // LOGGED_IN_USER.get() would throw NoSuchElementException there.
        try (var scope = new StructuredTaskScope<Void>()) {
            scope.fork(() -> {
                performDatabaseQuery();
                return null;
            });
            scope.join(); // Wait for the forked subtask to finish
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    private static void performDatabaseQuery() {
        // The downstream method can access the user context without it being passed as a parameter
        System.out.println("Database query for user: " + LOGGED_IN_USER.get());
    }
}
```

This example clearly shows how `ScopedValue` provides a clean, immutable, and structured way to pass data. It eliminates “parameter drilling” and avoids the pitfalls of `ThreadLocal`, making it a perfect fit for modern, concurrent Java applications.

Best Practices and Ecosystem Impact

Adopting these new features requires understanding their intended use cases and potential pitfalls.

When to Use Virtual Threads

Virtual threads excel in I/O-bound or “blocking” scenarios. This includes:

- Handling incoming requests in web servers and microservices.

- Calling downstream APIs or other microservices.

- Querying databases or message queues.

- Reading from or writing to files or network sockets.

For CPU-bound tasks (e.g., complex calculations, data transformations, image processing), you should continue to use a small, fixed pool of traditional platform threads to avoid overwhelming the CPU cores.
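For the CPU-bound case, a sketch of the fixed-pool approach might look like the following. The workload (a sum of squares) and all names are hypothetical, and sizing the pool to `availableProcessors()` is a common starting point rather than a rule.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class CpuBoundPoolDemo {

    // Hypothetical CPU-bound work: sum of i*i for i in [from, to).
    static long sumOfSquares(long from, long to) {
        long sum = 0;
        for (long i = from; i < to; i++) {
            sum += i * i;
        }
        return sum;
    }

    // Splits the range [0, n) into one chunk per core and runs the chunks on a
    // fixed pool of platform threads.
    static long parallelSumOfSquares(long n) {
        int workers = Runtime.getRuntime().availableProcessors();
        long chunk = (n + workers - 1) / workers; // ceiling division
        try (ExecutorService pool = Executors.newFixedThreadPool(workers)) {
            List<Future<Long>> parts = new ArrayList<>();
            for (int w = 0; w < workers; w++) {
                long from = Math.min(n, w * chunk);
                long to = Math.min(n, from + chunk);
                Callable<Long> task = () -> sumOfSquares(from, to);
                parts.add(pool.submit(task));
            }
            long total = 0;
            for (Future<Long> part : parts) {
                total += part.get();
            }
            return total;
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("Sum of squares below 1000: " + parallelSumOfSquares(1000));
    }
}
```

Adding more threads than cores would not speed this up, because the work never blocks, so the cheapness of virtual threads brings no benefit here.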

Avoiding Common Pitfalls

A key concept to understand is “pinning.” In Java 21, a virtual thread is pinned to its carrier platform thread when it executes code inside a `synchronized` block or a native method (`JNI`). While pinned, the carrier thread cannot be used to run other virtual threads, which can degrade performance and lead to thread starvation in the carrier pool. JDK 24 removed the `synchronized` case (JEP 491), so the first recommendation below matters most on Java 21 through 23.

- Best Practice: Prefer `java.util.concurrent.locks.ReentrantLock` over `synchronized` blocks in high-concurrency code paths that might be executed on virtual threads.

- Best Practice: Do not pool virtual threads. They are cheap to create. Use `Executors.newVirtualThreadPerTaskExecutor()` to create a new one for each task.
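The two practices above can be combined in a short sketch: a counter guarded by a `ReentrantLock` rather than `synchronized`, updated from one new virtual thread per task. The class and method names are hypothetical.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.locks.ReentrantLock;

class LockInsteadOfSynchronizedDemo {

    private final ReentrantLock lock = new ReentrantLock();
    private int counter = 0;

    // Guarded by a ReentrantLock instead of a synchronized block, so a virtual
    // thread waiting for the lock can be unmounted from its carrier.
    void increment() {
        lock.lock();
        try {
            counter++;
        } finally {
            lock.unlock(); // always release, even if the body throws
        }
    }

    int current() {
        lock.lock();
        try {
            return counter;
        } finally {
            lock.unlock();
        }
    }

    // One new virtual thread per task, with no pooling.
    static int countWithVirtualThreads(int tasks) {
        var demo = new LockInsteadOfSynchronizedDemo();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.execute(demo::increment);
            }
        } // close() waits for all tasks to finish
        return demo.current();
    }

    public static void main(String[] args) {
        System.out.println("Final count: " + countWithVirtualThreads(10_000));
    }
}
```

The `lock()` / `try` / `finally` shape is more verbose than `synchronized`, so it is worth reserving for code paths that are hot and likely to block while holding the lock.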

Impact on the Java Ecosystem

The introduction of Project Loom features is sending ripples across the Java ecosystem.

- Spring Boot: Spring Boot 3.2 and later offer first-class support for virtual threads when running on Java 21 or newer. You can enable them with a simple configuration property (`spring.threads.virtual.enabled=true`), and the framework will automatically use them for handling web requests.

- Reactive Java: For years, reactive frameworks like Project Reactor and RxJava were the primary solution for high-throughput, non-blocking applications. They remain powerful and relevant, but virtual threads now offer an alternative. Developers can write simple, imperative, blocking-style code that is easier to write, read, and debug, while achieving similar performance characteristics to reactive code for many I/O-bound use cases.

Conclusion: A New Chapter for Java

The concurrency features delivered by Project Loom mark a paradigm shift. Virtual threads were finalized in Java 21, while structured concurrency and scoped values shipped there as preview APIs. They are not just incremental improvements; they are a fundamental rethinking of how to build scalable and maintainable applications on the JVM.

Virtual Threads democratize high-throughput concurrency, making it accessible through simple, familiar APIs. Structured Concurrency brings order and reliability to the chaos of parallel tasks, eliminating entire classes of bugs related to resource leaks and error handling. Finally, Scoped Values provide a safe, modern mechanism for sharing contextual data.

For Java developers, this is an exciting time. The barrier to writing highly concurrent applications has been significantly lowered. The next step is to start experimenting with these features in your new projects, refactor performance-critical sections of existing applications, and embrace the simpler, more efficient, and more robust style of programming that this new era of Java concurrency enables.