For decades, Java has been a cornerstone of enterprise software development, renowned for its stability, platform independence, and vast ecosystem. In the age of the cloud, its relevance has only intensified. However, the paradigm has shifted. The conversation is no longer just about building robust applications; it’s about building highly performant, resource-efficient, and cost-effective systems that can scale dynamically in cloud environments. The latest Java news isn’t just about new language features; it’s about a fundamental evolution in how Java applications are built, deployed, and managed to thrive in the cloud.

This evolution is driven by a confluence of factors: sustained performance engineering within the JVM itself, new concurrency primitives such as the virtual threads delivered by Project Loom, and the rise of intelligent cloud orchestration platforms. Developers now have tools and techniques that directly address the dual challenge of maximizing application performance while keeping cloud bills under control. This article covers the core strategies, modern practices, and tools that define high-performance, cost-conscious Java development today, with practical code examples and actionable insights for developers and architects alike.

The Foundation: Modern JVMs and Revolutionary Concurrency

The bedrock of any high-performance Java application is the Java Virtual Machine (JVM). The difference between running on an older version like Java 8 versus a modern LTS release like Java 21 is not just about new syntax; it’s about years of deep, underlying performance engineering. Staying current with Java SE news is crucial for leveraging these foundational improvements.

The Power of Modern JVMs and Garbage Collectors

Modern JVMs, available through OpenJDK distributions such as Azul Zulu, Amazon Corretto, and Eclipse Temurin (from the Adoptium project), ship with highly advanced garbage collectors (GCs). G1 (the Garbage-First collector) has been the default since Java 9 and is a solid general-purpose choice, but specialized GCs offer significant benefits for specific workloads:

- Z Garbage Collector (ZGC): A scalable, low-latency garbage collector designed for heaps ranging from a few gigabytes to multiple terabytes, with pause times that stay in the low-millisecond range or below (sub-millisecond on recent JDKs). This is ideal for services with strict latency requirements.

- Shenandoah: Another ultra-low-pause-time GC that performs most of its work concurrently with the running Java threads. It’s an excellent choice for services where responsiveness is paramount.

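These advancements mean less time spent in “stop-the-world” pauses, which directly improves tail latency and user experience. The trade-off is that concurrent collectors do part of their work on CPU shared with your application threads, so measure throughput for your own workload before switching.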
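To confirm which collector a service is actually running with, the JVM's management API can be queried at startup. The following is a minimal sketch rather than a tuning recipe; the class name `GcReport` is hypothetical. Collector selection itself happens through launch flags such as `-XX:+UseZGC` or `-XX:+UseShenandoahGC` (the latter only on JDK builds that include Shenandoah).

```java
import java.lang.management.ManagementFactory;
import java.util.List;

// Hypothetical helper: lists the garbage collector beans of the running JVM.
final class GcReport {

    /** Returns one line per collector bean: name, collection count, accumulated time. */
    static List<String> describeCollectors() {
        return ManagementFactory.getGarbageCollectorMXBeans().stream()
                .map(gc -> "%s: collections=%d, totalTimeMs=%d".formatted(
                        gc.getName(), gc.getCollectionCount(), gc.getCollectionTime()))
                .toList();
    }

    public static void main(String[] args) {
        // With the default collector the names start with "G1"; with ZGC or
        // Shenandoah the bean names change accordingly.
        describeCollectors().forEach(System.out::println);
    }
}
```

Logging this once at startup makes it easy to spot a container image that silently fell back to a different collector than the one you intended.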

A New Era of Concurrency with Project Loom

Perhaps the most significant recent development in Java concurrency news is the official arrival of Virtual Threads with Project Loom in Java 21. For years, Java’s traditional thread-per-request model has been a bottleneck for high-throughput I/O-bound applications. Each request would block a precious, heavyweight OS thread while waiting for a database or network call. Virtual Threads solve this elegantly.

Virtual threads are lightweight threads managed by the JVM, not the OS. Millions of them can be created, allowing for a simple, synchronous coding style while achieving the scalability of asynchronous, non-blocking code. This dramatically improves resource utilization and application throughput.

Consider a simple web server task. The old way involved a limited thread pool:

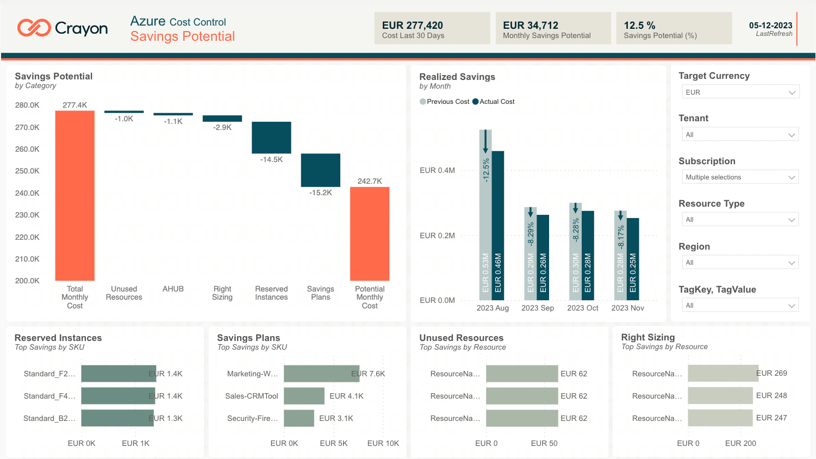

Cloud cost savings graph – Cloud Cost Control – Crayon

```java
import java.time.Duration;
import java.util.concurrent.Executors;

// Traditional approach: a thread pool with a fixed number of OS threads.
// Can become a bottleneck under heavy I/O load.
public class TraditionalConcurrency {
    public static void main(String[] args) {
        // A pool of 100 OS threads. If 101 tasks arrive, one has to wait.
        // ExecutorService is AutoCloseable since Java 19, so try-with-resources works.
        try (var executor = Executors.newFixedThreadPool(100)) {
            for (int i = 0; i < 1000; i++) {
                executor.submit(() -> {
                    // Simulate a blocking I/O operation
                    try {
                        Thread.sleep(Duration.ofSeconds(1));
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                    System.out.println("Task completed by: " + Thread.currentThread());
                });
            }
        }
    }
}
```

With Java 21’s virtual threads, the code remains simple, but the scalability is immense:

```java
import java.time.Duration;
import java.util.concurrent.Executors;

// Modern approach with Virtual Threads (Project Loom)
// Can handle thousands of concurrent tasks with ease.
public class VirtualThreadConcurrency {
    public static void main(String[] args) {
        // An executor that creates a new virtual thread for each task.
        // The JVM handles the mapping to a small number of OS threads.
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < 1000; i++) {
                executor.submit(() -> {
                    // Simulate a blocking I/O operation
                    try {
                        Thread.sleep(Duration.ofSeconds(1));
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                    // This will print something like
                    // "VirtualThread[#21]/runnable@ForkJoinPool-1-worker-1"
                    System.out.println("Task completed by: " + Thread.currentThread());
                });
            }
        } // The executor waits for all tasks to complete upon closing.
    }
}
```

This simple change allows the application to handle thousands of concurrent blocking operations without exhausting OS threads. It is a major step forward for Java performance and a direct path to reducing the compute resources required in the cloud.
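To make the difference measurable, the two executors can be timed against the same batch of blocking tasks. The sketch below assumes JDK 21 or later; the class name `BlockingTaskBenchmark`, the pool size of 10, the 200 tasks, and the 50 ms sleep are all arbitrary illustration values, and this is a rough wall-clock comparison rather than a rigorous benchmark.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical helper: times a batch of sleeping tasks on a given executor.
final class BlockingTaskBenchmark {

    /** Submits `tasks` sleeping tasks and returns the elapsed wall-clock time in ms. */
    static long runAll(ExecutorService executor, int tasks, Duration sleep) {
        long start = System.nanoTime();
        // close() (called by try-with-resources) waits for all submitted tasks.
        try (executor) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    try {
                        Thread.sleep(sleep); // stands in for a blocking I/O call
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
        }
        return (System.nanoTime() - start) / 1_000_000;
    }

    public static void main(String[] args) {
        int tasks = 200;
        Duration sleep = Duration.ofMillis(50);
        long fixedMs = runAll(Executors.newFixedThreadPool(10), tasks, sleep);
        long virtualMs = runAll(Executors.newVirtualThreadPerTaskExecutor(), tasks, sleep);
        System.out.println("fixed pool (10 threads): " + fixedMs + " ms");
        System.out.println("virtual threads:         " + virtualMs + " ms");
    }
}
```

With these numbers the fixed pool needs at least 20 rounds of 50 ms, while the virtual-thread executor runs all sleeps concurrently, so the second figure should be much smaller. The gap only appears for blocking work; CPU-bound tasks gain nothing from virtual threads.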

Architecting for Efficiency: Reactive Streams and Right-Sizing

Beyond the JVM, application architecture plays a pivotal role in cloud efficiency. Modern frameworks and a data-driven approach to resource allocation are essential for building lean, performant systems.

Reactive Programming for Resource Optimization

While Project Loom simplifies concurrency, the reactive programming paradigm remains a powerful tool, especially for streaming, backpressure-sensitive, or complex event-driven systems. Frameworks like Spring WebFlux, built on Project Reactor, let developers build non-blocking applications that handle data as asynchronous streams.

As long as handlers avoid blocking calls, a small number of event-loop threads can serve many concurrent connections, so a reactive system can often handle more I/O-bound load on the same hardware than a traditional blocking one. This is a recurring topic in Spring news and Jakarta EE news, as both ecosystems adopt reactive principles.

Here’s a simple reactive endpoint using Spring Boot with WebFlux. It streams a sequence of events to the client without holding a thread for the entire duration.

```java
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.annotation.Bean;
import org.springframework.http.MediaType;
import org.springframework.web.reactive.function.server.RouterFunction;
import org.springframework.web.reactive.function.server.RouterFunctions;
import org.springframework.web.reactive.function.server.ServerResponse;
import reactor.core.publisher.Flux;

import java.time.Duration;

import static org.springframework.web.reactive.function.server.RequestPredicates.GET;

@SpringBootApplication
public class ReactiveApplication {

    public static void main(String[] args) {
        SpringApplication.run(ReactiveApplication.class, args);
    }

    // A functional endpoint that streams data
    @Bean
    public RouterFunction<ServerResponse> route() {
        return RouterFunctions.route(GET("/event-stream"), request ->
            ServerResponse.ok()
                .contentType(MediaType.TEXT_EVENT_STREAM)
                .body(
                    // Flux is a reactive stream of 0..N items
                    Flux.interval(Duration.ofSeconds(1))
                        .map(sequence -> "Event #" + sequence),
                    String.class
                )
        );
    }
}
```

This service can serve a large number of concurrent clients because it doesn’t dedicate a thread to each one. This efficiency translates directly to lower cloud costs, as fewer application instances are needed to handle the same traffic.
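The Reactive Streams contract that Project Reactor builds on has a JDK counterpart in `java.util.concurrent.Flow`, which makes it possible to illustrate demand-driven delivery (backpressure) without any framework. The sketch below is an illustration of the principle, not a replacement for WebFlux; `BackpressureDemo` and `OneAtATimeSubscriber` are hypothetical names.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;
import java.util.concurrent.TimeUnit;

final class BackpressureDemo {

    /** Subscriber that requests one item at a time, so it is never flooded. */
    static final class OneAtATimeSubscriber implements Flow.Subscriber<String> {
        final List<String> received = new CopyOnWriteArrayList<>();
        final CountDownLatch done = new CountDownLatch(1);
        private Flow.Subscription subscription;

        @Override public void onSubscribe(Flow.Subscription subscription) {
            this.subscription = subscription;
            subscription.request(1); // signal demand for the first item
        }
        @Override public void onNext(String item) {
            received.add(item);
            subscription.request(1); // ask for the next item only after handling this one
        }
        @Override public void onError(Throwable error) { done.countDown(); }
        @Override public void onComplete() { done.countDown(); }
    }

    /** Publishes `count` events and returns them in the order the subscriber saw them. */
    static List<String> publishEvents(int count) {
        var subscriber = new OneAtATimeSubscriber();
        // SubmissionPublisher delivers items asynchronously on a separate executor.
        try (var publisher = new SubmissionPublisher<String>()) {
            publisher.subscribe(subscriber);
            for (int i = 0; i < count; i++) {
                publisher.submit("Event #" + i); // blocks only if the subscriber's buffer is full
            }
        } // close() signals onComplete after the buffered items are delivered
        try {
            if (!subscriber.done.await(5, TimeUnit.SECONDS)) {
                throw new IllegalStateException("subscriber did not complete in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return subscriber.received;
    }

    public static void main(String[] args) {
        publishEvents(5).forEach(System.out::println);
    }
}
```

The key design point is that the subscriber, not the producer, controls the pace through `request(n)`; Reactor's `Flux` applies the same contract behind a richer operator API.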

The Critical Role of Profiling and Right-Sizing

You can’t optimize what you can’t measure. Over-provisioning resources is one of the biggest sources of wasted cloud spend. Java Flight Recorder (JFR), which is built into OpenJDK, and JDK Mission Control (JMC), a separately distributed tool for analyzing JFR recordings, are indispensable here. They allow developers to profile applications under load to get a precise understanding of their CPU, memory, and I/O characteristics. This data is crucial for “right-sizing” Docker containers and Kubernetes pods, ensuring you pay only for the resources your application truly needs.
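JFR is usually started from outside the application, for example with the `-XX:StartFlightRecording` launch flag or `jcmd <pid> JFR.start`, but it can also be driven from code through the `jdk.jfr` API. The following is a minimal sketch under stated assumptions: `JfrCpuProbe`, `CpuSummary`, and `busyWork` are hypothetical names, the 100 ms sampling period is arbitrary, and the synthetic workload stands in for a real load test.

```java
import jdk.jfr.Recording;
import jdk.jfr.consumer.RecordedEvent;
import jdk.jfr.consumer.RecordingFile;

import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.Duration;

final class JfrCpuProbe {

    /** Number of jdk.CPULoad samples seen and the highest JVM CPU load among them. */
    record CpuSummary(int samples, double peakJvmCpu) {}

    /** Records jdk.CPULoad events while `workload` runs, dumps them, and summarizes. */
    static CpuSummary profile(Runnable workload, Path dumpFile) {
        try (Recording recording = new Recording()) {
            recording.enable("jdk.CPULoad").withPeriod(Duration.ofMillis(100));
            recording.start();
            workload.run();
            recording.stop();
            recording.dump(dumpFile); // the same file can be opened in JMC
            int samples = 0;
            double peak = 0.0;
            for (RecordedEvent event : RecordingFile.readAllEvents(dumpFile)) {
                if (!event.getEventType().getName().equals("jdk.CPULoad")) continue;
                samples++;
                peak = Math.max(peak, event.getFloat("jvmUser") + event.getFloat("jvmSystem"));
            }
            return new CpuSummary(samples, peak);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Synthetic stand-in for a real workload: spins for roughly half a second. */
    static void busyWork() {
        long end = System.nanoTime() + Duration.ofMillis(500).toNanos();
        long x = 0;
        while (System.nanoTime() < end) x = x * 31 + 1;
    }

    public static void main(String[] args) throws IOException {
        Path dumpFile = Files.createTempFile("probe", ".jfr");
        System.out.println(profile(JfrCpuProbe::busyWork, dumpFile) + " -> " + dumpFile);
    }
}
```

Treat the resulting number as a rough indicator only; container CPU and memory requests should be based on recordings taken under representative load, not on a half-second synthetic loop.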

Advanced Techniques and Intelligent Tooling

The Java ecosystem is constantly innovating, incorporating intelligent tools and patterns that further enhance efficiency and developer productivity. From AI-powered libraries to better code patterns, these advancements are at the forefront of Java news.

The Rise of AI in the Java Ecosystem

The AI revolution has arrived in the Java world. Libraries like LangChain4j and the new Spring AI project make it incredibly easy to integrate Large Language Models (LLMs) into Java applications. This opens up new possibilities for building intelligent features, but it’s also relevant to optimization. For example, an AI-powered monitoring system could analyze application logs and metrics to predict performance bottlenecks or suggest cost-saving measures.

Here’s a conceptual example of using an interface-driven design with LangChain4j to create a simple chat service. This demonstrates a clean, testable approach to integrating external services.

```java
import dev.langchain4j.model.chat.ChatLanguageModel;
import dev.langchain4j.model.openai.OpenAiChatModel;

// Note: class and method names follow the LangChain4j release this example was
// written against; check them against the version you depend on.

// 1. Define a clear interface for our AI service
interface AiChatService {
    String getResponse(String userQuery);
}

// 2. Create a concrete implementation using a specific provider
class OpenAiChatServiceImpl implements AiChatService {
    private final ChatLanguageModel model;

    public OpenAiChatServiceImpl(String apiKey) {
        this.model = OpenAiChatModel.withApiKey(apiKey);
    }

    @Override
    public String getResponse(String userQuery) {
        return model.generate(userQuery);
    }
}

// 3. Use the service in your application
public class AiApplication {
    public static void main(String[] args) {
        // The API key comes from the environment rather than source code.
        // The variable name OPENAI_API_KEY is an assumption for this example.
        AiChatService chatService = new OpenAiChatServiceImpl(System.getenv("OPENAI_API_KEY"));
        String response = chatService.getResponse("What is the latest Java performance news?");
        System.out.println("AI Response: " + response);
    }
}
```

Avoiding Nulls with the Null Object Pattern

Performance isn’t just about speed; it’s also about resilience. `NullPointerException`s are a common source of runtime failures. The Null Object pattern is a classic design pattern that helps create more robust and cleaner code by eliminating null checks. This pattern involves creating a special “null” implementation of an interface that provides neutral, “do nothing” behavior.

This pattern reduces conditional complexity and makes the code easier to reason about. It does not make code faster by itself, but fewer failure paths lead to more predictable and more maintainable systems.

```java
// 1. The common interface
interface Logger {
    void log(String message);
}

// 2. The real implementation
class ConsoleLogger implements Logger {
    @Override
    public void log(String message) {
        System.out.println("LOG: " + message);
    }
}

// 3. The Null Object implementation
class NullLogger implements Logger {
    @Override
    public void log(String message) {
        // Does nothing. No output, no error.
    }
}

// 4. Client code without null checks
public class LoggingService {
    private final Logger logger;

    public LoggingService(boolean loggingEnabled) {
        if (loggingEnabled) {
            this.logger = new ConsoleLogger();
        } else {
            // Instead of setting logger to null, we use the NullLogger.
            this.logger = new NullLogger();
        }
    }

    public void doWork() {
        // No need for: if (logger != null) { ... }
        logger.log("Starting work...");
        // ... business logic ...
        logger.log("Work finished.");
    }
}
```

Best Practices for a Modern Java World

To tie everything together, here are some essential best practices for building and maintaining high-performance, cost-effective Java applications in the cloud:

- Stay Updated: Regularly update your Java version (sticking to LTS releases like 17 or 21 is a safe bet), frameworks (Spring Boot, Jakarta EE), and build tools (Maven, Gradle). These updates often contain critical performance improvements and security patches. Keeping an eye on Maven news and Gradle news is part of modern development hygiene.

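- Embrace Modern Concurrency: For I/O-bound workloads, default to `Executors.newVirtualThreadPerTaskExecutor()`. It provides massive scalability with minimal code changes. Do not pool virtual threads, and on JDK 21 watch for long blocking calls inside `synchronized` blocks, which can pin the underlying carrier thread.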

- Profile Before You Provision: Use JFR and other profiling tools to understand your application’s resource footprint. Use this data to configure your container resources accurately.

- Write Clean, Testable Code: Use modern language features like Records and Sealed Classes to write more concise and maintainable code. A robust test suite using tools like JUnit and Mockito is non-negotiable; it catches bugs early before they become costly production incidents.

- Leverage Intelligent Orchestration: Use cloud-native platforms and tools that can automatically scale your application based on real-time demand, often leveraging AI to optimize resource allocation and utilize cheaper computing options like spot instances.
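As an illustration of the Records and Sealed Classes recommendation above, the sketch below models a scaling decision as a closed set of record types and handles it with an exhaustive `switch` (JDK 21 or later). Everything here is hypothetical: the type names, the 80% and 30% CPU thresholds, and the one-instance step size are illustration values, not recommendations.

```java
final class ScalingPolicy {

    // A sealed interface restricts implementations to the records declared here.
    sealed interface Decision {}
    record ScaleUp(int addInstances) implements Decision {}
    record ScaleDown(int removeInstances) implements Decision {}
    record Hold() implements Decision {}

    /** Maps a CPU utilization in [0, 1] to a decision, using made-up thresholds. */
    static Decision decide(double cpuUtilization) {
        if (cpuUtilization > 0.80) return new ScaleUp(1);
        if (cpuUtilization < 0.30) return new ScaleDown(1);
        return new Hold();
    }

    /** The compiler checks that every permitted subtype is covered; no default branch needed. */
    static String describe(Decision decision) {
        return switch (decision) {
            case ScaleUp up -> "add " + up.addInstances() + " instance(s)";
            case ScaleDown down -> "remove " + down.removeInstances() + " instance(s)";
            case Hold hold -> "no change";
        };
    }

    public static void main(String[] args) {
        System.out.println(describe(decide(0.92))); // prints "add 1 instance(s)"
        System.out.println(describe(decide(0.55))); // prints "no change"
    }
}
```

Because the hierarchy is sealed, adding a fourth decision type later turns every non-exhaustive `switch` into a compile error, which is exactly the kind of bug a test suite would otherwise have to catch at runtime.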

Conclusion

The Java ecosystem is more vibrant and innovative than ever. The narrative has decisively shifted towards cloud-native excellence, focusing on the symbiotic relationship between performance and cost. By leveraging modern JVMs with their advanced garbage collectors, embracing the revolutionary simplicity and power of Project Loom’s virtual threads, architecting with reactive principles, and utilizing intelligent tooling, developers can build Java applications that are not only blazingly fast but also remarkably cost-efficient.

The key takeaway for every Java developer is to remain curious and proactive. The days of “set it and forget it” are over. Continuously exploring the latest Java performance news, profiling your applications, and adopting modern best practices are the new fundamentals. By doing so, you can ensure that your Java applications fully exploit the power and elasticity of the cloud, delivering maximum value to your business while keeping operational costs firmly in check.