For decades, the scalability of server-side Java applications was inextricably linked to the operating system’s thread management. The classic “thread-per-request” model, while simple to reason about, imposed a hard ceiling on concurrency because of the memory footprint and context-switching costs of OS threads. The landscape of Java concurrency shifted dramatically with Project Loom and the finalization of virtual threads in Java 21 (JEP 444). This is one of the most significant architectural changes in the history of Java SE, promising to make high-throughput concurrency widely accessible without the cognitive load of reactive programming.

In this guide, we will explore the mechanics of virtual threads, their integration into ecosystems like Spring Boot, and how they let you replace complex asynchronous code with readable, sequential logic. Whether you follow OpenJDK development closely or are just catching up on Java 21, understanding virtual threads is now a critical skill for modern backend development. We will look at practical implementation, performance implications, and how this technology affects everything from Jakarta EE to Spring AI.

The Paradigm Shift: From Platform to Virtual Threads

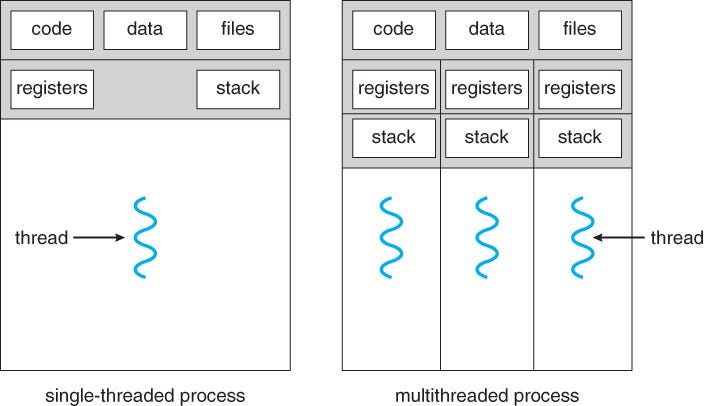

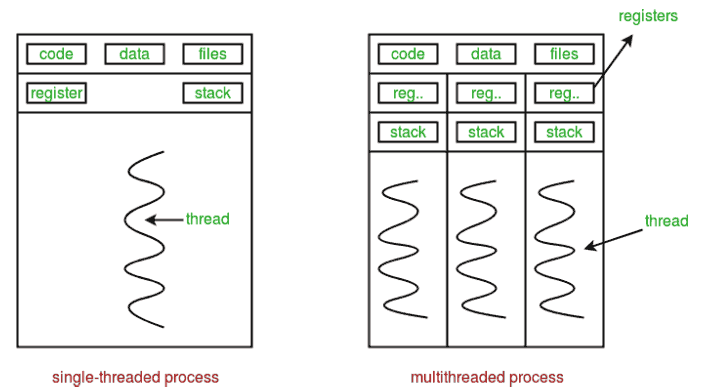

To understand the magnitude of this change, we must look at the limitations of the traditional model. Before Java 21, every Java thread was a thin wrapper around an OS thread; the JDK now calls these platform threads. They are expensive resources: on 64-bit Linux, each one reserves about 1 MB of stack memory outside the heap by default. Consequently, a JVM running thousands of threads could exhaust memory or hit OS thread limits, capping the number of concurrent connections long before the CPU was saturated.

This limitation fueled the rise of reactive programming in Java, where frameworks like RxJava and Project Reactor use non-blocking I/O to handle thousands of requests with a few threads. While performant, reactive programming introduced “callback hell,” difficult debugging, and complex stack traces. Project Loom promised a solution: keep the simple synchronous programming model but detach the Java thread from the OS thread.

How Virtual Threads Work

Virtual threads are user-mode threads scheduled by the JVM, not the OS. They are lightweight: their stacks live on the Java heap and grow and shrink as needed, so an idle virtual thread costs far less memory than a platform thread. The JVM multiplexes a large number of virtual threads onto a small pool of OS threads called carrier threads (by default, a ForkJoinPool whose parallelism equals the number of available processors). When a virtual thread performs a blocking I/O operation, such as a database call or an HTTP request, the JVM unmounts it from its carrier, leaving the carrier free to run other virtual threads. Your code still blocks, but the blocking no longer ties up an OS thread.
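Besides executors, the Thread API itself can create virtual threads. The following sketch, assuming Java 21 or later, starts a few named virtual threads with Thread.ofVirtual() and checks isVirtual() from inside each one; the class name, the method name, and the "worker-" prefix are illustrative only.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ConcurrentLinkedQueue;

public class VirtualThreadBasics {

    // Starts `count` named virtual threads via the Thread.Builder API (Java 21+)
    // and returns "name:isVirtual" as reported by each thread about itself.
    public static List<String> runNamedVirtualThreads(int count) {
        var seen = new ConcurrentLinkedQueue<String>();
        // name(prefix, start) appends an auto-incremented counter: worker-0, worker-1, ...
        Thread.Builder builder = Thread.ofVirtual().name("worker-", 0);
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            threads.add(builder.start(() -> {
                Thread current = Thread.currentThread();
                seen.add(current.getName() + ":" + current.isVirtual());
            }));
        }
        for (Thread t : threads) {
            try {
                t.join(); // wait for each virtual thread to finish
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return new ArrayList<>(seen);
    }

    public static void main(String[] args) throws InterruptedException {
        // Shortcut for starting a single unnamed virtual thread
        Thread vt = Thread.startVirtualThread(
                () -> System.out.println("virtual? " + Thread.currentThread().isVirtual()));
        vt.join();
        runNamedVirtualThreads(3).stream().sorted().forEach(System.out::println);
    }
}
```

Thread.startVirtualThread is convenient for one-off tasks, while a builder is handy when you want consistent names for logs and thread dumps.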

Here is a basic example of creating and running virtual threads with an executor. It is a useful starting point whether you are learning Java on your own or upgrading legacy code.

```java
import java.time.Duration;
import java.util.concurrent.Executors;
import java.util.stream.IntStream;

public class VirtualThreadDemo {

    public static void main(String[] args) {
        long start = System.currentTimeMillis();

        // Create an executor that starts a new virtual thread for every task
        // (final API since Java 21, JEP 444)
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            // Launch 10,000 tasks
            IntStream.range(0, 10_000).forEach(i -> {
                executor.submit(() -> {
                    try {
                        // Simulate a blocking I/O operation (e.g., a DB call)
                        Thread.sleep(Duration.ofMillis(100));
                        return i;
                    } catch (InterruptedException e) {
                        throw new RuntimeException(e);
                    }
                });
            });
        } // close() waits here until all submitted tasks have finished

        long end = System.currentTimeMillis();
        System.out.println("Finished 10,000 tasks in " + (end - start) + "ms");
    }
}
```

With one platform thread per task, the same program would need 10,000 OS threads, which, depending on the machine and OS limits, may exhaust memory or cause heavy context switching. With virtual threads it runs comfortably and typically finishes in little more than the 100 ms each task sleeps, plus some scheduling overhead. This scalability matters for the whole Java ecosystem, because libraries written in the ordinary blocking style can now handle massive concurrency.
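For comparison, the traditional approach runs the same blocking workload on a bounded pool of platform threads. This sketch, assuming Java 21 or later, times 1,000 tasks that each sleep 100 ms, first on a fixed pool of 100 platform threads and then on one virtual thread per task; the task count, pool size, and class name are hypothetical. The pool needs at least ten rounds of sleeps (about a second), while the virtual-thread run usually finishes in a little over 100 ms.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.stream.IntStream;

public class PlatformVsVirtual {

    // Submits `tasks` jobs that each block for `sleep`, waits for all of them,
    // and returns the elapsed wall-clock time in milliseconds.
    static long timeWorkload(ExecutorService executor, int tasks, Duration sleep) {
        long start = System.nanoTime();
        // ExecutorService.close() (Java 19+) waits for all submitted tasks
        try (executor) {
            IntStream.range(0, tasks).forEach(i -> executor.submit(() -> {
                Thread.sleep(sleep); // stand-in for blocking I/O
                return i;
            }));
        }
        return Duration.ofNanos(System.nanoTime() - start).toMillis();
    }

    // Traditional approach: a bounded pool of platform (OS) threads
    public static long platformMillis(int tasks, int poolSize) {
        return timeWorkload(Executors.newFixedThreadPool(poolSize), tasks, Duration.ofMillis(100));
    }

    // Virtual threads: a new virtual thread per task, no pool
    public static long virtualMillis(int tasks) {
        return timeWorkload(Executors.newVirtualThreadPerTaskExecutor(), tasks, Duration.ofMillis(100));
    }

    public static void main(String[] args) {
        System.out.println("platform (100 threads): " + platformMillis(1_000, 100) + " ms");
        System.out.println("virtual (per task):     " + virtualMillis(1_000) + " ms");
    }
}
```

Exact timings depend on the machine; what matters is that the pool's throughput is capped by its size, while virtual threads let every task wait at the same time.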

Implementation in Modern Frameworks

The true power of virtual threads is realized when they are integrated into frameworks like Spring Boot, Quarkus, or Helidon. Starting with Spring Boot 3.2 (running on Java 21), enabling virtual threads is often as simple as setting one configuration property. The shift affects everything from Hibernate (handling database connections) to Spring AI (handling long-running AI model inference requests).

Configuring Spring Boot for Virtual Threads

For Spring developers, the integration removes much of the need for complex @Async configurations or reactive WebClient chains for simple I/O-bound tasks. You can keep using the embedded Tomcat server and the blocking servlet programming model, while Tomcat handles each request on a virtual thread.
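The idea behind the Tomcat integration, handing the server an executor that creates one virtual thread per request, is not Spring-specific. As a minimal sketch, assuming Java 21 or later, the JDK's built-in com.sun.net.httpserver server can be configured the same way; the /hello path, the loopback address, and the class name are illustrative. The handler reports whether it ran on a virtual thread.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class VirtualThreadHttpServer {

    // Starts the JDK's built-in HTTP server with a virtual-thread-per-task executor,
    // sends one request to it, and returns the response body.
    public static String roundTrip() {
        ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor();
        HttpServer server = null;
        try {
            // Port 0 lets the OS pick a free port
            server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
            server.createContext("/hello", exchange -> {
                // Plain blocking code is fine here: the handler runs on a virtual thread
                byte[] body = ("virtual=" + Thread.currentThread().isVirtual())
                        .getBytes(StandardCharsets.UTF_8);
                exchange.sendResponseHeaders(200, body.length);
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            });
            // Same idea as the Tomcat customizer: every exchange is handled by this executor
            server.setExecutor(executor);
            server.start();

            int port = server.getAddress().getPort();
            HttpClient client = HttpClient.newBuilder()
                    .version(HttpClient.Version.HTTP_1_1)
                    .build();
            HttpRequest request = HttpRequest.newBuilder(
                    URI.create("http://127.0.0.1:" + port + "/hello")).build();
            return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            if (server != null) {
                server.stop(0);
            }
            executor.close(); // waits for any handler still running
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip());
    }
}
```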

Below is a practical example of a Spring Boot application configuration and a controller that benefits from virtual threads. This setup is increasingly common in Java microservices.

```java
package com.example.virtualthreads;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.boot.web.embedded.tomcat.TomcatProtocolHandlerCustomizer;
import org.springframework.context.annotation.Bean;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;
import org.springframework.web.client.RestClient;

import java.util.concurrent.Executors;

@SpringBootApplication
public class HighScaleApp {

    public static void main(String[] args) {
        SpringApplication.run(HighScaleApp.class, args);
    }

    // In Spring Boot 3.2+ on Java 21, you can simply set
    //   spring.threads.virtual.enabled=true
    // in application.properties.
    // For custom setups, or Spring Boot versions before 3.2, you may see an explicit customizer instead:
    @Bean
    public TomcatProtocolHandlerCustomizer<?> protocolHandlerVirtualThreadExecutorCustomizer() {
        // Tomcat hands each incoming request to this executor, so every request gets its own virtual thread
        return protocolHandler -> protocolHandler.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
    }
}

@RestController
class ExternalApiController {

    private final RestClient restClient;

    // RestClient (Spring Framework 6.1+, i.e. Spring Boot 3.2+) is a synchronous, blocking HTTP client
    public ExternalApiController(RestClient.Builder builder) {
        this.restClient = builder.baseUrl("https://api.example.com").build();
    }

    @GetMapping("/fetch-data")
    public String fetchData() {
        // This is ordinary blocking code.
        // On a platform thread, the OS thread sits idle until the response arrives.
        // On a virtual thread, the virtual thread unmounts while waiting and its carrier runs other work.
        String response = restClient.get()
                .uri("/slow-endpoint")
                .retrieve()
                .body(String.class);
        return "Processed: " + response;
    }
}
```

This simplicity is deceptive. Under the hood, while restClient waits for the response, the virtual thread unmounts from its carrier. This can let a single instance handle tens of thousands of concurrent requests, a level of concurrency that previously required non-blocking stacks such as Netty (used by WebFlux). This is significant for Java EE applications and legacy migration projects, which can gain scalability without rewriting their code in a reactive style.
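To see why blocking calls stay cheap, the sketch below parks 10,000 virtual threads on the same CountDownLatch at once, standing in for 10,000 requests waiting on a slow upstream service. The default scheduler's parallelism equals the number of available processors, so all tasks can be blocked simultaneously only because each waiting virtual thread unmounts. It assumes Java 21 or later; the class name and the 30-second safety timeout are hypothetical.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class ConcurrentBlocking {

    // Returns true if all `tasks` virtual threads reached their blocking call
    // before any of them was released, i.e. they were all parked at the same time.
    public static boolean allBlockedAtOnce(int tasks) {
        CountDownLatch started = new CountDownLatch(tasks);
        CountDownLatch release = new CountDownLatch(1);
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    started.countDown();
                    release.await(); // parks this virtual thread; its carrier moves on
                    return null;
                });
            }
            try {
                // Wait until every task is blocked on `release`
                return started.await(30, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            } finally {
                release.countDown(); // unblock everything so close() can return
            }
        }
    }

    public static void main(String[] args) {
        System.out.println("Available processors (default carrier parallelism): "
                + Runtime.getRuntime().availableProcessors());
        System.out.println("10,000 tasks blocked at once: " + allBlockedAtOnce(10_000));
    }
}
```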

Advanced Techniques: Structured Concurrency

Virtual threads are just the foundation. The companion feature is Structured Concurrency, an API for coordinating these threads. It treats multiple tasks running in different threads as a single unit of work, which simplifies error handling and cancellation, two common pain points in Java concurrency.

In Java 21, Structured Concurrency is a preview API (JEP 453), not a final one, so you must enable preview features: compile with javac --release 21 --enable-preview and run with java --enable-preview (in Maven or Gradle, pass --enable-preview to both the compiler and the runtime). The API has been revised in later JDK releases, so the example below targets the Java 21 preview. This pattern is essential for orchestrating complex workflows, such as LangChain4j applications that issue several LLM calls in parallel.

Using StructuredTaskScope

With the ShutdownOnFailure policy, StructuredTaskScope cancels the remaining subtasks automatically as soon as one fails. This prevents the thread leaks common in Java 8-era concurrency code, where an abandoned Future could keep running after its caller had given up.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.StructuredTaskScope;
import java.util.concurrent.StructuredTaskScope.Subtask;

// Java 21 preview API: compile and run with --enable-preview
public class StructuredConcurrencyDemo {

    record UserData(String profile, String preferences) {}

    public UserData fetchUserData(String userId) throws InterruptedException, ExecutionException {
        // ShutdownOnFailure cancels the remaining subtasks as soon as one fails
        try (var scope = new StructuredTaskScope.ShutdownOnFailure()) {
            // Each fork() runs its subtask in a new virtual thread
            Subtask<String> profileTask = scope.fork(() -> fetchUserProfile(userId));
            Subtask<String> prefsTask = scope.fork(() -> fetchUserPreferences(userId));

            // Wait until both subtasks finish or the first one fails
            scope.join();
            // Rethrow the first failure (wrapped in ExecutionException), if any
            scope.throwIfFailed();

            // Both subtasks succeeded, so get() is safe here
            return new UserData(profileTask.get(), prefsTask.get());
        }
    }

    private String fetchUserProfile(String id) throws InterruptedException {
        Thread.sleep(100); // Simulate DB latency
        return "Profile-" + id;
    }

    private String fetchUserPreferences(String id) throws InterruptedException {
        Thread.sleep(150); // Simulate API latency
        return "Prefs-" + id;
    }
}
```

This pattern is significantly cleaner than nesting CompletableFuture callbacks, and it follows a simple principle: code should read top to bottom. Because the subtasks are ordinary blocking methods, they can also be unit-tested with familiar tools such as JUnit and Mockito.
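If you cannot enable preview features, a similar fan-out works with final Java 21 APIs: submit each call to a virtual-thread-per-task executor and block on the resulting Futures. The sketch below mirrors the hypothetical fetch methods of the structured example; the class name is illustrative. The trade-off is that it lacks ShutdownOnFailure semantics: if one call fails, the other is not cancelled, and closing the executor waits for it to finish.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class VirtualThreadFanOut {

    record UserData(String profile, String preferences) {}

    // Fans out two blocking calls, each on its own virtual thread, using only final Java 21 APIs.
    // Unlike ShutdownOnFailure, a failure in one call does not cancel the other.
    public static UserData fetchUserData(String userId) throws InterruptedException, ExecutionException {
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            Future<String> profile = executor.submit(() -> fetchUserProfile(userId));
            Future<String> prefs = executor.submit(() -> fetchUserPreferences(userId));
            // get() blocks until each result is ready, or rethrows a failure as ExecutionException
            return new UserData(profile.get(), prefs.get());
        } // close() waits for any subtask that is still running
    }

    // Hypothetical stand-ins for a database and a remote API
    private static String fetchUserProfile(String id) throws InterruptedException {
        Thread.sleep(100);
        return "Profile-" + id;
    }

    private static String fetchUserPreferences(String id) throws InterruptedException {
        Thread.sleep(150);
        return "Prefs-" + id;
    }

    public static void main(String[] args) throws Exception {
        System.out.println(fetchUserData("42"));
    }
}
```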

Best Practices, Pitfalls, and Optimization

While virtual threads are powerful, they are not a silver bullet. Performance discussions in the JVM community highlight specific scenarios where virtual threads can degrade performance if used incorrectly. Understanding these pitfalls is crucial whichever OpenJDK distribution you run, whether Amazon Corretto, Azul Zulu, or BellSoft Liberica.

The “Pinning” Problem

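The most critical issue to be aware of is “pinning.” A virtual thread is pinned to its carrier thread if it performs a blocking operation while inside a synchronized block or method, or while running a native method. When pinned, the JVM cannot unmount the virtual thread, so the underlying OS thread is blocked as well, which negates the benefits of virtual threads. JDK 24 (JEP 491) removed pinning for synchronized in most cases, but it still applies on Java 21 through 23, and native methods and foreign function calls still pin.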
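Before rewriting locks, it helps to confirm that pinning actually happens. Java Flight Recorder emits a jdk.VirtualThreadPinned event when a virtual thread blocks while pinned for longer than a threshold. The sketch below, assuming Java 21 or later with the jdk.jfr module available, records that event around a deliberately pinned virtual thread and counts the results; the class name, the 10 ms threshold, and the 50 ms sleep are illustrative choices. Expect a non-zero count on JDK 21 through 23 and typically zero on JDK 24 and later, where synchronized no longer pins.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.Duration;
import jdk.jfr.Recording;
import jdk.jfr.consumer.RecordingFile;

public class PinningDetector {

    // Runs one virtual thread that sleeps inside a synchronized block while a JFR
    // recording is active, then counts the jdk.VirtualThreadPinned events captured.
    public static long countPinnedEvents() {
        try {
            Path file = Files.createTempFile("pinning", ".jfr");
            try (Recording recording = new Recording()) {
                // Only report pinned intervals longer than 10 ms (illustrative threshold)
                recording.enable("jdk.VirtualThreadPinned").withThreshold(Duration.ofMillis(10));
                recording.start();

                Object monitor = new Object();
                Thread vt = Thread.ofVirtual().start(() -> {
                    synchronized (monitor) {
                        try {
                            Thread.sleep(50); // blocks while holding a monitor
                        } catch (InterruptedException e) {
                            Thread.currentThread().interrupt();
                        }
                    }
                });
                vt.join();

                recording.stop();
                recording.dump(file); // write the captured events to the temp file
            }
            try {
                return RecordingFile.readAllEvents(file).stream()
                        .filter(e -> e.getEventType().getName().equals("jdk.VirtualThreadPinned"))
                        .count();
            } finally {
                Files.deleteIfExists(file);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // Non-zero on JDK 21-23; JDK 24+ (JEP 491) no longer pins in this case
        System.out.println("Pinned events: " + countPinnedEvents());
    }
}
```

In production you would more likely enable the same event in a continuous JFR recording than instrument code like this.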

To fix this on Java 21 through 23, the usual recommendation from the JDK team and concurrency experts is to replace synchronized with ReentrantLock in code paths where virtual threads block while holding a lock.

```java
import java.util.concurrent.locks.ReentrantLock;

public class PinningAvoidance {

    private final ReentrantLock lock = new ReentrantLock();
    private int counter = 0;

    // BAD on Java 21-23: sleeping inside synchronized pins the carrier thread
    public synchronized void badIncrement() {
        try {
            Thread.sleep(100);
            counter++;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // GOOD: blocking while holding a ReentrantLock lets the virtual thread unmount
    public void goodIncrement() {
        lock.lock();
        try {
            Thread.sleep(100);
            counter++;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            lock.unlock();
        }
    }
}
```

Pooling and ThreadLocals

Another common mistake involves thread pooling. Code written for Java 11 or Java 17 often depended on carefully tuned thread pools. Do not pool virtual threads: creating one is so cheap that a pool only adds overhead. Always create a new virtual thread per task.
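If the reason for a pool was to cap concurrency, for example to avoid overwhelming a database, the JDK's virtual-thread guidance is to use a dedicated construct such as java.util.concurrent.Semaphore instead. A minimal sketch, assuming Java 21 or later; the 10-permit limit, the 10 ms sleep standing in for a call to a constrained resource, and the class name are hypothetical.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

public class LimitWithSemaphore {

    // Runs `tasks` jobs with one virtual thread per task, but lets at most `permits`
    // of them into the guarded section at once. Returns the highest concurrency observed.
    public static int maxObservedConcurrency(int tasks, int permits) {
        Semaphore semaphore = new Semaphore(permits);
        AtomicInteger inside = new AtomicInteger();
        AtomicInteger maxInside = new AtomicInteger();
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    semaphore.acquire(); // waiting here parks (unmounts) the virtual thread
                    try {
                        int now = inside.incrementAndGet();
                        maxInside.accumulateAndGet(now, Math::max);
                        Thread.sleep(10); // stand-in for a call to the constrained resource
                    } finally {
                        inside.decrementAndGet();
                        semaphore.release();
                    }
                    return null;
                });
            }
        } // close() waits for all tasks
        return maxInside.get();
    }

    public static void main(String[] args) {
        System.out.println("Max concurrent calls: " + maxObservedConcurrency(200, 10));
    }
}
```

All 200 virtual threads exist at once, but only ten ever touch the guarded resource simultaneously; the rest wait cheaply on the semaphore.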

Be cautious with ThreadLocal, too. Because an application may spawn millions of virtual threads, storing heavy objects in ThreadLocal can lead to massive heap usage (footprint bloat), and per-thread caches stop paying off when every task runs on a fresh thread. Consider Scoped Values (a Project Loom preview API in Java 21) as a lighter alternative.
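To see why ThreadLocal caches lose their value, the sketch below counts how often a ThreadLocal initializer runs under a small fixed pool of platform threads versus one virtual thread per task. The 16 KB buffer, the pool size of 4, and the class name are hypothetical stand-ins for an expensive per-thread object; it assumes Java 21 or later.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

public class ThreadLocalCost {

    // Counts how many "expensive" per-thread objects the initializer allocates
    static final AtomicInteger CREATED = new AtomicInteger();

    // Hypothetical expensive per-thread object (e.g. a large buffer or a formatter)
    static final ThreadLocal<byte[]> BUFFER = ThreadLocal.withInitial(() -> {
        CREATED.incrementAndGet();
        return new byte[16 * 1024];
    });

    // Runs `tasks` jobs that each read the ThreadLocal once; returns the allocation count
    static int countAllocations(ExecutorService executor, int tasks) {
        CREATED.set(0);
        try (executor) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> BUFFER.get().length);
            }
        } // close() waits for all tasks
        return CREATED.get();
    }

    // Pooled platform threads reuse their ThreadLocal value across tasks
    public static int pooledPlatform(int tasks) {
        return countAllocations(Executors.newFixedThreadPool(4), tasks);
    }

    // Each virtual thread is new, so every task allocates its own value
    public static int virtualPerTask(int tasks) {
        return countAllocations(Executors.newVirtualThreadPerTaskExecutor(), tasks);
    }

    public static void main(String[] args) {
        System.out.println("fixed pool of 4: " + pooledPlatform(1_000) + " allocations");
        System.out.println("virtual per task: " + virtualPerTask(1_000) + " allocations");
    }
}
```

With the pool, at most four buffers are ever created; with virtual threads, every task allocates one, and if many such tasks are alive at once, all of those buffers sit on the heap simultaneously.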

Ecosystem Impact: From JobRunr to JavaFX

The ripple effect of virtual threads is being felt across the entire ecosystem.

Background job processing libraries such as JobRunr are adapting to use virtual threads for higher throughput.

In JavaFX, the UI thread remains a platform thread, but background tasks in desktop applications can use virtual threads to stay responsive without added complexity.

Even in the embedded space, Java ME and Java Card developers are watching these developments, though resource constraints there make adoption slower than in cloud-native environments.

For enterprise teams running Oracle Java or Adoptium builds, the fact that virtual threads are final in an LTS release (Java 21) makes them production ready. They also flatten the learning curve for new developers, which is good news for Java education.

Conclusion

Java Virtual Threads represent a return to simplicity. They allow developers to write code that looks synchronous—easy to read, debug, and profile—while achieving the scalability previously reserved for asynchronous, reactive systems. By decoupling the application unit of concurrency from the operating system unit of concurrency, Java has secured its place in the future of high-scale cloud computing.

As you adopt virtual threads, monitor your applications with tools like Java Flight Recorder (JFR). Keep an eye on Project Panama and Project Valhalla as well: Loom handles concurrency, Panama handles native access, and Valhalla handles memory layout. Together, these three projects drive much of Java's modernization. Whether you are building AI agents with LangChain4j, microservices with Spring Boot, or enterprise apps with Jakarta EE, virtual threads are a new foundation for performance.