The Java ecosystem is in the midst of a renaissance, driven by a rapid release cadence and the features introduced in recent Long-Term Support (LTS) releases like Java 21. For enterprise developers, this evolution reaches a pivotal moment with the convergence of modern JVM capabilities and the standards of Jakarta EE. Jakarta EE 11, together with runtimes such as Apache Tomcat 11 that implement its web specifications, is set to improve performance and scalability for server-side Java. This combination brings Project Loom’s virtual threads to the forefront of enterprise development, promising to solve long-standing concurrency challenges with remarkable elegance.

This shift allows developers to write simple, synchronous, blocking code that scales massively, effectively dismantling the need for complex asynchronous patterns in many common use cases. This article dives deep into this new paradigm, exploring the core concepts of Jakarta EE 11 and virtual threads, demonstrating their practical implementation with code examples, and outlining the best practices necessary to harness their full potential. Prepare to see how the “thread-per-request” model is being revitalized to handle millions of concurrent connections, ushering in a new era of high-performance enterprise applications.

Jakarta EE 11: Embracing the Future of the JVM

For years, the evolution of Java EE, and now Jakarta EE, has been about standardizing robust APIs for enterprise needs. With Jakarta EE 11, the platform takes a significant leap forward by aligning itself directly with the modern features of the Java platform, most notably those introduced in Java 21. This alignment is not just about compatibility; it’s about fundamentally integrating new JVM paradigms to enhance developer productivity and application performance.

What’s New in Jakarta EE 11?

The primary theme of Jakarta EE 11 is the strategic adoption of modern Java features. While specifications across the board are being updated, the most impactful change for concurrency comes from the Jakarta Concurrency 3.1 specification, which adds support for virtual threads: managed executors and thread factories can be configured to use virtual threads when the runtime is on Java 21 or later. For the first time, the enterprise standard provides a managed, standardized way to use this concurrency model within Jakarta EE applications. Structured Concurrency, the other headline feature from Project Loom, is still a preview API in Java 21 and is used directly from the JDK rather than through a Jakarta specification. Together, these features signal a shift from reactive and callback-heavy code towards a simpler, more maintainable synchronous style that performs at scale.

Understanding Virtual Threads (Project Loom)

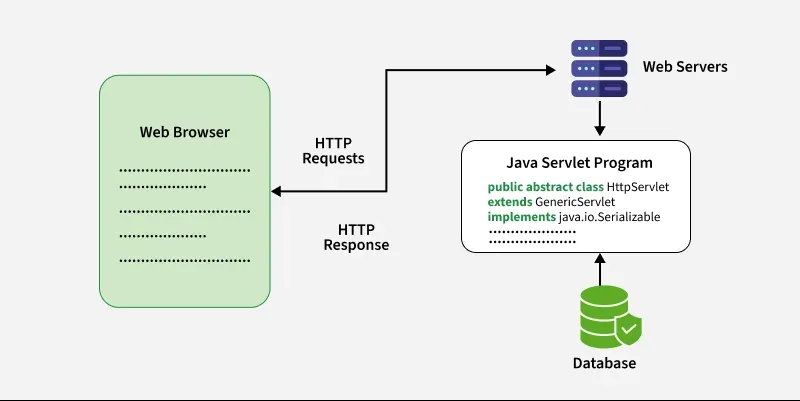

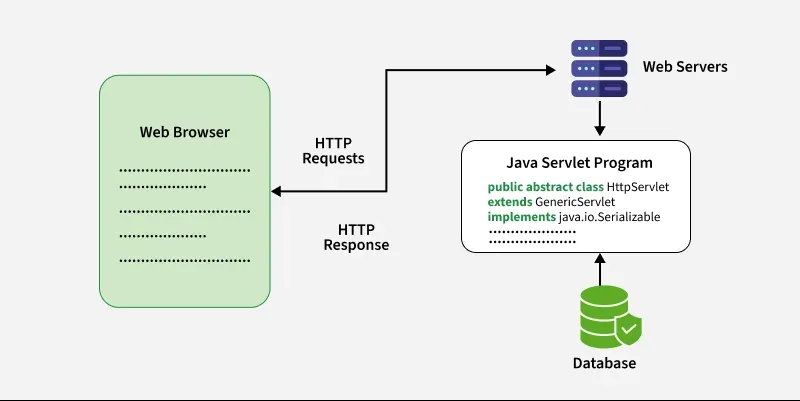

To appreciate the impact of Jakarta EE 11, one must first understand virtual threads. For decades, Java concurrency has been built on platform threads, which are thin wrappers around operating system (OS) threads. These are heavyweight resources; an OS can only handle a few thousand of them efficiently. This limitation led to the traditional “thread-per-request” model becoming a bottleneck for applications with high I/O (e.g., microservice calls, database queries).

Virtual threads, the cornerstone of Project Loom, solve this problem. They are lightweight, user-mode threads managed by the JVM, not the OS. A small pool of platform threads (called carrier threads) can run a very large number of virtual threads by mounting and unmounting them as they perform blocking operations. When a virtual thread blocks on I/O, the JVM unmounts it from its carrier thread and can use that carrier thread to run another virtual thread. A blocked virtual thread therefore costs little more than the memory for its stack, which makes blocking operations cheap from a resource perspective.

Here’s a simple comparison of creating a platform thread versus a virtual thread:

```java
import java.time.Duration;

public class ThreadComparison {

    public static void main(String[] args) throws InterruptedException {
        // Traditional platform thread
        Thread platformThread = Thread.ofPlatform().name("my-platform-thread").start(() -> {
            System.out.println("Running on platform thread: " + Thread.currentThread());
        });
        platformThread.join();

        // New virtual thread (requires Java 21+)
        Thread virtualThread = Thread.ofVirtual().name("my-virtual-thread").start(() -> {
            System.out.println("Running on virtual thread: " + Thread.currentThread());
            try {
                // Simulating a blocking I/O call
                Thread.sleep(Duration.ofSeconds(1));
            } catch (InterruptedException e) {
                // Restore the interrupt flag instead of swallowing the interruption
                Thread.currentThread().interrupt();
            }
        });
        virtualThread.join();

        System.out.println("Both threads have completed.");
    }
}
```

While the creation looks similar, the underlying mechanics are worlds apart. Virtual threads were finalized in Java 21 (JEP 444), so they can be used in production without preview flags, paving the way for their adoption across the broader Java ecosystem.
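To make the scale claim more concrete, here is a minimal sketch that runs many blocking tasks with one virtual thread per task, using the JDK's `Executors.newVirtualThreadPerTaskExecutor()`. The class name, task count, and sleep duration are illustrative assumptions, and `Thread.sleep` only stands in for real blocking I/O.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

class VirtualThreadScale {

    // Runs taskCount tasks that each block for blockTime, one virtual thread
    // per task, and returns how many of them finished.
    static int runBlockingTasks(int taskCount, Duration blockTime) {
        AtomicInteger completed = new AtomicInteger();
        // try-with-resources calls close(), which waits for all submitted tasks.
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < taskCount; i++) {
                executor.submit(() -> {
                    try {
                        Thread.sleep(blockTime); // stands in for a blocking I/O call
                        completed.incrementAndGet();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
        }
        return completed.get();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        int done = runBlockingTasks(10_000, Duration.ofMillis(500));
        long elapsedMs = Duration.ofNanos(System.nanoTime() - start).toMillis();
        System.out.println(done + " tasks completed in " + elapsedMs + " ms");
    }
}
```

Running the same workload on a small fixed pool of platform threads would take far longer, because each sleeping task would hold a pool thread for the full duration of its wait.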

Putting Virtual Threads to Work in Jakarta EE Applications

The true power of virtual threads is realized when they are integrated seamlessly into the application server runtime. This is precisely what servers like Apache Tomcat 11, built for Jakarta EE 11, are designed to do. They can be configured to use a virtual thread for every incoming request, revolutionizing the classic “thread-per-request” model.

The “Thread-per-Request” Model, Reimagined

In the past, to avoid exhausting the server’s platform thread pool, developers turned to complex, non-blocking, and reactive programming models. These models, while powerful, often lead to harder-to-read and harder-to-debug code (often dubbed “callback hell”).

With virtual threads, this complexity is no longer a prerequisite for scalability. An application server can now spawn a new virtual thread for each of the tens of thousands of concurrent requests it receives. When a request handler makes a blocking database call using JDBC or calls another REST service, the virtual thread is unmounted, freeing the underlying carrier thread to do other work. The developer writes simple, sequential, blocking code, and the runtime provides the scalability automatically. This is a major step for Java performance and a welcome relief for developers maintaining complex reactive systems.
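The idea can be sketched without an application server. The example below uses the JDK's built-in `com.sun.net.httpserver.HttpServer` and hands it a virtual-thread-per-task executor, so each request handler runs on its own virtual thread. This is only an illustration of the model, not how Tomcat or any Jakarta EE runtime is configured; the class name and the `/whoami` path are made up for the example.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.Executors;

class VirtualThreadPerRequestDemo {

    // Starts a server on an ephemeral port; every request is dispatched
    // to a task on the virtual-thread-per-task executor.
    static HttpServer startServer() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
        server.createContext("/whoami", exchange -> {
            // Report whether the handler is running on a virtual thread.
            byte[] body = ("virtual=" + Thread.currentThread().isVirtual())
                    .getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }

    // Sends one blocking GET to the demo endpoint and returns the response body.
    static String callWhoAmI(HttpServer server) throws IOException, InterruptedException {
        URI uri = URI.create("http://127.0.0.1:" + server.getAddress().getPort() + "/whoami");
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(uri).GET().build();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    public static void main(String[] args) throws Exception {
        HttpServer server = startServer();
        try {
            System.out.println(callWhoAmI(server));
        } finally {
            server.stop(0);
        }
    }
}
```

The handler itself is ordinary blocking code. The only virtual-thread-specific line is the `setExecutor` call, which mirrors how a server-level switch can change the threading model without touching application code.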

Code Example: A Scalable Jakarta REST Endpoint

Consider a standard Jakarta REST (formerly JAX-RS) resource. The beauty of this new model is that your application code often doesn’t need to change at all. The following code, when deployed on a virtual-thread-enabled server, will scale to handle a massive number of concurrent requests without modification.

```java
import jakarta.enterprise.context.ApplicationScoped;
import jakarta.ws.rs.GET;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.core.MediaType;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.net.URI;

@Path("/data")
@ApplicationScoped
public class DataResource {

    private final HttpClient client = HttpClient.newHttpClient();

    @GET
    @Produces(MediaType.TEXT_PLAIN)
    public String getExternalData() throws Exception {
        // This looks like a simple, blocking network call.
        // On a virtual thread, the underlying carrier thread is not blocked.
        System.out.println("Handling request on: " + Thread.currentThread());

        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create("https://api.example.com/data"))
                .GET()
                .build();

        // The virtual thread waits here, but its carrier (OS) thread is free.
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());

        // Return a short preview; guard against bodies shorter than 20 characters.
        String body = response.body();
        return "Data fetched successfully: " + body.substring(0, Math.min(20, body.length())) + "...";
    }
}
```

In this example, the `client.send()` call is a blocking I/O operation. In a traditional setup, this would tie up a precious platform thread for the duration of the call. With virtual threads, the JVM parks the virtual thread and uses the carrier thread for other tasks. This simple, easy-to-read code now has scalability characteristics previously only achievable with complex asynchronous APIs. The same approach is being adopted beyond Jakarta EE, for example in Spring Boot, where virtual thread support is a major feature.

Leveraging Structured Concurrency and Modern APIs

While virtual threads simplify scalability for standard request handling, applications often need to coordinate several concurrent tasks within a single request. Jakarta Concurrency 3.1 lets managed executors run such tasks on virtual threads, and the JDK’s Structured Concurrency API, a preview feature in Java 21, provides a robust framework for managing them as a group within your application logic.

Jakarta Concurrency 3.1: A New Era of Reliability

Structured Concurrency is an API that simplifies concurrent programming by treating multiple tasks running in different threads as a single unit of work. It ensures that if one task fails, the others can be reliably cancelled, and the parent thread only continues after all child threads have terminated. This prevents thread leaks and makes concurrent code much easier to reason about and debug.

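Here is an example of using `StructuredTaskScope` within a Jakarta CDI bean to fetch data from two different services in parallel. This is a common pattern in microservice architectures. Because the API is a preview feature in Java 21, the code must be compiled and run with `--enable-preview`, and its shape may change in later JDK releases.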

```java
import jakarta.enterprise.context.ApplicationScoped;
import jakarta.inject.Inject;
import java.util.concurrent.StructuredTaskScope;
import java.util.concurrent.StructuredTaskScope.Subtask;

// A record to hold the combined result
record UserProfile(String userDetails, String userOrders) {}

@ApplicationScoped
public class UserProfileService {

    @Inject
    private UserDetailsService userDetailsService; // Assume this is another CDI bean

    @Inject
    private OrderService orderService; // Assume this is another CDI bean

    // Uses the Java 21 preview API (JEP 453); requires --enable-preview.
    public UserProfile fetchUserProfile(String userId) throws Exception {
        // StructuredTaskScope ensures both tasks are treated as a single unit.
        // If one fails, the other is cancelled, and the scope closes.
        try (var scope = new StructuredTaskScope.ShutdownOnFailure()) {
            // Fork the first task to fetch user details
            Subtask<String> userDetails = scope.fork(() -> userDetailsService.fetchDetails(userId));

            // Fork the second task to fetch user orders
            Subtask<String> userOrders = scope.fork(() -> orderService.fetchOrders(userId));

            // Wait for both tasks to finish, or for the first failure.
            // join() itself does not rethrow a subtask's exception.
            scope.join();

            // Propagate the first failure, if any, as an ExecutionException
            scope.throwIfFailed();

            // Both succeeded, so combine the results
            return new UserProfile(userDetails.get(), userOrders.get());
        }
    }
}
```

This code is easier to read and reason about than chaining `CompletableFuture` instances, because the lifetime of both subtasks is confined to the `try` block and failures are handled in one place. Structured concurrency is a major step forward for writing safe and maintainable concurrent code.
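Since `StructuredTaskScope` is a preview API in Java 21, it can help to see a rough equivalent built only on finalized APIs. The sketch below fans out the same two calls on a virtual-thread-per-task executor. The class name and the two `fetch` methods are hypothetical stand-ins for the injected services, with `Thread.sleep` simulating remote latency.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class FanOutWithVirtualThreads {

    record UserProfile(String userDetails, String userOrders) {}

    // Hypothetical stand-in for a remote "user details" call.
    static String fetchDetails(String userId) throws InterruptedException {
        Thread.sleep(100);
        return "details-for-" + userId;
    }

    // Hypothetical stand-in for a remote "orders" call.
    static String fetchOrders(String userId) throws InterruptedException {
        Thread.sleep(100);
        return "orders-for-" + userId;
    }

    static UserProfile fetchUserProfile(String userId)
            throws InterruptedException, ExecutionException {
        // Each submitted task gets its own virtual thread; close() waits for both.
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            Future<String> details = executor.submit(() -> fetchDetails(userId));
            Future<String> orders = executor.submit(() -> fetchOrders(userId));
            // get() blocks the caller; a task failure surfaces as ExecutionException.
            return new UserProfile(details.get(), orders.get());
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(fetchUserProfile("42"));
    }
}
```

Unlike `ShutdownOnFailure`, this version does not cancel the sibling task when one fails; the executor's `close()` simply waits for it to finish. That difference is the main thing structured concurrency adds.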

Best Practices and Considerations for the New Paradigm

Adopting virtual threads is powerful, but it’s not a silver bullet. Developers need to be aware of new patterns and potential pitfalls to maximize the benefits and avoid performance issues.

When to Use (and Not Use) Virtual Threads

- Ideal for I/O-bound tasks: Virtual threads excel where the code spends most of its time waiting for network responses, database results, or file system operations. This covers the vast majority of enterprise application workloads.

- Not for CPU-bound tasks: For tasks that are computationally intensive (e.g., complex calculations, data transformations), virtual threads offer no advantage. The number of available CPU cores is the limiting factor, and traditional platform threads are still the right tool for this job.

Common Pitfalls to Avoid

- Thread Pinning: This is the most critical pitfall on Java 21. A virtual thread becomes “pinned” to its carrier platform thread while it executes code inside a `synchronized` block or method, or inside a native (JNI) call. If it blocks while pinned, the carrier thread is blocked as well and cannot run other virtual threads, effectively negating the benefits. For `synchronized` code that guards long or frequent blocking operations, the usual fix is to switch to `java.util.concurrent.locks.ReentrantLock`; short in-memory critical sections can stay as they are. JDK 24 and later remove most `synchronized` pinning (JEP 491).

- Over-reliance on Thread-Local Variables: Because an application can now have millions of virtual threads, using `ThreadLocal` can lead to massive memory consumption if not managed carefully, especially when each thread caches an expensive object. Java 21 includes Scoped Values as a preview API, intended as a more efficient alternative for sharing immutable data with the code a thread calls.

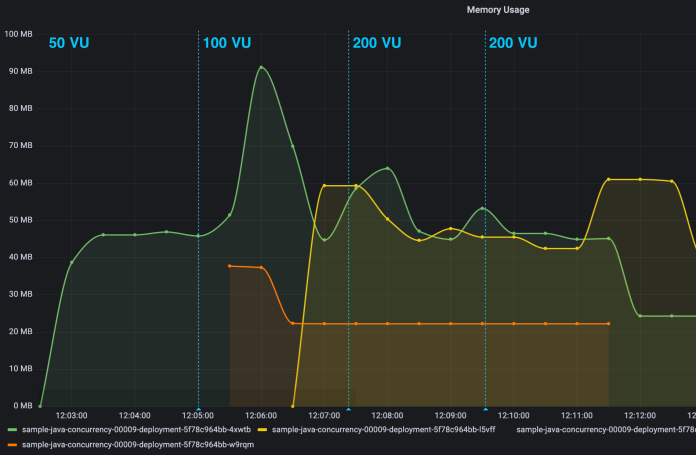

- Undersized Resource Pools: Your application can now accept thousands more concurrent requests. However, if your database connection pool (e.g., in Hibernate or another JPA provider) is still sized at 10 or 20, it will become the new bottleneck, with most virtual threads simply waiting for a connection. Review the size of connection pools and other limited resources against the concurrency you now expect, keeping in mind the limits of the backend itself. Where a resource cannot take more load, bound access to it explicitly (for example with a semaphore) rather than by limiting the number of threads.
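Two of the pitfalls above can be sketched together. In the example below, a `Semaphore` caps how many virtual threads call a scarce backend at once, and a `ReentrantLock` (rather than `synchronized`) guards a section that blocks. All names, limits, and sleep durations are assumptions made for illustration; `Thread.sleep` stands in for real I/O.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

class VirtualThreadFriendlyLimits {

    private final Semaphore backendPermits;
    private final ReentrantLock auditLock = new ReentrantLock();
    private final AtomicInteger inFlight = new AtomicInteger();
    private final AtomicInteger peakInFlight = new AtomicInteger();
    private int auditEntries; // guarded by auditLock

    VirtualThreadFriendlyLimits(int maxConcurrentBackendCalls) {
        this.backendPermits = new Semaphore(maxConcurrentBackendCalls);
    }

    void handleRequest() throws InterruptedException {
        // Bound access to the scarce backend; a waiting virtual thread unmounts.
        backendPermits.acquire();
        try {
            peakInFlight.accumulateAndGet(inFlight.incrementAndGet(), Math::max);
            try {
                Thread.sleep(Duration.ofMillis(20)); // simulated backend call
            } finally {
                inFlight.decrementAndGet();
            }
        } finally {
            backendPermits.release();
        }

        // ReentrantLock instead of synchronized: blocking while holding it
        // does not pin the virtual thread to its carrier.
        auditLock.lock();
        try {
            Thread.sleep(Duration.ofMillis(1)); // simulated blocking write
            auditEntries++;
        } finally {
            auditLock.unlock();
        }
    }

    int auditEntries() {
        auditLock.lock();
        try {
            return auditEntries;
        } finally {
            auditLock.unlock();
        }
    }

    int peakInFlight() {
        return peakInFlight.get();
    }

    // Runs the given number of simulated requests, one virtual thread each.
    static VirtualThreadFriendlyLimits run(int requests, int maxConcurrentBackendCalls) {
        var limits = new VirtualThreadFriendlyLimits(maxConcurrentBackendCalls);
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < requests; i++) {
                executor.submit(() -> {
                    limits.handleRequest();
                    return null;
                });
            }
        }
        return limits;
    }

    public static void main(String[] args) {
        var limits = run(200, 10);
        System.out.println("audit entries: " + limits.auditEntries()
                + ", peak concurrent backend calls: " + limits.peakInFlight());
    }
}
```

The number of virtual threads is left unbounded on purpose. The semaphore, not a thread pool, expresses how much load the backend can take.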

Conclusion

The arrival of Jakarta EE 11, supported by runtimes like Apache Tomcat 11, represents a major shift for the enterprise Java landscape. By integrating Java 21’s virtual threads into the platform, and by leaving room for structured concurrency as that API matures, it empowers developers to build highly scalable, resilient, and performant applications with simpler, more maintainable code. The long-standing trade-off between developer productivity and raw performance is being effectively erased for a huge class of I/O-bound applications.

The key takeaways are clear: the “thread-per-request” model is back and more powerful than ever; blocking I/O is no longer a scalability killer; and structured concurrency provides a safer, more robust way to manage complex tasks. As a developer, now is the time to familiarize yourself with these concepts. Start by upgrading your projects to use Java 21, explore Jakarta EE 11 compatible servers, and begin refactoring `synchronized` blocks that guard blocking operations. This is not just an incremental update; it is a paradigm shift that will shape the Java ecosystem for years to come.