The Dawn of a New Era in Java Concurrency

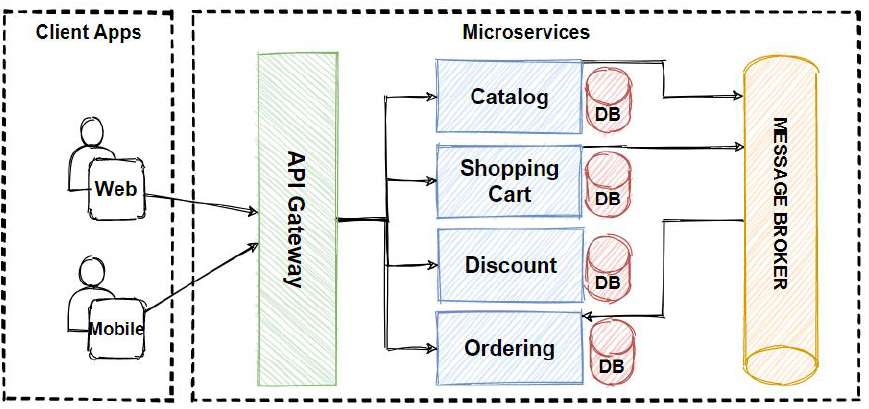

For decades, Java’s concurrency model has been a cornerstone of its power, enabling robust, multi-threaded applications. However, this model is built on a one-to-one mapping between Java threads and scarce operating system (OS) threads, and that has long limited scalability. The traditional “thread-per-request” architecture is simple to reason about, but it struggles under the load of modern microservices and I/O-bound applications. Every thread blocked on a database or network call holds on to an expensive OS thread, which caps the number of concurrent requests a server can handle.

Enter Project Loom. Its flagship feature, virtual threads, went through two preview releases (Java 19 and 20) after years of development. It is now final and production-ready in the Java 21 LTS release (JEP 444). This isn’t just an incremental update; it’s a paradigm shift. Virtual threads are lightweight, JVM-managed threads. They can dramatically increase the throughput of I/O-bound applications by letting hundreds of thousands, or even millions, of concurrent tasks share a handful of OS threads. Spring Boot 3.2 already offers first-class support for virtual threads, which makes the feature more accessible than ever. This article explains what virtual threads are, how to use them effectively in modern frameworks, and which best practices help unlock their full potential.

Section 1: Understanding the Core Concepts of Virtual Threads

To truly appreciate the impact of virtual threads, it’s essential to understand how they differ from the platform threads Java has used since its inception.

What Are Virtual Threads vs. Platform Threads?

A platform thread is the kind of java.lang.Thread we are all familiar with. It is a thin wrapper around an OS thread and occupies that OS thread for its entire lifetime. Creating a platform thread is relatively expensive: it requires a system call and reserves a large, fixed-size stack, on the order of a megabyte by default on 64-bit platforms. Consequently, applications can typically sustain only a few thousand platform threads, depending on memory and OS limits. This is why thread pooling has long been a necessity.

A virtual thread is also an instance of java.lang.Thread, but it is managed by the Java Virtual Machine (JVM) rather than the OS, and it has no dedicated OS thread. Instead, the JVM schedules virtual threads onto a small pool of platform threads known as carrier threads. By default this is a ForkJoinPool whose parallelism equals the number of available processors.

When a virtual thread performs a blocking operation, such as reading from a socket or waiting for a database query, the JVM unmounts it from its carrier thread. The carrier is then free to run another virtual thread. The original virtual thread stays parked until its operation completes. After that, it can be mounted on any available carrier thread to resume its work. Parking and unparking a virtual thread is far cheaper than blocking an OS thread, and that is the key to their scalability.
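To see mounting and unmounting in action, the sketch below starts one virtual thread and records its toString() before and after a blocking sleep. For a mounted virtual thread, the JDK’s toString() includes the name of its current carrier (for example ForkJoinPool-1-worker-1). The exact IDs and worker numbers vary from run to run, and after the sleep the thread may or may not resume on the same carrier. The class and thread names are illustrative.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class CarrierThreadDemo {

    /** Runs one virtual thread that blocks briefly and records how it looked before and after. */
    static List<String> observe() throws InterruptedException {
        List<String> seen = Collections.synchronizedList(new ArrayList<>());
        Thread vt = Thread.ofVirtual().name("demo-virtual").start(() -> {
            // While mounted, toString() looks like
            // "VirtualThread[#21,demo-virtual]/runnable@ForkJoinPool-1-worker-1"
            seen.add(Thread.currentThread().toString());
            try {
                Thread.sleep(100); // blocking call: the virtual thread unmounts from its carrier
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            // After unparking, it may be mounted on a different carrier
            seen.add(Thread.currentThread().toString());
        });
        vt.join();
        seen.add("isVirtual=" + vt.isVirtual());
        return seen;
    }

    public static void main(String[] args) throws InterruptedException {
        observe().forEach(System.out::println);
    }
}
```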

Creating and Running Your First Virtual Threads

The beauty of the virtual threads API is its simplicity and familiarity. You can create a virtual thread in a couple of straightforward ways.

The most direct method is the new Thread.ofVirtual() builder, or its shortcut Thread.startVirtualThread(Runnable). For managing many tasks, the recommended approach is an ExecutorService that creates a new virtual thread for each task, obtained from Executors.newVirtualThreadPerTaskExecutor().

```java
import java.time.Duration;
import java.util.concurrent.Executors;
import java.util.stream.IntStream;

public class VirtualThreadDemo {

    public static void main(String[] args) throws InterruptedException {
        // Method 1: Using a virtual-thread-per-task executor
        // This is the preferred way to manage many tasks.
        System.out.println("Starting tasks with Virtual Thread Executor...");
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            IntStream.range(0, 10_000).forEach(i -> {
                executor.submit(() -> {
                    Thread.sleep(Duration.ofSeconds(1));
                    System.out.println("Task " + i + " completed on thread: " + Thread.currentThread());
                    return i;
                });
            });
        } // executor.close() is called automatically and waits for all tasks to finish
        System.out.println("\nAll executor tasks are done.\n");

        // Method 2: Creating a single virtual thread directly
        System.out.println("Starting a single direct virtual thread...");
        Thread virtualThread = Thread.ofVirtual().start(() -> {
            System.out.println("Direct task running on thread: " + Thread.currentThread());
        });
        virtualThread.join(); // Wait for the single virtual thread to complete
        System.out.println("Direct virtual thread task is done.");
    }
}
```

In this example, we launch 10,000 tasks that each sleep for one second. Running them with one platform thread per task would require 10,000 OS threads. Depending on the machine and operating system, that may exhaust resources and crash the program. With virtual threads, all the sleeps overlap on a small number of carrier threads, so the batch takes roughly one second plus overhead.
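Pooling platform threads avoids creating 10,000 OS threads, but it does not avoid the wait. A fixed pool of N threads runs the tasks in rounds, so 10,000 one-second tasks on a pool of 200 would take about 10,000 / 200 × 1 s = 50 s. The sketch below checks that arithmetic at a smaller, arbitrary scale: 200 tasks of 100 ms on a pool of 10, which comes to about 2 s. It compares that against a virtual-thread-per-task executor. The class and method names are illustrative, and timings will vary by machine.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class ThroughputComparison {

    /** Submits `tasks` jobs that each sleep for `sleep`, waits for all of them, returns elapsed ms. */
    static long elapsedMillis(ExecutorService executor, int tasks, Duration sleep) {
        long start = System.nanoTime();
        try (executor) { // close() waits for every submitted task to finish
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    Thread.sleep(sleep); // stands in for blocking I/O
                    return null;
                });
            }
        }
        return Duration.ofNanos(System.nanoTime() - start).toMillis();
    }

    public static void main(String[] args) {
        int tasks = 200;
        Duration sleep = Duration.ofMillis(100);
        // 200 tasks / 10 threads = 20 rounds of 100 ms, so roughly 2 seconds
        System.out.println("Fixed pool of 10 platform threads: "
                + elapsedMillis(Executors.newFixedThreadPool(10), tasks, sleep) + " ms");
        // One virtual thread per task: all sleeps overlap, so roughly 100 ms plus overhead
        System.out.println("Virtual thread per task:           "
                + elapsedMillis(Executors.newVirtualThreadPerTaskExecutor(), tasks, sleep) + " ms");
    }
}
```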

Section 2: Practical Implementation in the Modern Java Ecosystem

Theory is one thing, but the real payoff comes from applying virtual threads in real-world applications. Major frameworks already ship integrations that make the transition smooth.

Unlocking Throughput in Spring Boot 3.2

One of the most significant developments in the Spring ecosystem is out-of-the-box support for virtual threads in Spring Boot 3.2. When running on Java 21 or later, you can enable them for your web application with a single line of configuration.

In your src/main/resources/application.properties file, add the following property:

```properties
# Enable virtual threads for handling web requests
spring.threads.virtual.enabled=true
```

With this property set (and Java 21 or later), Spring Boot configures, among other things, its embedded Tomcat server to run every incoming HTTP request on its own virtual thread. Controllers, services, and repositories that perform blocking I/O then no longer tie up a scarce platform thread while they wait, as long as the virtual thread is not pinned (see Section 3).
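Spring Boot handles this wiring for you. As a rough, dependency-free analogue (not Spring’s actual implementation), the JDK’s built-in com.sun.net.httpserver.HttpServer can be given a virtual-thread-per-task executor. Every request handler then runs on its own virtual thread. The /hello path and the class name are illustrative, and port 0 asks the OS for any free port.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.Executors;

public class VirtualThreadHttpServer {

    /** Starts a JDK HttpServer whose handlers each run on a new virtual thread. */
    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", port), 0);
        server.createContext("/hello", exchange -> {
            byte[] body = ("Handled on: " + Thread.currentThread()).getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        // Thread-per-request, but each "thread" is a cheap virtual thread
        server.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
        server.start();
        return server;
    }

    /** Sends one GET request to the running server and returns the response body. */
    static String fetch(HttpServer server) throws IOException, InterruptedException {
        URI uri = URI.create("http://127.0.0.1:" + server.getAddress().getPort() + "/hello");
        HttpClient client = HttpClient.newHttpClient();
        return client.send(HttpRequest.newBuilder(uri).build(), HttpResponse.BodyHandlers.ofString()).body();
    }

    public static void main(String[] args) throws Exception {
        HttpServer server = start(0); // port 0: let the OS pick a free port
        try {
            System.out.println(fetch(server));
        } finally {
            server.stop(0);
        }
    }
}
```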

Consider a simple REST controller that simulates fetching data from two different downstream services, a classic I/O-bound scenario.

```java
import java.util.LinkedHashMap;
import java.util.Map;

import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;
import org.springframework.web.client.RestTemplate;

@RestController
public class DataAggregatorController {

    private final RestTemplate restTemplate = new RestTemplate();

    @GetMapping("/aggregate")
    public Map<String, String> getAggregatedData() {
        // With virtual threads enabled, this method runs on a virtual thread, so the
        // two blocking calls below do not monopolize a platform thread while waiting.
        System.out.println("Handling request on thread: " + Thread.currentThread());

        // Fetch user data from one downstream service (blocking HTTP call, placeholder URL)
        String userData = restTemplate.getForObject("https://api.example.com/users/1", String.class);

        // Fetch order data from another downstream service (blocking HTTP call, placeholder URL)
        String orderData = restTemplate.getForObject("https://api.example.com/orders/1", String.class);

        // The map is local to this request, so no concurrent map is needed. Unlike
        // ConcurrentHashMap, LinkedHashMap also accepts the null that getForObject
        // may return (e.g. for an empty response body).
        Map<String, String> response = new LinkedHashMap<>();
        response.put("user", userData);
        response.put("orders", orderData);
        return response;
    }
}
```

Without virtual threads, each concurrent call to /aggregate occupies one thread from Tomcat’s bounded request pool (200 threads by default) for the entire duration of both network calls. With virtual threads enabled, the carrier threads are released while the virtual threads wait for the network responses. The number of concurrent requests is therefore no longer capped by the pool size and can potentially reach tens of thousands, with the limit shifting to downstream capacity and memory. The same applies to database access: a blocking JDBC call no longer occupies a carrier thread while it waits, provided the driver does not pin the virtual thread.
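The pool-size ceiling can be made concrete with Little’s law. It relates the average number of requests in flight (L), the arrival rate (λ), and the average time each request spends in the system (W). The figures below are illustrative assumptions: each downstream call takes 100 ms, so one /aggregate request takes about 0.2 s, and Tomcat keeps its default cap of 200 request threads. Under those assumptions, the sustainable rate works out as follows.

```latex
L = \lambda W
\qquad\Longrightarrow\qquad
\lambda_{\max} = \frac{L_{\max}}{W}
              = \frac{200\ \text{requests}}{2 \times 0.1\ \text{s}}
              = 1000\ \text{requests/s}
```

With virtual threads, L_max is no longer bounded by the thread pool, so the ceiling moves to whatever becomes scarce next, such as downstream capacity, connection pools, or memory.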

Section 3: Advanced Concepts and Structured Concurrency

Virtual threads are easy to adopt. Mastering them, however, means understanding a few advanced concepts that help you avoid common pitfalls and write more robust, maintainable concurrent code.

The Pitfall of Thread Pinning

The magic of virtual threads relies on the JVM’s ability to unmount them from carrier threads during blocking operations. However, certain situations can “pin” a virtual thread to its carrier, preventing it from being unmounted. This negates the benefits of virtual threads and can lead to carrier thread pool starvation.

The two primary causes of pinning are:

- Executing code inside a synchronized block or method.
- Executing a native method (JNI) or a foreign function.

In Java 21, a virtual thread inside a synchronized block cannot be unmounted, because the JVM records the carrier (platform) thread as the monitor’s owner. If the virtual thread then performs a blocking I/O call inside that block, it stays pinned to its carrier, and the carrier itself is blocked for the duration of the call. JDK 24 removed this limitation for synchronized (JEP 491), but native and foreign calls still pin.

In Java 21, the usual fix is to replace synchronized blocks that run frequently and guard potentially long blocking operations with java.util.concurrent.locks.ReentrantLock.

```java
import java.util.concurrent.locks.ReentrantLock;

public class PinningExample {

    // AVOID: This can cause pinning if a blocking operation happens inside
    private final Object lockObject = new Object();

    public void performTaskWithSynchronization() {
        synchronized (lockObject) {
            // If a blocking I/O call happens here, the carrier thread is pinned.
            System.out.println("Inside synchronized block on " + Thread.currentThread());
            // e.g., someBlockingNetworkCall();
        }
    }

    // PREFER: This is the non-pinning alternative
    private final ReentrantLock reentrantLock = new ReentrantLock();

    public void performTaskWithReentrantLock() {
        reentrantLock.lock();
        try {
            // A blocking I/O call here will NOT pin the carrier thread.
            // The virtual thread will be unmounted.
            System.out.println("Inside ReentrantLock block on " + Thread.currentThread());
            // e.g., someBlockingNetworkCall();
        } finally {
            reentrantLock.unlock();
        }
    }
}
```

The takeaway for developers migrating to virtual threads: audit your code for synchronized blocks that perform I/O, and refactor them to use java.util.concurrent locks.
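Here is a rough way to observe pinning, assuming the default scheduler. The sketch runs several times more virtual threads than there are carriers. Each thread sleeps 200 ms, standing in for blocking I/O, while holding its own lock.

- With synchronized on JDK 21 to 23, each sleeping thread is expected to keep its carrier, so the tasks run in waves and the total time grows.
- With ReentrantLock, the sleeping threads unmount and all tasks overlap.
- On JDK 24 and later (JEP 491), both variants should finish in about the same time.

The class and method names are illustrative, and the timings are indicative only.

```java
import java.time.Duration;
import java.util.concurrent.Executors;
import java.util.concurrent.locks.ReentrantLock;

public class PinningTimingDemo {

    // Several times more tasks than carriers (the default scheduler uses one per processor)
    static final int TASKS = 4 * Runtime.getRuntime().availableProcessors();
    static final Duration SLEEP = Duration.ofMillis(200); // stands in for blocking I/O

    /** Each task sleeps while holding its own, uncontended monitor. */
    static long timeSynchronized() {
        return timeTasks(() -> {
            Object lock = new Object();
            synchronized (lock) {
                sleepQuietly(); // JDK 21 to 23: expected to pin the carrier for the whole sleep
            }
        });
    }

    /** Same work, but the critical section is guarded by a ReentrantLock. */
    static long timeReentrantLock() {
        return timeTasks(() -> {
            ReentrantLock lock = new ReentrantLock();
            lock.lock();
            try {
                sleepQuietly(); // the virtual thread unmounts; the carrier stays free
            } finally {
                lock.unlock();
            }
        });
    }

    /** Runs TASKS copies of the task, one virtual thread each, and returns elapsed milliseconds. */
    static long timeTasks(Runnable task) {
        long start = System.nanoTime();
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < TASKS; i++) {
                executor.submit(task);
            }
        } // close() waits for all tasks
        return Duration.ofNanos(System.nanoTime() - start).toMillis();
    }

    static void sleepQuietly() {
        try {
            Thread.sleep(SLEEP);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        System.out.println("synchronized:  " + timeSynchronized() + " ms");
        System.out.println("ReentrantLock: " + timeReentrantLock() + " ms");
    }
}
```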

Embracing Structured Concurrency

Complementing virtual threads is another Project Loom feature: structured concurrency. In Java 21 it is a preview API (JEP 453) that must be enabled with --enable-preview. It simplifies concurrent programming by treating multiple tasks running in different threads as a single unit of work. This improves reliability and observability, and it makes errors and cancellation easier to handle.

The primary tool for this is StructuredTaskScope. A task that forks several concurrent subtasks within a scope must join them before it can use their results, and every subtask has finished by the time the scope closes. This fork-join style gives concurrent operations a clear structure and lifetime.

```java
import java.time.Instant;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.StructuredTaskScope;
import java.util.concurrent.StructuredTaskScope.Subtask;

// Uses the Java 21 preview API (JEP 453). Compile and run with preview features enabled, e.g.:
//   javac --release 21 --enable-preview StructuredConcurrencyDemo.java
//   java --enable-preview StructuredConcurrencyDemo
public class StructuredConcurrencyDemo {

    // Represents a long-running I/O operation
    String fetchUserData() throws InterruptedException {
        Thread.sleep(1000);
        return "User Details";
    }

    // Represents another long-running I/O operation
    Integer fetchOrderCount() throws InterruptedException {
        Thread.sleep(1500);
        return 42;
    }

    // A record to hold the combined result
    record UserProfile(String details, Integer orderCount) {}

    public UserProfile getUserProfile() throws InterruptedException, ExecutionException {
        // All subtasks within this scope are treated as one unit of work.
        try (var scope = new StructuredTaskScope.ShutdownOnFailure()) {
            // Fork: start two concurrent subtasks, each on its own virtual thread.
            // In Java 21, fork() returns a Subtask rather than a Future.
            Subtask<String> userTask = scope.fork(this::fetchUserData);
            Subtask<Integer> orderTask = scope.fork(this::fetchOrderCount);

            // Join: wait for both subtasks. If one fails, the scope shuts down
            // and the other is cancelled (interrupted).
            scope.join();
            scope.throwIfFailed(); // Throws ExecutionException if any subtask failed

            // Success: combine the results
            return new UserProfile(userTask.get(), orderTask.get());
        }
    }

    public static void main(String[] args) throws InterruptedException, ExecutionException {
        var demo = new StructuredConcurrencyDemo();
        System.out.println("Fetching user profile at " + Instant.now());
        UserProfile profile = demo.getUserProfile();
        System.out.println("Profile received at " + Instant.now() + ": " + profile);
    }
}
```

This example fetches the user data and the order count concurrently. The whole call therefore takes roughly 1.5 seconds (the longer of the two subtasks) instead of 2.5 seconds. With StructuredTaskScope, the parent method’s lifetime is tied to its children. If fetchOrderCount() were to fail, the ShutdownOnFailure policy would cancel fetchUserData() (if it is still running), and the whole operation would fail fast. This is a big step forward for writing robust concurrent logic. Note that the API has continued to change in later preview rounds, so code written against Java 21 may need adjusting on newer JDKs.
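StructuredTaskScope is a preview API in Java 21, so it may help to compare it with a similar fan-out written only with final APIs. The sketch below (class name hypothetical) submits the same two operations to a virtual-thread-per-task executor. It gets the same concurrency but none of the structured guarantees: if one future fails, the other task is not cancelled automatically, and the executor’s close() still waits for it.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class UnstructuredFanOut {

    record UserProfile(String details, Integer orderCount) {}

    static String fetchUserData() throws InterruptedException {
        Thread.sleep(1000); // simulated I/O
        return "User Details";
    }

    static Integer fetchOrderCount() throws InterruptedException {
        Thread.sleep(1500); // simulated I/O
        return 42;
    }

    static UserProfile getUserProfile() throws InterruptedException, ExecutionException {
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            // Both subtasks run concurrently, each on its own virtual thread
            Future<String> user = executor.submit(UnstructuredFanOut::fetchUserData);
            Future<Integer> orders = executor.submit(UnstructuredFanOut::fetchOrderCount);
            // If user.get() throws, nothing cancels the order task:
            // close() at the end of this block still waits for it to finish.
            return new UserProfile(user.get(), orders.get());
        }
    }

    public static void main(String[] args) throws Exception {
        long start = System.nanoTime();
        UserProfile profile = getUserProfile();
        System.out.println(profile + " after ~" + (System.nanoTime() - start) / 1_000_000 + " ms");
    }
}
```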

Section 4: Best Practices and Performance Considerations

To maximize the benefits of virtual threads, it’s important to adopt a new mindset and follow some key best practices.

1. Do Not Pool Virtual Threads

Pooling platform threads is an optimization that avoids the high cost of creating them. Virtual threads are cheap to create, so the recommended approach is to create a new virtual thread for every application task; pooling virtual threads is an anti-pattern. Use Executors.newVirtualThreadPerTaskExecutor(), which does exactly this: it creates a new virtual thread for each submitted task.
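Sometimes the real reason for a pool was to cap concurrency, for example to protect a downstream service that accepts only a limited number of connections. In that case, a commonly recommended alternative is to keep one virtual thread per task and guard the scarce resource with a Semaphore. In the sketch below, the limit of 10 and callLimitedService() are hypothetical. The counters exist only to show that no more than 10 calls ever run at once.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

public class BoundedConcurrencyDemo {

    // Hypothetical cap, e.g. the number of connections a downstream service allows
    static final int MAX_CONCURRENT_CALLS = 10;
    static final Semaphore PERMITS = new Semaphore(MAX_CONCURRENT_CALLS);

    static final AtomicInteger inFlight = new AtomicInteger();
    static final AtomicInteger peak = new AtomicInteger();

    /** Hypothetical stand-in for a call to a capacity-limited service. */
    static void callLimitedService() throws InterruptedException {
        PERMITS.acquire(); // a virtual thread waiting here is parked and unmounted
        int now = inFlight.incrementAndGet();
        try {
            peak.accumulateAndGet(now, Math::max); // remember the highest concurrency seen
            Thread.sleep(20);                      // simulated I/O
        } finally {
            inFlight.decrementAndGet();
            PERMITS.release();
        }
    }

    /** Starts one virtual thread per task and returns the peak number of concurrent calls. */
    static int runTasks(int tasks) {
        peak.set(0);
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    callLimitedService();
                    return null;
                });
            }
        }
        return peak.get();
    }

    public static void main(String[] args) {
        System.out.println("Peak concurrent calls: " + runTasks(200));
    }
}
```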

2. Use for I/O-Bound, Not CPU-Bound, Tasks

Virtual threads provide a throughput advantage for tasks that spend most of their time waiting (I/O-bound), but they do not make code run faster. For computationally intensive (CPU-bound) tasks, a pool of platform threads sized to the number of CPU cores remains the better fit. Running CPU-bound code on virtual threads offers no performance benefit and may even be slightly detrimental due to the extra scheduling overhead.
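The sketch below shows that alternative: a fixed pool of platform threads sized with Runtime.availableProcessors(). It runs a deliberately CPU-heavy job, counting primes by trial division, which is chosen only for illustration. Each chunk keeps one core busy, so adding more threads than cores, virtual or not, would not make it finish sooner. The class and method names are illustrative.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class CpuBoundPoolDemo {

    /** CPU-bound work: counts primes in [from, to) by trial division. */
    static long countPrimes(int from, int to) {
        long count = 0;
        for (int n = Math.max(from, 2); n < to; n++) {
            boolean prime = true;
            for (int d = 2; (long) d * d <= n; d++) {
                if (n % d == 0) {
                    prime = false;
                    break;
                }
            }
            if (prime) count++;
        }
        return count;
    }

    /** Splits [0, limit) into chunks and runs them on a platform-thread pool sized to the CPU count. */
    static long countPrimesInParallel(int limit) throws InterruptedException, ExecutionException {
        int cores = Runtime.getRuntime().availableProcessors();
        int chunk = Math.max(1, limit / cores);
        List<Future<Long>> parts = new ArrayList<>();
        try (ExecutorService pool = Executors.newFixedThreadPool(cores)) {
            for (int from = 0; from < limit; from += chunk) {
                int start = from;
                int end = Math.min(limit, from + chunk);
                parts.add(pool.submit(() -> countPrimes(start, end)));
            }
            long total = 0;
            for (Future<Long> part : parts) {
                total += part.get();
            }
            return total;
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println("Primes below 1,000,000: " + countPrimesInParallel(1_000_000)); // 78498
    }
}
```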

3. Update Your Tooling and Libraries

Ensure your entire toolchain supports Java 21 and virtual threads, from build tools such as Maven and Gradle to monitoring and profiling tools. JDK Flight Recorder (JFR) records virtual-thread events, including pinning, and many modern profilers are virtual-thread-aware, which is crucial for debugging and performance tuning. Similarly, check that your libraries (e.g., database drivers, HTTP clients) have been updated to avoid pinning in a virtual thread environment.

4. Be Mindful of Thread-Local Variables

While ThreadLocal variables work with virtual threads, their use should be carefully considered. Because an application might now have millions of virtual threads, extensive use of thread-locals could lead to significant memory consumption. If you must use them, ensure their lifecycle is managed properly and they are cleared after use.
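One concrete way this bites: a ThreadLocal used as a per-thread cache stops caching anything when every task gets a fresh virtual thread. The sketch below uses the classic SimpleDateFormat-per-thread idiom, with a counter added only for illustration, and runs it on a virtual-thread-per-task executor.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

public class ThreadLocalCacheDemo {

    // Counts how many formatters the ThreadLocal creates (for illustration only)
    static final AtomicInteger formattersCreated = new AtomicInteger();

    // Classic platform-thread idiom: cache one non-thread-safe SimpleDateFormat per thread
    static final ThreadLocal<SimpleDateFormat> FORMATTER = ThreadLocal.withInitial(() -> {
        formattersCreated.incrementAndGet();
        return new SimpleDateFormat("yyyy-MM-dd");
    });

    /** Runs each task on its own virtual thread and returns how many formatters were built. */
    static int runTasks(int tasks) {
        formattersCreated.set(0);
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    // The first get() on a fresh thread builds a brand-new formatter
                    FORMATTER.get().format(new Date());
                });
            }
        }
        return formattersCreated.get();
    }

    public static void main(String[] args) {
        System.out.println("Formatters created: " + runTasks(1_000)); // prints "Formatters created: 1000"
    }
}
```

On a fixed pool of eight platform threads, the same code would build at most eight formatters. On virtual threads it builds one per task and never reuses it. A shared, immutable java.time.format.DateTimeFormatter (for example DateTimeFormatter.ISO_LOCAL_DATE) avoids the per-thread copies altogether.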

Conclusion: The Future is Concurrent and Simple

Java virtual threads are not just another feature; they represent a fundamental evolution of the JVM and a re-imagining of Java concurrency. By abstracting away the limitations of OS threads, Project Loom delivers on its promise of making high-throughput, concurrent programming simple and accessible. The “thread-per-request” model, once a scalability anti-pattern, is now a viable and highly efficient architecture for modern applications.

The rapid adoption by major frameworks like Spring Boot is a testament to the feature’s importance and readiness for production. As developers, our next steps are clear: upgrade to Java 21, explore the integration offered by frameworks like Spring Boot 3.2, and start refactoring I/O-bound code to take advantage of virtual threads. By understanding the core concepts, avoiding pitfalls like pinning, and keeping an eye on structured concurrency as it moves through preview, we can build the next generation of scalable, resilient, and performant Java applications. Virtual threads mark a turning point for the platform, and the future of the Java ecosystem has never looked brighter.