Introduction: The Paradigm Shift in the JVM

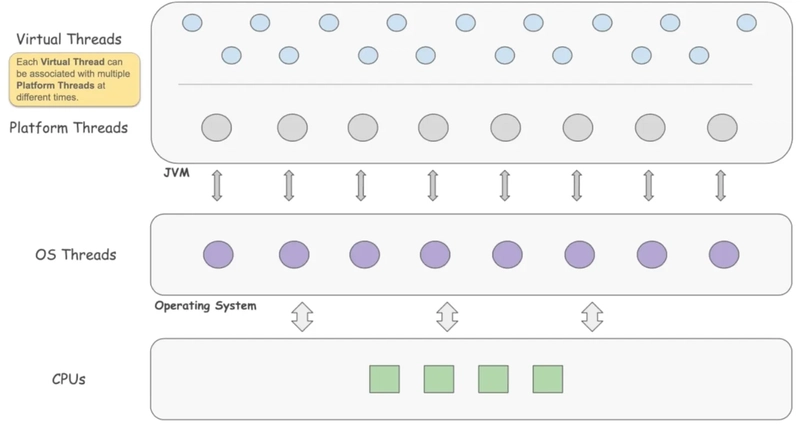

The landscape of Java development has undergone a seismic shift with the introduction and finalization of Virtual Threads. For over two decades, Java developers have navigated the complexities of concurrency using Platform Threads—wrappers around operating system (OS) threads. While robust, this model hit a ceiling regarding scalability. As modern applications, particularly microservices and cloud-native architectures, demanded higher throughput, the “one thread per request” model became a bottleneck due to the heavy resource footprint of OS threads.

The arrival of Project Loom, and with it Virtual Threads (previewed in Java 19 and 20, finalized in Java 21 via JEP 444), has been hailed as the most significant change to the platform since lambdas in Java 8. This innovation allows the Java Virtual Machine (JVM) to manage millions of lightweight threads with the same hardware resources that previously struggled with thousands of platform threads.

This article explores the technical depths of Virtual Threads, their implementation, and how frameworks like Helidon and Spring Boot are adapting. We will look at what this means for performance and why it renders the complex reactive programming model largely unnecessary for many use cases. Whichever JDK distribution you run (Oracle, Adoptium, or Azul Zulu), virtual threads ship as a standard feature from JDK 21 onward, and the consensus is clear: Virtual Threads are the future of high-concurrency Java applications.

Section 1: Core Concepts and the “Thread-per-Request” Renaissance

To understand the magnitude of this change, we must look at the limitations of the traditional thread model. In the classic implementation, a java.lang.Thread maps 1:1 to a kernel thread. When code performs a blocking I/O operation (like a database query or an HTTP call), that kernel thread effectively stalls, holding onto memory (stack space) and OS resources while doing nothing but waiting.

Virtual threads decouple this relationship. A Virtual Thread is an instance of java.lang.Thread that is not tied to a specific OS thread for its entire lifetime. Instead, the JVM schedules virtual threads onto a small pool of “carrier” threads (platform threads; by default a ForkJoinPool with one carrier per available processor). When a virtual thread performs a blocking I/O operation, the JVM unmounts it from the carrier thread, leaving the carrier free to execute other virtual threads. When the I/O completes, the virtual thread is rescheduled, possibly onto a different carrier.

This mechanism revives the “Thread-per-Request” style of programming. Developers can write simple, synchronous, imperative code that is easy to read, debug, and profile, while achieving the scalability previously reserved for asynchronous frameworks like WebFlux or RxJava.
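To make the thread-per-request idea concrete, here is a minimal sketch. It assumes two hypothetical blocking calls, loadProfile and loadRecommendations, simulated with Thread.sleep rather than a real database or HTTP client. Each request is handled by plain sequential code, and each request gets its own virtual thread.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ThreadPerRequestSketch {

    // Hypothetical blocking call standing in for a database query
    static String loadProfile(int userId) throws InterruptedException {
        Thread.sleep(50); // simulated I/O latency
        return "profile-" + userId;
    }

    // Hypothetical blocking call standing in for a downstream HTTP request
    static String loadRecommendations(int userId) throws InterruptedException {
        Thread.sleep(50); // simulated I/O latency
        return "recs-" + userId;
    }

    // Plain, top-to-bottom handler: no callbacks, no reactive operators
    static String handleRequest(int userId) throws InterruptedException {
        String profile = loadProfile(userId);
        String recs = loadRecommendations(userId);
        return profile + "|" + recs + "|virtual=" + Thread.currentThread().isVirtual();
    }

    // Runs each request on its own virtual thread and collects the responses in order
    static List<String> serve(int requestCount) throws InterruptedException, ExecutionException {
        List<Future<String>> futures = new ArrayList<>();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < requestCount; i++) {
                final int userId = i;
                futures.add(executor.submit(() -> handleRequest(userId)));
            }
        } // close() waits for every request to finish
        List<String> responses = new ArrayList<>();
        for (Future<String> f : futures) {
            responses.add(f.get());
        }
        return responses;
    }

    public static void main(String[] args) throws Exception {
        List<String> responses = serve(1_000);
        System.out.println(responses.get(0));
        System.out.println("Handled " + responses.size() + " requests");
    }
}
```

The handler reads like single-user code; the scalability comes entirely from where it runs.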

Creating Virtual Threads

The API has been updated to make creating these threads intuitive. You no longer need complex thread pools for task management because virtual threads are cheap to create and dispose of.

```java
public class VirtualThreadDemo {

    public static void main(String[] args) throws InterruptedException {
        // 1. Creating a virtual thread using the Thread.ofVirtual() builder
        Thread vThread = Thread.ofVirtual()
                .name("my-virtual-thread")
                .start(() -> {
                    System.out.println("Running in: " + Thread.currentThread());
                    try {
                        // This blocking call unmounts the virtual thread;
                        // the underlying carrier thread is freed to run other virtual threads
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                    System.out.println("Woke up in: " + Thread.currentThread());
                });

        // 2. Using the Thread.startVirtualThread convenience method
        Thread vThread2 = Thread.startVirtualThread(() -> {
            System.out.println("Second virtual thread running");
        });

        // Virtual threads are always daemon threads, so main must wait for them explicitly
        vThread.join();
        vThread2.join();
    }
}
```

In the context of Java concurrency, note that virtual threads are always daemon threads; they cannot be made non-daemon. If the main thread finishes, the JVM will exit regardless of whether virtual threads are still running, whereas a non-daemon platform thread keeps the JVM alive until it completes.
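The daemon rule can be checked directly. This minimal sketch uses only java.lang.Thread methods available since JDK 21. A virtual thread reports isDaemon() as true, and calling setDaemon(false) on it throws IllegalArgumentException. Any work that must finish therefore has to be awaited explicitly with join() or an executor's close(). The thread name "probe" is arbitrary.

```java
public class VirtualThreadProperties {

    // Creates a trivial virtual thread and reports its properties
    static String describe() throws InterruptedException {
        Thread vt = Thread.ofVirtual().name("probe").unstarted(() -> { });
        boolean virtual = vt.isVirtual();
        boolean daemon = vt.isDaemon();

        // Virtual threads cannot be made non-daemon; the JDK rejects the attempt
        boolean rejected;
        try {
            vt.setDaemon(false);
            rejected = false;
        } catch (IllegalArgumentException e) {
            rejected = true;
        }

        vt.start();
        vt.join();
        return "virtual=" + virtual + ", daemon=" + daemon + ", setDaemon(false) rejected=" + rejected;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(describe());
        // prints: virtual=true, daemon=true, setDaemon(false) rejected=true
    }
}
```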

Section 2: Implementation Details and Executor Services

While manually creating threads is useful for demos, real-world applications typically use ExecutorService to manage task execution. Conventional wisdom has long emphasized sizing your thread pool carefully to match the number of CPU cores or expected I/O wait times. With virtual threads, this logic changes drastically.

You do not pool virtual threads. Pooling is designed to share expensive resources. Since virtual threads are disposable and lightweight, you simply create a new one for every task. The Executors class has been updated with a new factory method: newVirtualThreadPerTaskExecutor().
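If you still want recognizable thread names for logs or thread dumps, Executors.newThreadPerTaskExecutor(ThreadFactory) accepts a factory built from Thread.ofVirtual(). It still creates one new virtual thread per task, exactly like newVirtualThreadPerTaskExecutor(). Below is a minimal sketch. The "task-" prefix is an arbitrary choice for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.ThreadFactory;

public class NamedVirtualExecutor {

    // Runs `count` tasks, one new virtual thread per task, and returns each task's thread name
    static List<String> runNamedTasks(int count) throws InterruptedException, ExecutionException {
        // Builder-derived factory: threads are named task-0, task-1, ... as they are created
        ThreadFactory factory = Thread.ofVirtual().name("task-", 0).factory();

        List<Callable<String>> tasks = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            tasks.add(() -> Thread.currentThread().getName());
        }

        List<String> names = new ArrayList<>();
        // One new thread per task, like newVirtualThreadPerTaskExecutor(), but with our factory
        try (ExecutorService executor = Executors.newThreadPerTaskExecutor(factory)) {
            for (Future<String> f : executor.invokeAll(tasks)) {
                names.add(f.get());
            }
        }
        return names;
    }

    public static void main(String[] args) throws Exception {
        System.out.println(runNamedTasks(3));
    }
}
```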

Migrating from Fixed Thread Pools

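Here is a practical example of how one might handle many tasks. In a traditional setup, submitting 10,000 tasks that each block for 500 ms to a fixed thread pool of 100 threads creates a bottleneck. Only 100 tasks can wait at a time, so the run needs 10,000 / 100 = 100 rounds of 500 ms, or about 50 seconds. With one virtual thread per task, all 10,000 waits overlap, and the run takes on the order of half a second plus scheduling overhead.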

```java
import java.time.Duration;
import java.time.Instant;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.stream.IntStream;

public class MassiveConcurrencyExample {

    public static void main(String[] args) {
        Instant start = Instant.now();

        // try-with-resources closes the executor (ExecutorService is AutoCloseable since JDK 19);
        // close() waits for all submitted tasks to complete
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            IntStream.range(0, 10_000).forEach(i -> {
                executor.submit(() -> {
                    // Simulate a blocking operation (e.g., DB query or HTTP request)
                    try {
                        Thread.sleep(Duration.ofMillis(500));
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt(); // preserve the interrupt status
                    }
                    return i;
                });
            });
        } // implicit executor.close() blocks here until every task has finished

        long elapsedMs = Duration.between(start, Instant.now()).toMillis();
        System.out.println("Processed 10,000 tasks in: " + elapsedMs + "ms");
    }
}
```

This shift impacts the entire ecosystem. Recent Spring Boot and Jakarta EE releases have been dominated by updates to support this model. For instance, Tomcat and Jetty can now be configured to use virtual threads for request handling. In Spring Boot 3.2 and later, setting spring.threads.virtual.enabled=true does this. The result can be higher throughput for blocking applications without code changes.
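The JDK's built-in com.sun.net.httpserver.HttpServer makes the same idea visible without Tomcat or Jetty. Giving it a virtual-thread-per-task executor runs every exchange on a fresh virtual thread. This is a minimal sketch for illustration; the /hello path and the loopback address are arbitrary choices. It does not show how Spring Boot or Jakarta EE containers are wired internally.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.Executors;

public class VirtualThreadHttpServer {

    // Starts a server on an ephemeral loopback port whose handlers run on virtual threads
    static HttpServer start() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/hello", exchange -> {
            // Ordinary blocking handler code; each exchange runs on its own virtual thread
            byte[] body = ("virtual=" + Thread.currentThread().isVirtual())
                    .getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        // Must be set before start(): every request is handed to this executor
        server.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
        server.start();
        return server;
    }

    // Issues one GET request over HTTP/1.1 and returns the response body
    static String fetch(HttpServer server) throws IOException, InterruptedException {
        int port = server.getAddress().getPort();
        HttpClient client = HttpClient.newBuilder().version(HttpClient.Version.HTTP_1_1).build();
        HttpRequest request = HttpRequest.newBuilder(URI.create("http://127.0.0.1:" + port + "/hello")).build();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    public static void main(String[] args) throws Exception {
        HttpServer server = start();
        try {
            System.out.println(fetch(server)); // prints: virtual=true
        } finally {
            server.stop(0);
        }
    }
}
```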

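Furthermore, this affects data access with JDBC and Hibernate. Previously, reaching very high concurrency for database access meant reactive drivers and libraries such as Hibernate Reactive. Now, standard JDBC drivers running on virtual threads can achieve similar concurrency levels without the cognitive overhead of reactive chains. Two caveats remain. The connection pool still caps how many queries run at once. Drivers that block inside synchronized code can pin carrier threads on JDK 21 to 23.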

Section 3: Advanced Techniques and Structured Concurrency

Virtual threads are only half the story. Project Loom also introduced the concept of Structured Concurrency. It is still a preview API: it incubated in JDK 19 and 20, has been in preview since JDK 21, and its API was redesigned in JDK 25. Structured concurrency treats multiple tasks running in different threads as a single unit of work. This streamlines error handling and cancellation, ensuring that if one sub-task fails, the others can be automatically cancelled, preventing thread leaks.

This is vital for reliability, as it prevents “orphaned” threads from consuming resources indefinitely. It offers a safer alternative to the often error-prone CompletableFuture chaining, where a failure in one stage does not cancel its siblings.
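To see what “error-prone” means in practice, here is a minimal sketch of two fetches written with CompletableFuture on a virtual-thread executor. The immediate failure and the 200 ms delay are contrived for illustration. When one task fails, the combined result fails, but nothing cancels the sibling task, which keeps running to completion. Cancellation would have to be wired up by hand, and CompletableFuture.cancel does not interrupt a running task anyway.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicBoolean;

public class UnstructuredFailureDemo {

    // Returns true if the slow sibling task ran to completion even though the other task failed
    static boolean siblingOutlivesFailure() {
        AtomicBoolean slowTaskFinished = new AtomicBoolean(false);

        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            CompletableFuture<String> user = CompletableFuture.supplyAsync(() -> {
                throw new IllegalStateException("user service down"); // fails immediately
            }, executor);

            CompletableFuture<String> orders = CompletableFuture.supplyAsync(() -> {
                try {
                    Thread.sleep(200); // simulated slow call
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return "interrupted";
                }
                slowTaskFinished.set(true);
                return "Orders: [A, B, C]";
            }, executor);

            try {
                user.thenCombine(orders, (u, o) -> u + ", " + o).join();
            } catch (CompletionException e) {
                System.out.println("Combined result failed: " + e.getCause().getMessage());
            }
        } // the combined future failed, but nobody cancelled `orders`; close() waits for it

        return slowTaskFinished.get();
    }

    public static void main(String[] args) {
        System.out.println("Slow task ran to completion anyway: " + siblingOutlivesFailure());
    }
}
```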

Using StructuredTaskScope

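Below is an example using StructuredTaskScope, the API Project Loom provides for coordinating concurrent sub-tasks. It uses the ShutdownOnFailure policy from the JDK 21 preview API, so it must be compiled and run with --enable-preview on JDK 21 through 24. JDK 25 reworked the API around StructuredTaskScope.open() and Joiner policies.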

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.StructuredTaskScope;
import java.util.concurrent.StructuredTaskScope.Subtask;

// JDK 21 preview API: compile and run with --enable-preview
public class StructuredConcurrencyDemo {

    // Simulating a user data fetch
    static String fetchUser() throws InterruptedException {
        Thread.sleep(100);
        return "User: John Doe";
    }

    // Simulating an order history fetch
    static String fetchOrders() throws InterruptedException {
        Thread.sleep(200);
        return "Orders: [A, B, C]";
    }

    public static void main(String[] args) {
        try (var scope = new StructuredTaskScope.ShutdownOnFailure()) {
            // Each fork runs its subtask in a new virtual thread
            Subtask<String> userTask = scope.fork(StructuredConcurrencyDemo::fetchUser);
            Subtask<String> orderTask = scope.fork(StructuredConcurrencyDemo::fetchOrders);

            // Wait for all subtasks to finish, or for the first failure
            // (which shuts down the scope and cancels the remaining subtasks)
            scope.join();

            // Throw an ExecutionException if any subtask failed
            scope.throwIfFailed();

            // Both subtasks succeeded, so their results are available without blocking
            String user = userTask.get();
            String orders = orderTask.get();
            System.out.println(user + ", " + orders);
        } catch (ExecutionException e) {
            System.err.println("Task failed: " + e.getCause());
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            System.err.println("Interrupted while waiting for subtasks");
        }
    }
}
```

This pattern is significantly cleaner than nested callbacks. It is particularly relevant for AI applications built with Spring AI or LangChain4j, where an application might need to parallelize calls to multiple LLM providers or vector databases and aggregate the results. If one call fails, ShutdownOnFailure cancels the remaining calls and the entire operation fails as a unit. Timeouts need an explicit deadline, for example ShutdownOnFailure.joinUntil(Instant) in place of join().
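Because StructuredTaskScope is still a preview API whose shape has changed between releases, here is a sketch of the timeout case that uses only the stable ExecutorService.invokeAll(tasks, timeout, unit). That method cancels every task not finished when the deadline passes. The providers are hypothetical stand-ins that just sleep; real LLM or vector-store clients would take their place. This gives deadline-based cancellation, but not the fail-fast behavior of ShutdownOnFailure.

```java
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

public class DeadlineFanOut {

    // Hypothetical provider call: sleeps for `latencyMillis`, then answers
    static Callable<String> provider(String name, long latencyMillis) {
        return () -> {
            Thread.sleep(latencyMillis);
            return name + " answered";
        };
    }

    // Queries all providers in parallel; anything not done within the deadline is cancelled
    static List<String> queryWithDeadline(long deadlineMillis) throws InterruptedException {
        List<Callable<String>> calls = List.of(
                provider("provider-a", 20),
                provider("provider-b", 40),
                provider("provider-c", 5_000)); // far slower than the deadline

        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            List<Future<String>> futures = executor.invokeAll(calls, deadlineMillis, TimeUnit.MILLISECONDS);
            return futures.stream().map(DeadlineFanOut::describe).toList();
        }
    }

    // Every future is done when invokeAll returns: either completed or cancelled
    static String describe(Future<String> f) {
        if (f.isCancelled()) {
            return "timed out";
        }
        try {
            return f.get(); // already complete, so this does not block
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return "interrupted";
        } catch (ExecutionException e) {
            return "failed: " + e.getCause();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(queryWithDeadline(1_000));
    }
}
```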

Frameworks: Helidon Níma and Beyond

The industry is reacting fast. Helidon Níma, the web server at the core of Helidon 4, is a prime example of a microservices framework built from the ground up to leverage virtual threads. Unlike Netty-based frameworks that rely on event loops and non-blocking I/O, Helidon Níma uses a synchronous model on top of virtual threads. The aim is performance comparable to asynchronous frameworks with the simplicity of blocking code.

Similarly, Quarkus and Micronaut are integrating these features. Background job processing libraries such as JobRunr are also optimizing their workers to use virtual threads. This can allow thousands of concurrent background jobs with minimal memory overhead.

Section 4: Best Practices, Optimization, and Pitfalls

While virtual threads are powerful, they are not a silver bullet. Community experience, once the hype settles, reveals specific pitfalls to avoid.

1. The Pinning Problem

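The most critical issue on JDK 21 to 23 is “pinning.” This occurs when a virtual thread blocks inside a synchronized block or method, or while executing a native method or foreign function. When pinned, the virtual thread cannot be unmounted from its carrier thread during blocking operations. The carrier stays blocked as well, which negates the scalability benefits for that operation. JDK 24 (JEP 491) removed pinning for synchronized, although native calls still pin. On affected versions, the JFR event jdk.VirtualThreadPinned helps locate the offending code.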

Best Practice: On JDK 21 to 23, use ReentrantLock instead of synchronized blocks if you anticipate blocking I/O inside the critical section.

```java
import java.util.concurrent.locks.ReentrantLock;

public class PinningAvoidance {

    private final ReentrantLock lock = new ReentrantLock();

    public void safeMethod() {
        lock.lock();
        try {
            // Blocking while holding a ReentrantLock does not pin:
            // the virtual thread unmounts, releasing the carrier thread.
            // Thread.sleep stands in for blocking I/O here.
            Thread.sleep(100);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            lock.unlock();
        }
    }

    public synchronized void riskyMethod() {
        // On JDK 21 to 23, blocking here "pins" the virtual thread to its carrier,
        // so the carrier thread is blocked too (JDK 24 removed this limitation)
        try {
            Thread.sleep(100);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

2. Thread Local Pollution

Virtual threads support ThreadLocal, but because you might create millions of them, using heavy objects in thread-locals can lead to massive memory usage (heap exhaustion). Scoped Values address this. They first incubated as JEP 429 in JDK 20 and were finalized in JDK 25 by JEP 506. A scoped value binds an immutable value for the duration of a call, visible to the code it calls and to child threads forked in a StructuredTaskScope. On releases without them, be cautious with ThreadLocal.
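The habit that breaks is using ThreadLocal as a per-thread cache. This minimal sketch counts how often a ThreadLocal.withInitial factory runs. The 16 KB buffer is an arbitrary stand-in for any heavy per-thread object. With a pool of 4 platform threads, the factory runs at most 4 times. With one virtual thread per task, it runs once per task, because every new thread starts with no thread-local values.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

public class ThreadLocalCopies {

    // Counts how many times the "expensive" per-thread object gets created
    static final AtomicInteger created = new AtomicInteger();

    // Stand-in for a heavy cached object, such as a large buffer or a formatter
    static final ThreadLocal<byte[]> BUFFER = ThreadLocal.withInitial(() -> {
        created.incrementAndGet();
        return new byte[16 * 1024];
    });

    // Runs `tasks` tasks that each touch the thread-local; returns how many copies were made
    static int copiesMade(ExecutorService executor, int tasks) {
        created.set(0);
        try (executor) { // close() waits for all tasks to finish
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> BUFFER.get().length);
            }
        }
        return created.get();
    }

    public static void main(String[] args) {
        // Pooled platform threads: each of the 4 threads initializes its copy at most once
        System.out.println("fixed pool of 4: " + copiesMade(Executors.newFixedThreadPool(4), 1_000));
        // One virtual thread per task: every task pays for a fresh copy
        System.out.println("virtual per task: " + copiesMade(Executors.newVirtualThreadPerTaskExecutor(), 1_000));
    }
}
```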

3. Do Not Pool

In the Java 11 era and earlier, pooling was key. From Java 21 on, pooling virtual threads is an anti-pattern. The cost of managing the pool is higher than creating the thread.
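Sometimes the real goal of a pool was to limit concurrency, for example to protect a database or a rate-limited API. The guidance for virtual threads is then to limit access to the resource rather than the number of threads, for instance with a java.util.concurrent.Semaphore. Below is a minimal sketch. The limit of 3 and the simulated 20 ms call are arbitrary.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

public class SemaphoreLimitedAccess {

    // Caps how many tasks may use the scarce resource at once (instead of capping threads)
    private static final int MAX_CONCURRENT_CALLS = 3;
    private static final Semaphore permits = new Semaphore(MAX_CONCURRENT_CALLS);

    private static final AtomicInteger inFlight = new AtomicInteger();
    private static final AtomicInteger peak = new AtomicInteger();

    // Hypothetical call to a rate-limited downstream service, simulated with sleep
    static void callScarceResource() throws InterruptedException {
        permits.acquire(); // a waiting virtual thread simply unmounts here
        try {
            int now = inFlight.incrementAndGet();
            peak.accumulateAndGet(now, Math::max); // record the highest concurrency seen
            Thread.sleep(20);
        } finally {
            inFlight.decrementAndGet();
            permits.release();
        }
    }

    // Runs `tasks` tasks, each on its own virtual thread; returns the peak concurrency observed
    static int run(int tasks) {
        peak.set(0);
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    callScarceResource();
                    return null;
                });
            }
        }
        return peak.get();
    }

    public static void main(String[] args) {
        System.out.println("Peak concurrent calls: " + run(100));
    }
}
```

All 100 tasks get their own virtual thread, yet no more than three are ever inside the protected section.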

4. Tooling and Observability

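Ensure your monitoring and profiling tools are updated to understand virtual threads. Examples include JDK Flight Recorder with JDK Mission Control, or vendor builds such as BellSoft Liberica Mission Control. Traditional thread dumps might be unreadable if you have a million threads. Since JDK 21, jcmd <pid> Thread.dump_to_file -format=json <file> writes a structured JSON dump that includes virtual threads.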

Conclusion: The Future is Virtual

The introduction of Virtual Threads marks a turning point for Java SE. It democratizes high-concurrency programming, making it accessible to developers without requiring a PhD in reactive streams. Code is written in a synchronous style while the JVM multiplexes it over a few carrier threads, so Java bridges the gap between ease of use and high performance.

As we look toward the future, keeping an eye on OpenJDK is essential. Scoped Values became a standard feature in JDK 25. Structured Concurrency is expected to follow once its preview API settles, further solidifying this new model. Whether you are building low-latency trading systems, high-throughput microservices with Helidon Níma, or AI-driven applications using Spring AI, virtual threads provide the infrastructure to scale efficiently.

For developers, the next steps are clear. Upgrade to JDK 21+. Audit your code for synchronized blocks wrapping I/O, a concern on JDK 21 to 23. Start experimenting with Executors.newVirtualThreadPerTaskExecutor(). Blocking-style code is back, and this time it scales.