The release of Java 21 marked a watershed moment in the history of the language, primarily due to the official arrival of Project Loom’s flagship feature: Virtual Threads. For years, the Java community has been buzzing with the promise of high-throughput, lightweight concurrency that could render the reactive programming model obsolete for many use cases. As developers migrate their applications to this new paradigm, however, they are discovering that the shift involves more than just swapping out thread pools. There are nuanced technical challenges lurking beneath the surface, particularly concerning thread pinning and locking mechanisms.

In this guide, we will explore the current state of Java virtual threads and dissect how they interact with traditional synchronization primitives. While virtual threads promise to democratize high-scale concurrency, understanding the “gotchas” (specifically the issue where virtual threads get “pinned” to their carrier threads) is essential for avoiding performance regressions. Whether you are following Spring Boot's adoption of virtual threads or looking for performance tuning tips, this article provides the technical depth required to master modern Java concurrency.

The Revolution of Project Loom and Virtual Threads

To understand the locking dilemma, we must first revisit the core architecture introduced by Project Loom. Traditionally, Java threads were thin wrappers around operating system (OS) threads. This meant a 1:1 relationship: one Java thread equaled one OS thread. While robust, OS threads are expensive resources. They consume significant memory (megabytes for stack space) and require costly context switching by the OS kernel.

Virtual threads decouple the Java thread from the OS thread. The JVM introduces a concept of “carrier threads”—essentially a ForkJoinPool of platform threads that acts as a scheduler. When a virtual thread runs code, it is mounted onto a carrier thread. Crucially, when that virtual thread performs a blocking I/O operation (like fetching data from a database or calling an API), the JVM unmounts it, freeing the carrier thread to execute other virtual threads. This M:N scheduling model allows a standard JVM to handle millions of concurrent tasks with ease.
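To make the mount/unmount behavior concrete, here is a minimal sketch, assuming Java 21 or later. It starts many virtual threads that all block at the same time and counts how many finish. The class name `BlockingFanOut` and the method `runAll` are hypothetical names chosen for this illustration.

```java
import java.time.Duration;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

class BlockingFanOut {

    // Runs `tasks` virtual threads that each block for `sleepMillis`
    // and returns how many of them ran to completion.
    static int runAll(int tasks, long sleepMillis) {
        AtomicInteger completed = new AtomicInteger();
        // close() at the end of try-with-resources waits for all submitted tasks
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    try {
                        // Blocking call: the virtual thread unmounts here,
                        // leaving its carrier free for other virtual threads
                        Thread.sleep(Duration.ofMillis(sleepMillis));
                        completed.incrementAndGet();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
        }
        return completed.get();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        int done = runAll(10_000, 100);
        long elapsedMs = Duration.ofNanos(System.nanoTime() - start).toMillis();
        System.out.println(done + " tasks finished in about " + elapsedMs + " ms");
    }
}
```

Because the sleeping threads do not occupy carriers, the elapsed time should stay far below the 1,000 seconds that 10,000 sequential 100 ms sleeps would take, though the exact figure depends on the machine.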

This architecture is a game-changer for the Java ecosystem, particularly for the I/O-heavy applications typically built on Jakarta EE and Spring. However, the mechanism of unmounting is not magic; it requires the JVM to copy the virtual thread's stack frames off the carrier and into the heap. This brings us to the critical intersection of virtual threads and synchronization.

Basic Virtual Thread Implementation

Before diving into the complexities of locking, let’s look at how simple it is to spin up virtual threads in modern Java. This ease of use is often highlighted in resources for self-taught Java developers as a major lowering of the barrier to entry for concurrency.

    import java.time.Duration;
    import java.util.concurrent.Executors;
    import java.util.stream.IntStream;

    public class VirtualThreadDemo {
        public static void main(String[] args) {
            // Example 1: Creating a virtual thread directly
            Thread.ofVirtual().name("vt-worker").start(() -> {
                System.out.println("Running on: " + Thread.currentThread());
            });

            // Example 2: Using the new ExecutorService for Virtual Threads
            try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
                IntStream.range(0, 5).forEach(i -> {
                    executor.submit(() -> {
                        try {
                            // Simulate blocking I/O
                            Thread.sleep(Duration.ofMillis(100));
                            System.out.println("Task " + i + " completed on " + Thread.currentThread());
                        } catch (InterruptedException e) {
                            Thread.currentThread().interrupt();
                        }
                    });
                });
            } // Executor auto-closes and waits for tasks here
        }
    }

In the code above, the Executors.newVirtualThreadPerTaskExecutor() creates a new virtual thread for every task submitted. Unlike traditional thread pools, you do not pool virtual threads; they are disposable entities intended to be created on demand.
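Since virtual threads are not pooled, a pool size can no longer double as a concurrency limit. One common way to cap access to a scarce resource is a `Semaphore`. The sketch below assumes a hypothetical backend that tolerates only a fixed number of simultaneous calls; `BoundedConcurrency`, `callBackend`, and the limit value are illustrative names and numbers, not part of any library.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

class BoundedConcurrency {

    // Hypothetical limit, e.g. the number of calls a backend tolerates at once
    private final Semaphore permits;
    private final AtomicInteger inFlight = new AtomicInteger();
    private final AtomicInteger maxObserved = new AtomicInteger();

    BoundedConcurrency(int limit) {
        this.permits = new Semaphore(limit);
    }

    // Simulated call to a rate-limited resource
    void callBackend() {
        try {
            permits.acquire(); // waits here if the limit is reached
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return;
        }
        try {
            int now = inFlight.incrementAndGet();
            maxObserved.accumulateAndGet(now, Math::max);
            Thread.sleep(20); // stand-in for blocking I/O
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            inFlight.decrementAndGet();
            permits.release();
        }
    }

    // Starts one virtual thread per task and returns the peak concurrency seen
    static int run(int tasks, int limit) {
        var demo = new BoundedConcurrency(limit);
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(demo::callBackend);
            }
        }
        return demo.maxObserved.get();
    }

    public static void main(String[] args) {
        System.out.println("Peak concurrent backend calls: " + run(200, 8));
    }
}
```

Every task still gets its own virtual thread; only the guarded section is throttled, so the peak reported by `run` never exceeds the configured limit.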

The Hidden Bottleneck: Carrier Thread Pinning

The euphoria surrounding Java 21 often glosses over a specific limitation known as “pinning.” Pinning occurs when a virtual thread cannot be unmounted from its carrier thread during a blocking operation. A pinned virtual thread holds onto the OS thread, preventing that thread from running other tasks. If enough virtual threads get pinned simultaneously, your application effectively degrades back to the limitations of platform threads, potentially causing thread starvation and massive latency spikes.

The Synchronized Block Problem

On JDK 21, the most common cause of pinning is the synchronized keyword. While a virtual thread is inside a synchronized method or block, it is pinned to its carrier thread. That is harmless for short in-memory critical sections, but if the code inside the block performs a blocking operation (like a JDBC call or a network request), the carrier thread is blocked along with it.

This is a significant issue for legacy codebases and libraries, including older versions of Hibernate or JDBC drivers. The JVM cannot unmount the virtual thread because, in this implementation, monitor ownership is tracked against the underlying platform thread rather than the virtual thread, so releasing the carrier would effectively hand the monitor to whatever runs on it next. (JDK 24 reworks monitors to lift this restriction, see JEP 491; the discussion here applies to JDK 21.)

Here is an example of code that causes pinning, which acts as a performance anti-pattern in the world of virtual threads:

import java.util.concurrent.Executors;

public class PinningExample {

// A shared resource requiring synchronization

private int counter = 0;

// BAD PRACTICE for Virtual Threads:

// The 'synchronized' keyword pins the virtual thread to the carrier

public synchronized void heavyWork() {

try {

System.out.println("Start work on: " + Thread.currentThread());

// This blocking operation effectively blocks the OS thread

// because the VT cannot unmount while inside 'synchronized'

Thread.sleep(1000);

counter++;

System.out.println("End work on: " + Thread.currentThread());

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

}

}

public static void main(String[] args) {

var demo = new PinningExample();

try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {

for (int i = 0; i < 10; i++) {

executor.submit(demo::heavyWork);

}

}

}

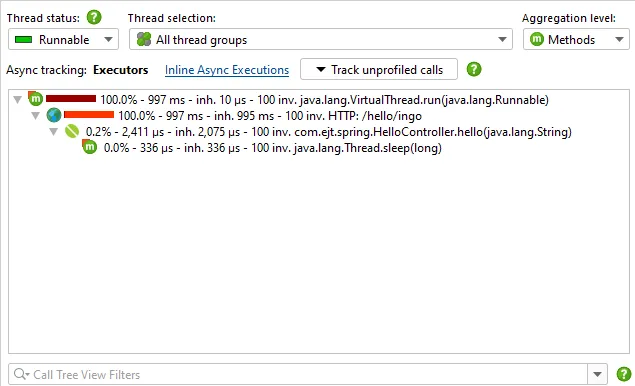

}In the example above, if you were to inspect the execution with a profiler or Java concurrency news tools, you would see that the parallelism is severely limited. The virtual threads are forced to execute sequentially relative to the available carrier threads because they refuse to yield the underlying OS thread while sleeping inside the synchronized block.
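Pinning only hurts when a thread blocks while it holds the monitor, so one option is to shrink the critical section instead of changing the lock type. The sketch below is a hedged variation on the example, assuming the blocking call does not itself need to be guarded; `ShortCriticalSection` and `run` are hypothetical names for this illustration.

```java
import java.util.concurrent.Executors;

class ShortCriticalSection {

    private int counter = 0;

    void heavyWork() {
        try {
            // Blocking work runs outside any monitor, so the virtual thread
            // is free to unmount while it sleeps
            Thread.sleep(100);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return;
        }
        // The monitor now guards only a quick in-memory update
        synchronized (this) {
            counter++;
        }
    }

    // Runs `tasks` virtual threads and returns the final counter value
    static int run(int tasks) {
        var demo = new ShortCriticalSection();
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(demo::heavyWork);
            }
        } // close() waits for every task to finish
        synchronized (demo) {
            return demo.counter;
        }
    }

    public static void main(String[] args) {
        System.out.println("Counter: " + run(10));
    }
}
```

This changes the semantics: the sleeps now overlap, so it only applies when the slow operation is safe to run concurrently. When the blocking call must stay inside the guarded region, a different lock type is the better fit.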

Advanced Techniques: Replacing Monitors with Locks

To get the full benefit of virtual threads, developers must adapt their synchronization strategies. On JDK 21, the fix for the pinning problem is to replace the intrinsic synchronized lock with java.util.concurrent.locks.ReentrantLock wherever the guarded code blocks.

ReentrantLock, like the rest of java.util.concurrent, is implemented in Java and parks waiting threads through LockSupport, which is aware of virtual threads. Consequently, when a virtual thread waits on a ReentrantLock, or blocks while holding one, the scheduler can unmount it and repurpose the carrier thread. This small refactoring can restore the throughput of your application.

Implementing ReentrantLock for Virtual Threads

Let's refactor the previous example to be "Loom-friendly." This pattern is becoming a standard recommendation as frameworks and libraries update their internals.

    import java.util.concurrent.Executors;
    import java.util.concurrent.locks.Lock;
    import java.util.concurrent.locks.ReentrantLock;

    public class NonPinningExample {
        private int counter = 0;

        // Use ReentrantLock instead of synchronized
        private final Lock lock = new ReentrantLock();

        public void heavyWork() {
            lock.lock(); // Acquires the lock
            try {
                System.out.println("Start work on: " + Thread.currentThread());
                // Even though we are sleeping (blocking), the virtual thread
                // can unmount because we are not inside a synchronized block.
                // The carrier thread is free to do other work!
                Thread.sleep(1000);
                counter++;
                System.out.println("End work on: " + Thread.currentThread());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } finally {
                lock.unlock(); // CRITICAL: Always unlock in finally block
            }
        }

        public static void main(String[] args) {
            var demo = new NonPinningExample();
            // The calls still run one at a time because they share a single lock.
            // The gain is that waiting and sleeping virtual threads unmount,
            // so carrier threads stay free for other virtual threads.
            try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
                for (int i = 0; i < 50; i++) {
                    executor.submit(demo::heavyWork);
                }
            }
        }
    }

This shift requires a more disciplined coding style (always using try-finally for unlocking), but it is essential for modern Java development. The idea will feel familiar to Kotlin developers, as Kotlin coroutines have long dealt with similar suspension concepts, though Java achieves this without the suspend keyword.
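One way to make the try-finally discipline harder to forget is to wrap it in a small helper. The following is a minimal sketch, not a standard library API: `LockHelperDemo`, `withLock`, and `runConcurrentIncrements` are hypothetical names introduced for this illustration.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;
import java.util.function.Supplier;

class LockHelperDemo {

    // Hypothetical helper: runs `action` while holding `lock` and always
    // releases the lock, even if the action throws
    static <T> T withLock(Lock lock, Supplier<T> action) {
        lock.lock();
        try {
            return action.get();
        } finally {
            lock.unlock();
        }
    }

    private final ReentrantLock lock = new ReentrantLock();
    private int value = 0;

    // The caller never touches lock()/unlock() directly
    int increment() {
        return withLock(lock, () -> ++value);
    }

    // Increments the counter from `tasks` virtual threads and returns the total
    static int runConcurrentIncrements(int tasks) {
        var demo = new LockHelperDemo();
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(demo::increment);
            }
        } // close() waits for all tasks
        return withLock(demo.lock, () -> demo.value);
    }

    public static void main(String[] args) {
        System.out.println("Total: " + runConcurrentIncrements(1_000));
    }
}
```

The helper keeps the lock and unlock calls in one place, at the cost of a lambda allocation per call; whether that trade-off is worthwhile depends on how hot the code path is.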

Diagnostics, Best Practices, and Ecosystem Updates

Adopting virtual threads is not just about changing code; it's about changing how we observe and debug applications. Visibility into runtime behavior matters more than ever once millions of threads are in play.

Detecting Pinning

You don't have to guess if your threads are pinning. OpenJDK provides built-in mechanisms to detect this. On JDK 21 you can run your application with the system property -Djdk.tracePinnedThreads=full or -Djdk.tracePinnedThreads=short. This prints a stack trace to standard output whenever a virtual thread blocks while pinned; full shows the complete stack, while short limits it to the problematic frames.

Furthermore, Java Flight Recorder (JFR) in Java 21 includes a dedicated event, jdk.VirtualThreadPinned, for virtual thread pinning. This is crucial for performance tuning in production environments.
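The JFR pinning event can also be consumed in-process through the `jdk.jfr.consumer.RecordingStream` API. The sketch below assumes JDK 21 or later and a runtime that includes the `jdk.jfr` module; `PinnedEventWatcher` and `countPinnedEvents` are hypothetical names, and the 10 ms threshold is an arbitrary choice. Whether any events appear depends on the JDK version, since newer releases no longer pin on synchronized.

```java
import java.time.Duration;
import java.util.concurrent.atomic.AtomicInteger;
import jdk.jfr.consumer.RecordingStream;

class PinnedEventWatcher {

    // Runs `workload` while listening for jdk.VirtualThreadPinned events and
    // returns how many events were delivered before the stream stopped.
    static int countPinnedEvents(Runnable workload) {
        AtomicInteger seen = new AtomicInteger();
        try (var stream = new RecordingStream()) {
            stream.enable("jdk.VirtualThreadPinned")
                  .withThreshold(Duration.ofMillis(10)) // arbitrary threshold for the demo
                  .withStackTrace();
            stream.onEvent("jdk.VirtualThreadPinned", event -> {
                seen.incrementAndGet();
                System.out.println("Pinned for " + event.getDuration().toMillis() + " ms");
            });
            stream.startAsync();
            workload.run();
            stream.stop(); // flushes pending events before returning (JDK 20+)
        }
        return seen.get();
    }

    public static void main(String[] args) {
        Object monitor = new Object();
        int events = countPinnedEvents(() -> {
            // Blocks inside a monitor, which pins the carrier on JDK 21
            Thread vt = Thread.ofVirtual().start(() -> {
                synchronized (monitor) {
                    try {
                        Thread.sleep(200);
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                }
            });
            try {
                vt.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        System.out.println("Pinned events observed: " + events);
    }
}
```

In production the same event is usually captured in a regular JFR recording and analyzed offline; the streaming form is mainly convenient for tests and quick experiments.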

Integrating with Modern Libraries

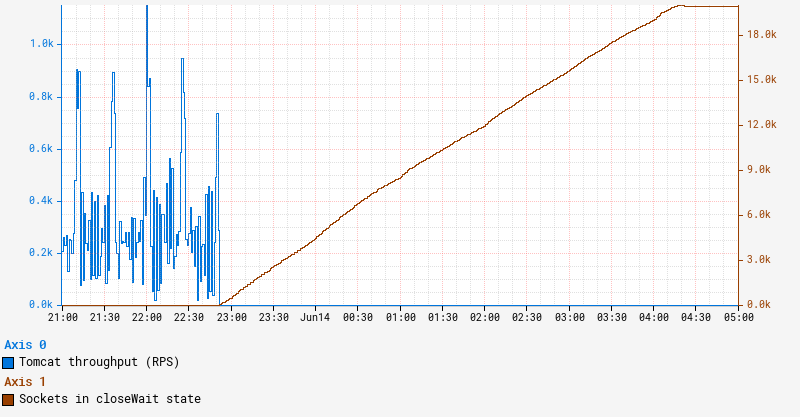

The ecosystem is rapidly adapting. Spring Boot 3.2+ has first-class support for virtual threads, enabled by setting the spring.threads.virtual.enabled property to true. However, developers must be wary of underlying libraries. For instance, if you are using an older JDBC driver or a library that relies heavily on synchronized, enabling virtual threads might degrade performance.

This is where libraries like JobRunr and LangChain4j become relevant. As these modern libraries for background processing and AI integration evolve, they are being optimized to avoid pinning, so that tasks like calling an LLM API (which is mostly waiting) run efficiently on virtual threads.

Structured Concurrency

No discussion of virtual threads is complete without structured concurrency. Still a preview feature in Java 21, structured concurrency aims to simplify multi-threaded code by treating multiple tasks running in different threads as a single unit of work. This pairs perfectly with virtual threads.

    import java.util.concurrent.ExecutionException;
    import java.util.concurrent.StructuredTaskScope;
    import java.util.function.Supplier;

    public class StructuredConcurrencyPreview {
        // Note: requires --enable-preview. This is the API shape previewed in Java 21;
        // later preview rounds have revised StructuredTaskScope.
        public static void main(String[] args) {
            try (var scope = new StructuredTaskScope.ShutdownOnFailure()) {
                // fork() returns a Subtask, which is also a Supplier of the result
                Supplier<String> userTask = scope.fork(() -> fetchUser());
                Supplier<String> orderTask = scope.fork(() -> fetchOrders());

                scope.join();          // Wait for both forked virtual threads
                scope.throwIfFailed(); // Propagate the first failure, if any

                System.out.println("Result: " + userTask.get() + ", " + orderTask.get());
            } catch (InterruptedException | ExecutionException e) {
                e.printStackTrace();
            }
        }

        static String fetchUser() throws InterruptedException {
            Thread.sleep(100);
            return "UserA";
        }

        static String fetchOrders() throws InterruptedException {
            Thread.sleep(200);
            return "Orders[1,2,3]";
        }
    }

This pattern reduces the risk of thread leaks and makes error handling significantly more robust compared to raw CompletableFuture usage.

Conclusion: The Path Forward

The introduction of virtual threads in Java 21 is more than just a feature update; it is a paradigm shift that affects everything from low-code platforms to high-performance trading systems. The "Dude, where's my lock?" moment happens to many developers when they realize that the old reliable synchronized keyword is now a potential bottleneck.

To succeed in this new era, developers must:

- Prefer ReentrantLock over synchronized for code running on virtual threads.
- Utilize -Djdk.tracePinnedThreads during testing to identify blocking calls in synchronized blocks.
- Keep dependencies updated, watching Maven and Gradle release notes for library updates that address pinning.
- Embrace the "one thread per request" model again, moving away from complex reactive chains unless absolutely necessary.

The trend is clear: Java is becoming more expressive and performant. However, as with any powerful tool, understanding the underlying mechanics, specifically how locks interact with the carrier threads, is the key to unlocking the true potential of the JVM. Some might joke that the complexity of modern concurrency is a psyop, but in reality it is simply the evolution of a mature language adapting to modern hardware realities.