The Java ecosystem continues to evolve quickly, delivering features that change how developers build robust, scalable, and intelligent applications. Far from being a legacy platform, Java is actively innovating in concurrency, artificial intelligence integration, and low-level JVM optimization. Recent developments in OpenJDK, the Spring ecosystem, and the broader enterprise landscape point in a clear direction: making Java more efficient, more developer-friendly, and more capable.

This article explores recent Java news, moving beyond headlines to a technical analysis of the features shaping the platform's future. We will dissect Structured Concurrency from Project Loom, explore how Spring AI brings generative AI to Java developers, and look ahead at proposed JVM enhancements such as Lazy Constants. Whether you're working with Java 17, have adopted the Java 21 LTS release, or are watching upcoming releases, this guide provides practical code examples and actionable insights to keep you ahead of the curve.

Section 1: Taming Chaos with Structured Concurrency

For years, managing concurrent tasks in Java has been a double-edged sword. Traditional approaches using raw threads or even `ExecutorService` are powerful but can lead to complex, error-prone code, with common pitfalls including thread leaks, orphaned tasks, and convoluted cancellation logic. Project Loom's answer to these problems is Structured Concurrency.

The Problem with Unstructured Concurrency

In a traditional multithreaded application, when a method spawns several threads to perform subtasks, the parent method has no structural link to its children. If the parent's thread is interrupted or fails, the child threads can keep running in the background, consuming resources and potentially causing unpredictable behavior. Error handling is also complex: developers must collect and react to exceptions from every forked task themselves, often with tools like `CompletableFuture.allOf()`, which waits for every future to finish even after one has failed and does not cancel the others.
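To make the problem concrete, here is a minimal sketch on a plain `ExecutorService` that runs on Java 17. The two "service calls" are hypothetical stand-ins: one fails immediately while the other is still sleeping. Nothing ties the slow task to the failure, so it keeps running until the code explicitly cancels it.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class UnstructuredDemo {

    // Hypothetical stand-in for a slow remote call.
    static String fetchProfile() throws InterruptedException {
        Thread.sleep(2_000);
        return "profile";
    }

    // Hypothetical stand-in for a remote call that fails immediately.
    static String fetchOrders() {
        throw new IllegalStateException("Order service is down!");
    }

    // Returns true if the slow task was still running when the failure was observed.
    static boolean siblingOutlivesFailure() throws InterruptedException {
        ExecutorService executor = Executors.newFixedThreadPool(2);
        try {
            Future<String> profile = executor.submit(UnstructuredDemo::fetchProfile);
            Future<String> orders = executor.submit(UnstructuredDemo::fetchOrders);
            try {
                orders.get(); // throws ExecutionException almost immediately
                return false;
            } catch (ExecutionException e) {
                // Nothing links 'profile' to this failure: it keeps running
                // unless we remember to cancel it ourselves.
                boolean stillRunning = !profile.isDone();
                profile.cancel(true); // manual cleanup that is easy to forget
                return stillRunning;
            }
        } finally {
            executor.shutdownNow();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Sibling still running after failure: " + siblingOutlivesFailure());
    }
}
```

Every fork site needs this kind of bookkeeping, and forgetting the `cancel` call leaves an orphaned task behind.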

The Structured Approach: Concurrency as a Single Unit

Structured Concurrency, now in its sixth preview, applies the principles of structured programming to concurrent execution. It treats a group of related concurrent tasks as a single unit of work whose lifetime is confined to a lexical scope, typically a `try-with-resources` block. The core principles are:

- Clear Lifetime: All tasks forked within a scope must complete before the main flow of execution can exit the scope.

- Coordinated Shutdown: Under the default policy, if any subtask fails, all other sibling tasks within the scope are cancelled automatically.

- Simplified Error Handling: Exceptions from failed tasks are cleanly propagated to the parent scope, making error handling as straightforward as a standard `try-catch` block.

This model is practical because of virtual threads, another Project Loom feature and a standard part of the platform since Java 21 (JEP 444). Virtual threads are cheap enough that an application can create millions of them, so `StructuredTaskScope` can fork each subtask on its own virtual thread (its default behavior) with minimal overhead.
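As a rough illustration of how cheap virtual threads are (Java 21 or later, no preview flags needed), the sketch below submits 10,000 blocking tasks to `Executors.newVirtualThreadPerTaskExecutor()`, one virtual thread per task. The task count and sleep duration are arbitrary values chosen for the example.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

public class VirtualThreadsDemo {

    // Runs taskCount blocking tasks, one virtual thread each, and returns how many completed.
    static int runBlockingTasks(int taskCount) {
        AtomicInteger completed = new AtomicInteger();
        // close() (called by try-with-resources) waits for all submitted tasks to finish.
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < taskCount; i++) {
                executor.submit(() -> {
                    // Sleeping parks the virtual thread instead of blocking an OS thread.
                    Thread.sleep(Duration.ofMillis(100));
                    completed.incrementAndGet();
                    return null;
                });
            }
        }
        return completed.get();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        int done = runBlockingTasks(10_000);
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println(done + " tasks completed in " + elapsedMs + " ms");
    }
}
```

Because the sleeping tasks park rather than hold OS threads, they overlap almost entirely, which is what makes a thread-per-subtask design affordable.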

Practical Example: Concurrent Microservice Calls

Imagine a service that needs to build a user dashboard by fetching user profile data and their recent order history from two separate microservices. Using `StructuredTaskScope`, we can perform these network calls concurrently and reliably.

```java
import java.time.Duration;
import java.util.concurrent.StructuredTaskScope;
import java.util.concurrent.StructuredTaskScope.Subtask;

// A simple record to represent user profile data
record UserProfile(String userId, String name, String email) {}

// A simple record to represent order history
record OrderHistory(String userId, int orderCount) {}

// The combined dashboard data
record UserDashboard(UserProfile profile, OrderHistory history) {}

public class DashboardService {

    // Set to true to simulate a failing order service.
    private static final boolean SIMULATE_ORDER_FAILURE = false;

    // Simulates a network call to a user service
    private UserProfile fetchUserProfile(String userId) throws InterruptedException {
        System.out.println("Fetching profile for user: " + userId);
        Thread.sleep(Duration.ofMillis(150)); // Simulate network latency
        return new UserProfile(userId, "Jane Doe", "jane.doe@example.com");
    }

    // Simulates a network call to an order service
    private OrderHistory fetchOrderHistory(String userId) throws InterruptedException {
        System.out.println("Fetching order history for user: " + userId);
        Thread.sleep(Duration.ofMillis(200)); // Simulate network latency
        if (SIMULATE_ORDER_FAILURE) {
            throw new RuntimeException("Order service is down!");
        }
        return new OrderHistory(userId, 42);
    }

    public UserDashboard getDashboardData(String userId) throws InterruptedException {
        // The try-with-resources block defines the scope of the concurrent operations.
        // open() uses the default policy: wait for all subtasks to succeed, or cancel
        // the rest as soon as one fails. (Previews in JDK 21 to 24 used
        // new StructuredTaskScope.ShutdownOnFailure() plus join() and throwIfFailed().)
        try (var scope = StructuredTaskScope.open()) {
            // 1. Fork: start the concurrent tasks, each on its own virtual thread.
            Subtask<UserProfile> profileTask = scope.fork(() -> fetchUserProfile(userId));
            Subtask<OrderHistory> historyTask = scope.fork(() -> fetchOrderHistory(userId));

            // 2. Join: block until both subtasks succeed or one fails.
            // On failure, the other subtask is cancelled and join() throws
            // StructuredTaskScope.FailedException with the original exception as its cause.
            scope.join();

            // 3. Success: both subtasks completed, so their results are available.
            return new UserDashboard(profileTask.get(), historyTask.get());
        }
    }

    public static void main(String[] args) {
        // Preview API: compile and run with --enable-preview
        // (plus --release or --source matching your JDK version when compiling).
        DashboardService service = new DashboardService();
        try {
            UserDashboard dashboard = service.getDashboardData("user-123");
            System.out.println("Successfully retrieved dashboard: " + dashboard);
        } catch (StructuredTaskScope.FailedException e) {
            System.err.println("Failed to retrieve dashboard data: " + e.getCause().getMessage());
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            System.err.println("Interrupted while retrieving dashboard data");
        }
    }
}
```

In this example, `StructuredTaskScope.open()` applies the default policy: if either `fetchUserProfile` or `fetchOrderHistory` fails, the other subtask is cancelled and `join()` throws a `StructuredTaskScope.FailedException` whose cause is the original exception. The whole concurrent operation therefore succeeds or fails as a single, cohesive unit. Earlier previews (JDK 21 to 24) expressed the same policy with `StructuredTaskScope.ShutdownOnFailure` and a separate `throwIfFailed()` call after `join()`.
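On Java 17, or anywhere the preview API is unavailable, the fail-fast policy has to be written by hand. The sketch below is one way to approximate it with `ExecutorCompletionService`. `invokeAllOrCancel` is a hypothetical helper, not a JDK method, and unlike a real scope it only requests cancellation; it cannot guarantee that cancelled tasks have actually stopped before it returns.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.CompletionService;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorCompletionService;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class FailFastFanOut {

    // Hypothetical helper: runs all tasks concurrently and returns results in submission
    // order. On the first failure, the remaining tasks are cancelled and the failure rethrown.
    static <T> List<T> invokeAllOrCancel(ExecutorService executor, List<Callable<T>> tasks)
            throws InterruptedException, ExecutionException {
        CompletionService<T> completion = new ExecutorCompletionService<>(executor);
        List<Future<T>> futures = new ArrayList<>();
        try {
            for (Callable<T> task : tasks) {
                futures.add(completion.submit(task));
            }
            // Observe tasks in completion order, so a failure is seen as soon as it happens.
            for (int i = 0; i < futures.size(); i++) {
                completion.take().get(); // throws ExecutionException on the first failure
            }
            List<T> results = new ArrayList<>();
            for (Future<T> f : futures) {
                results.add(f.get()); // every task is done here, so get() does not block
            }
            return results;
        } finally {
            // Request cancellation of anything still running; no-op for finished tasks.
            for (Future<T> f : futures) {
                f.cancel(true);
            }
        }
    }

    public static void main(String[] args) throws Exception {
        ExecutorService executor = Executors.newCachedThreadPool();
        try {
            List<Callable<String>> tasks = List.of(() -> "profile", () -> "orders");
            System.out.println(invokeAllOrCancel(executor, tasks)); // prints [profile, orders]
        } finally {
            executor.shutdownNow();
        }
    }
}
```

The amount of bookkeeping here, and how easy it is to get subtly wrong, is exactly what `StructuredTaskScope` moves into the platform.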

Section 2: Java Embraces Intelligence with Spring AI

The rise of Large Language Models (LLMs) has transformed software development, and the Java ecosystem is adapting quickly. In the Spring world, the most significant development in this area is the Spring AI project. Its goal is to apply the core principles of the Spring Framework, such as portable abstractions and dependency injection, to generative AI, making it straightforward for Java developers to build AI-powered features.

Simplifying AI Integration

Without such an abstraction layer, integrating with models from providers like OpenAI, Google, or Hugging Face typically means writing provider-specific client code, handling JSON request and response formats, and managing API keys by hand. Spring AI provides a unified, high-level API that hides these details. This is especially valuable for Spring Boot developers, since it adds auto-configuration and a familiar programming model.

The project provides core abstractions such as `ChatClient`, a fluent API for conversational AI built on the lower-level `ChatModel` interface, and `EmbeddingModel` for turning text into vectors. Developers write code against these stable interfaces and can swap the underlying model provider through dependencies and configuration. This is good news for teams who want to avoid vendor lock-in and experiment with different models.
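The decoupling described above is ordinary dependency inversion. The framework-free sketch below shows the idea; `ChatBackend` and `Translator` are hypothetical names for illustration, not Spring AI types. Application code depends only on the interface, so a test can supply a canned backend while production code plugs in a real provider.

```java
public class ProviderSwapSketch {

    // Hypothetical provider-neutral abstraction (plays the role ChatModel plays in Spring AI).
    interface ChatBackend {
        String complete(String prompt);
    }

    // Application code depends only on the interface, never on a vendor SDK.
    static final class Translator {
        private final ChatBackend backend;

        Translator(ChatBackend backend) {
            this.backend = backend;
        }

        String translate(String text, String language) {
            String prompt = "Translate the following text into " + language
                    + ". Only provide the translation.\nText: " + text;
            return backend.complete(prompt).strip(); // trim stray whitespace from the model
        }
    }

    public static void main(String[] args) {
        // A canned backend for tests; a real one would call a model provider's API.
        ChatBackend fake = prompt -> prompt.contains("French") ? " Bonjour, le monde ! " : "?";
        Translator translator = new Translator(fake);
        System.out.println(translator.translate("Hello, world!", "French")); // prints Bonjour, le monde !
    }
}
```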

Practical Example: A Simple AI-Powered Translation Service

Let’s build a simple Spring Boot REST controller that uses Spring AI to translate a piece of text into a specified language. This demonstrates the power and simplicity of the `ChatClient` interface.

```java
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.chat.prompt.Prompt;
import org.springframework.ai.chat.prompt.PromptTemplate;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

import java.util.Map;

@RestController
public class TranslationController {

    private final ChatClient chatClient;

    // Spring Boot auto-configures a ChatClient.Builder for the model starter on the
    // classpath (here OpenAI); we inject the builder and build the ChatClient from it.
    public TranslationController(ChatClient.Builder chatClientBuilder) {
        // Assumes spring.ai.openai.api-key is set in application.properties
        this.chatClient = chatClientBuilder.build();
    }

    @GetMapping("/translate")
    public Map<String, String> translate(
            @RequestParam(defaultValue = "Hello, world!") String text,
            @RequestParam(defaultValue = "French") String language) {

        String message = """
                Translate the following text into {language}.
                Only provide the translation, with no additional explanation or commentary.
                Text: {text}
                """;

        PromptTemplate promptTemplate = new PromptTemplate(message);
        Prompt prompt = promptTemplate.create(Map.of("text", text, "language", language));

        // The core Spring AI call: send the prompt and read back the text content.
        String translation = chatClient.prompt(prompt)
                .call()
                .content();

        return Map.of("original", text, "language", language, "translation", translation);
    }
}
```

```xml
<!-- pom.xml (excerpt) -->
<dependencyManagement>
    <dependencies>
        <!-- Spring AI BOM manages Spring AI versions; set spring-ai.version to the release you use -->
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-bom</artifactId>
            <version>${spring-ai.version}</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <!-- Spring AI 1.0 starter name; earlier milestones used spring-ai-openai-spring-boot-starter -->
    <dependency>
        <groupId>org.springframework.ai</groupId>
        <artifactId>spring-ai-starter-model-openai</artifactId>
    </dependency>
</dependencies>

<!-- Only needed when using milestone versions of Spring AI -->
<repositories>
    <repository>
        <id>spring-milestones</id>
        <name>Spring Milestones</name>
        <url>https://repo.spring.io/milestone</url>
    </repository>
</repositories>
```

```properties
# application.properties
spring.ai.openai.api-key=YOUR_API_KEY_HERE
```

The controller is completely decoupled from the specifics of the OpenAI API. Switching to another provider, such as Google's Gemini, should only require changing the starter dependency and configuration properties, with no changes to this controller. This level of abstraction is what makes the Spring ecosystem so powerful, and it bodes well for AI in Java. The same trend shows in the growth of other libraries like LangChain4j, which provide similar capabilities for the broader Java community.

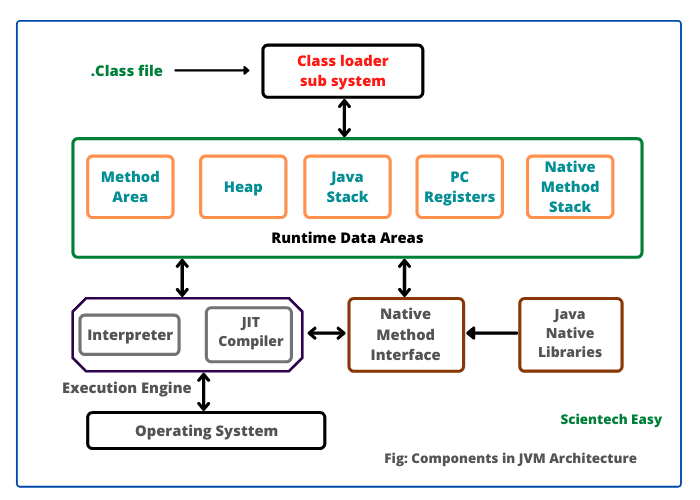

Section 3: Advanced JVM Optimizations on the Horizon

While high-level frameworks and concurrency models are exciting, much of Java's strength comes from continuous improvement of the JVM itself. OpenJDK regularly delivers JEPs (JDK Enhancement Proposals) aimed at improving performance, reducing memory overhead, and making the platform more efficient. One such proposal currently in preview is “Lazy Constants.”

The Cost of Eager Initialization

In Java, `static final` fields are initialized eagerly when their containing class is initialized, which happens on the class's first active use. For simple constants like `public static final int MAX_RETRIES = 3;`, this is perfectly fine. However, when a constant is expensive to compute (requiring file I/O, complex calculations, or network access), eager initialization can slow down application startup and consume memory, even if the constant is never used. Developers currently resort to workarounds like the initialization-on-demand holder idiom to achieve laziness.
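The holder idiom only works for `static` fields. For lazily computed instance state, a common workaround is a thread-safe memoizing `Supplier` built on double-checked locking, sketched below (`Memoized` is a hypothetical class name). It is correct, but every access pays for a `volatile` read, and the JVM cannot treat the cached value as a constant, which is part of what a platform-level mechanism aims to improve.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

public final class Memoized<T> implements Supplier<T> {
    private final Supplier<? extends T> initializer;
    private volatile T value; // volatile: publishes the computed value safely to other threads

    public Memoized(Supplier<? extends T> initializer) {
        this.initializer = initializer;
    }

    // Note: if the initializer returns null, it will be invoked again on the next call.
    @Override
    public T get() {
        T result = value;
        if (result == null) {              // first check, without locking
            synchronized (this) {
                result = value;
                if (result == null) {      // second check, under the lock
                    result = initializer.get();
                    value = result;
                }
            }
        }
        return result;
    }

    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        Memoized<int[]> table = new Memoized<>(() -> {
            calls.incrementAndGet();
            return new int[] {2, 4, 6};
        });
        System.out.println(table.get().length + " " + table.get().length + " calls=" + calls.get()); // prints 3 3 calls=1
    }
}
```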

A Cleaner Future with Lazy Constants

The Lazy Constants JEP aims to provide a first-class, low-overhead mechanism for deferring initialization until first access, after which the JVM can treat the value as a true constant. Rather than new syntax, it proposes a library API, `LazyConstant`, which was first previewed in JDK 25 under the name `StableValue`; as a preview API, its exact shape may still change before it is finalized.

Illustrating the Concept: Before and After

Let’s look at how we currently achieve lazy initialization for a complex object and how a future JVM feature might simplify it.

```java
import java.util.stream.IntStream;

public class ConstantExamples {

    // --- BEFORE: The Initialization-on-Demand Holder Idiom ---
    // This is the current best practice for lazy, thread-safe static initialization.
    private static class BigComputationHolder {
        // Runs only when BigComputationHolder is initialized, i.e. on its first access.
        // The JVM's class-initialization locking makes this thread-safe.
        static final int[] EXPENSIVE_ARRAY = computeExpensiveArray();
    }

    public static int[] getExpensiveArray() {
        return BigComputationHolder.EXPENSIVE_ARRAY;
    }

    private static int[] computeExpensiveArray() {
        System.out.println("Performing expensive computation now...");
        // Simulate a costly operation
        return IntStream.range(0, 10_000_000).filter(n -> n % 2 == 0).toArray();
    }

    // --- AFTER: Lazy Constants (preview API; names may change before it is final) ---
    // The proposal replaces the holder class with a library type rather than a new keyword.
    // Based on the current JEP, the equivalent would look roughly like this
    // (requires a JDK that ships the preview, plus --enable-preview):
    /*
    private static final LazyConstant<int[]> LAZY_EXPENSIVE_ARRAY =
            LazyConstant.of(ConstantExamples::computeExpensiveArray);

    public static int[] getLazyExpensiveArray() {
        return LAZY_EXPENSIVE_ARRAY.get(); // computed at most once, on first get()
    }
    */

    public static void main(String[] args) {
        System.out.println("Main method started. Constant has not been initialized yet.");
        // Nothing is printed from computeExpensiveArray() at this point.
        System.out.println("First access to the constant...");
        int length = getExpensiveArray().length; // The holder class is initialized and the array is computed here.
        System.out.println("Array length: " + length);
        System.out.println("Second access to the constant...");
        int anotherLength = getExpensiveArray().length; // The computation is NOT run again.
        System.out.println("Array length: " + anotherLength);
    }
}
```

The “before” example is effective but verbose: every lazily initialized constant needs its own nested holder class. The “after” version makes lazy initialization a platform feature, which would lead to cleaner code and faster startup for applications with many expensive constants, while still letting the JVM optimize the value like an ordinary `static final` field once it is set. This work sits alongside deeper efforts such as Project Valhalla (value types) and Project Panama (better native interoperability) to keep the JVM a world-class runtime.

Section 4: Best Practices and the Broader Ecosystem

These exciting developments don’t exist in a vacuum. They are part of a rich and interconnected Java ecosystem news landscape. To leverage these new features effectively, developers must adopt modern best practices and stay current with their tooling.

Adopting Preview Features Safely

Features like Structured Concurrency are introduced as “preview features.” This is a crucial part of the modern Java release cadence, allowing the community to provide feedback before a feature is finalized.

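- Enable Explicitly: To use a preview feature, you must pass `--enable-preview` during both compilation and runtime. At compile time it must be combined with `--release` (or `--source`) set to the running JDK's version, for example `javac --release 25 --enable-preview`.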

- Not for Production: Preview APIs are subject to change or removal in future releases. They are intended for experimentation and feedback, not for production deployments.

- Stay Updated: Follow the official OpenJDK mailing lists and Java news outlets to track the progress of JEPs as they move from preview to standard features.

The Importance of Tooling and Enterprise Frameworks

The entire toolchain, from build tools to testing libraries, evolves alongside the language. Keep an eye on Maven and Gradle releases to make sure your plugin versions support new language features and JDKs. Likewise, testing frameworks such as JUnit and Mockito are updated to work with new JDKs and language constructs, which is vital for maintaining code quality.

In the enterprise space, this innovation is adopted quickly. Jakarta EE runtimes such as Open Liberty and frameworks such as Quarkus have moved to support virtual threads, offering their users scalability gains for I/O-bound workloads with little code change. The choice of JDK distribution (Oracle Java, Adoptium Temurin, Azul Zulu, or Amazon Corretto) also plays a role, though the core OpenJDK innovations are available across all of them.

Conclusion: The Future of Java is Bright and Dynamic

The latest wave of Java news paints a clear picture of a platform that is not only mature and stable but also innovating aggressively. Structured Concurrency is set to fundamentally improve how we write and reason about concurrent code, eliminating entire classes of bugs such as thread leaks and orphaned tasks. The rapid development of Spring AI and related libraries is positioning Java as a first-class citizen in the age of artificial intelligence, empowering millions of developers to build smarter applications.

Meanwhile, deep-level JVM enhancements continue to push the boundaries of performance and efficiency, keeping Java a strong choice for demanding, large-scale systems. For developers, the path forward is clear: embrace the new features, experiment with the previews, and stay engaged with the ecosystem. The journey of Java is far from over; in many ways, it's just getting started.