The artificial intelligence revolution is in full swing, and Large Language Models (LLMs) are at its epicenter. While much of the initial buzz has centered on Python-based ecosystems, the robust and enterprise-ready world of Java is rapidly catching up. Recent developments in this space show that Java’s performance, scalability, and mature ecosystem make it a strong platform for building sophisticated AI applications. For developers looking to integrate generative AI without leaving their favorite JVM-based environment, frameworks like LangChain4j are game-changers.

This article provides a comprehensive, hands-on guide for Java developers to harness the power of AI. We will explore how to build an intelligent, conversational web application by combining LangChain4j, the high-performance Quarkus framework, and the flexibility of locally-run LLMs via Ollama. We’ll go beyond simple “hello world” examples to implement real-time web search capabilities and sophisticated prompt engineering, demonstrating how to create an AI agent with a distinct personality. Along the way we will touch on current trends in the Java ecosystem, from modern framework choices to features introduced in Java 21.

Understanding the Core Components

Before diving into the code, it’s essential to understand the key players in our modern Java AI stack. Each component serves a specific purpose, and their synergy is what allows us to build powerful, responsive, and intelligent applications.

What is LangChain4j?

LangChain4j is an open-source Java library designed to simplify the development of applications powered by LLMs. It’s the Java-native answer to the popular Python LangChain library, providing a rich set of abstractions and tools to orchestrate complex interactions with language models. Instead of making raw API calls, LangChain4j offers high-level constructs like:

- ChatLanguageModel: An interface for interacting with chat-based LLMs from providers like OpenAI, Hugging Face, or local models via Ollama.

- Tools: Mechanisms that allow an LLM to interact with the outside world, such as calling an API, querying a database, or performing a web search.

- Agents: Sophisticated controllers that use an LLM to decide which tools to use and in what order to accomplish a given task.

- Retrieval-Augmented Generation (RAG): Techniques for providing LLMs with external knowledge from your own documents or databases to ground their responses in factual, up-to-date information.

The emergence of libraries like LangChain4j and the related Spring AI project signals a major shift, making AI development a first-class citizen in the Java world.

Why Quarkus for AI Applications?

Quarkus is a full-stack, Kubernetes-native Java framework designed for GraalVM and OpenJDK HotSpot. Its fast startup and low memory footprint (the project’s “supersonic, subatomic” tagline) make it a good fit for AI services, since both matter for scalable, cost-effective cloud deployments. AI applications are often I/O-bound (for example, waiting for an LLM response), and Quarkus’s reactive architecture handles that kind of concurrency well. Spring Boot remains dominant in the enterprise, but Quarkus is a compelling alternative, especially for greenfield microservices and serverless functions, and it supports several core Jakarta EE specifications such as CDI and Jakarta REST.

Running LLMs Locally with Ollama

Ollama is a powerful tool that dramatically simplifies the process of running open-source LLMs like Llama 3, Mistral, or Phi-3 on your local machine. By using Ollama, you gain several advantages:

- Privacy and Security: Your data never leaves your machine.

- Cost-Effectiveness: You avoid per-token API costs from commercial providers.

- Customization and Control: You have full control over the model and its configuration.

LangChain4j has excellent, first-party support for Ollama, making the integration seamless. First, ensure Ollama is installed and running with a model pulled (e.g., ollama run llama3).

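To get started, you’ll need to configure your project’s dependencies. Whether you build with Maven or Gradle, the setup is straightforward. Here is an example pom.xml for a Quarkus project. Note that the Quarkiverse LangChain4j extension is versioned independently of the core LangChain4j library, so check the version property against the extension’s published releases before using it: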

<?xml version="1.0"?>

<project xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd"

xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">

<modelVersion>4.0.0</modelVersion>

<groupId>org.acme</groupId>

<artifactId>langchain4j-quarkus-app</artifactId>

<version>1.0.0-SNAPSHOT</version>

<properties>

<quarkus.platform.version>3.11.2</quarkus.platform.version>

<langchain4j.version>0.31.0</langchain4j.version>

</properties>

<dependencies>

<!-- Quarkus Dependencies -->

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-resteasy-reactive-jackson</artifactId>

</dependency>

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-arc</artifactId>

</dependency>

<!-- LangChain4j Dependencies -->

<dependency>

<groupId>io.quarkiverse.langchain4j</groupId>

<artifactId>quarkus-langchain4j-ollama</artifactId>

<version>${langchain4j.version}</version>

</dependency>

<dependency>

<groupId>io.quarkiverse.langchain4j</groupId>

<artifactId>quarkus-langchain4j-web-search-engine-tavily</artifactId>

<version>${langchain4j.version}</version>

</dependency>

</dependencies>

<!-- dependencyManagement (Quarkus BOM import) and build configuration omitted for brevity -->

</project>

Implementing a Basic AI Chat Service

With our project set up, let’s build the core of our application: a service that can communicate with the local LLM. We will expose this service through a simple REST API endpoint using Quarkus’s RESTEasy Reactive.

Setting Up the Chat Model and Service

Quarkus’s dependency injection and configuration management make setting up the LangChain4j model a breeze. We just need to add a few lines to our application.properties file.

src/main/resources/application.properties:

quarkus.langchain4j.ollama.base-url=http://localhost:11434

quarkus.langchain4j.ollama.chat-model.model-id=llama3

quarkus.langchain4j.ollama.timeout=60s

Now we can create an AI service using LangChain4j’s declarative AI Services feature, which turns a plain Java interface into a fully implemented service. You describe the behavior with annotations and the framework supplies the implementation, which keeps the API clean and the boilerplate minimal.

package org.acme;

import dev.langchain4j.service.SystemMessage;

import dev.langchain4j.service.UserMessage;

import io.quarkiverse.langchain4j.RegisterAiService;

@RegisterAiService

public interface BasicAiService {

@SystemMessage("You are a helpful and polite assistant. You must answer in one sentence.")

String chat(@UserMessage String message);

}

The @RegisterAiService annotation tells Quarkus to create a bean for this interface, automatically wiring it to the configured Ollama model. The @SystemMessage sets the context for the AI, and @UserMessage marks the parameter that will contain the user’s input.
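To make the idea of a declarative service less magical, here is a minimal sketch in plain Java of how an annotated interface can be turned into a working object with a dynamic proxy. It is an illustration only: SystemPromptSketch, AssistantSketch, and DeclarativeServiceSketch are hypothetical names, the model is a stand-in function rather than a real LLM, and the Quarkus extension’s actual mechanism may differ (for example, by generating the implementation at build time).

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Proxy;
import java.util.function.BinaryOperator;

// Hypothetical stand-in for a system-prompt annotation; NOT LangChain4j's @SystemMessage.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface SystemPromptSketch {
    String value();
}

// Hypothetical service interface, mirroring the shape of BasicAiService.
interface AssistantSketch {
    @SystemPromptSketch("You are a helpful and polite assistant.")
    String chat(String message);
}

final class DeclarativeServiceSketch {

    // `model` stands in for the LLM call: (systemPrompt, userMessage) -> reply.
    static <T> T create(Class<T> serviceInterface, BinaryOperator<String> model) {
        Object proxy = Proxy.newProxyInstance(
                serviceInterface.getClassLoader(),
                new Class<?>[] {serviceInterface},
                (instance, method, args) -> {
                    // This sketch only handles single-String service methods.
                    if (method.getDeclaringClass() == Object.class) {
                        throw new UnsupportedOperationException(method.getName());
                    }
                    SystemPromptSketch prompt = method.getAnnotation(SystemPromptSketch.class);
                    String system = prompt == null ? "" : prompt.value();
                    return model.apply(system, (String) args[0]);
                });
        return serviceInterface.cast(proxy);
    }

    public static void main(String[] args) {
        // A fake model that just echoes what it received.
        AssistantSketch assistant = create(AssistantSketch.class,
                (system, user) -> "[" + system + "] " + user);
        System.out.println(assistant.chat("Hello"));
    }
}
```

The point of the sketch is that the interface carries all the information needed (prompt text, parameter, return type), so no hand-written implementation class is required.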

Creating a REST Endpoint

Next, we’ll expose this service via a JAX-RS resource. We can inject our BasicAiService directly into our resource class and use it to handle incoming requests.

package org.acme;

import jakarta.inject.Inject;

import jakarta.ws.rs.GET;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Produces;

import jakarta.ws.rs.QueryParam;

import jakarta.ws.rs.core.MediaType;

@Path("/chat")

public class ChatResource {

@Inject

BasicAiService aiService;

@GET

@Produces(MediaType.TEXT_PLAIN)

public String getChatResponse(@QueryParam("message") String message) {

if (message == null || message.isBlank()) {

return "Please provide a message.";

}

return aiService.chat(message);

}

}

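Now, if you run your Quarkus application and open http://localhost:8080/chat?message=What%20is%20the%20capital%20of%20France%3F, the service will contact your local Llama 3 model and return a polite, one-sentence answer. The message must be URL-encoded; browsers usually do this for you when you type the question with spaces.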
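If you call the endpoint from code rather than a browser, the message has to be encoded explicitly. The following is a small sketch using only the JDK; ChatUriSketch and chatUri are hypothetical names introduced for illustration, and the base URL assumes the default Quarkus port used above.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of the article's project.
final class ChatUriSketch {

    // Builds the /chat URI with the message encoded as a query parameter.
    static URI chatUri(String baseUrl, String message) {
        // URLEncoder produces form encoding ("+" for spaces); switch to %20 for clarity.
        String encoded = URLEncoder.encode(message, StandardCharsets.UTF_8).replace("+", "%20");
        return URI.create(baseUrl + "/chat?message=" + encoded);
    }

    public static void main(String[] args) {
        System.out.println(chatUri("http://localhost:8080", "What is the capital of France?"));
    }
}
```

The resulting URI can be passed to any HTTP client, for example java.net.http.HttpClient.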

Enhancing the AI with Tools and Advanced Prompting

A simple chatbot is a good start, but the true power of frameworks like LangChain4j lies in their ability to create agents that can reason and use tools. Let’s give our AI a personality and the ability to browse the web for real-time information.

Integrating Real-Time Web Search as a Tool

LLMs are trained on static datasets, so their knowledge is not current. To answer questions about recent events, they need access to the internet. We can provide this access by defining a “Tool”. A tool is a simple Java method annotated with @Tool that the AI agent can choose to call when it deems it necessary.

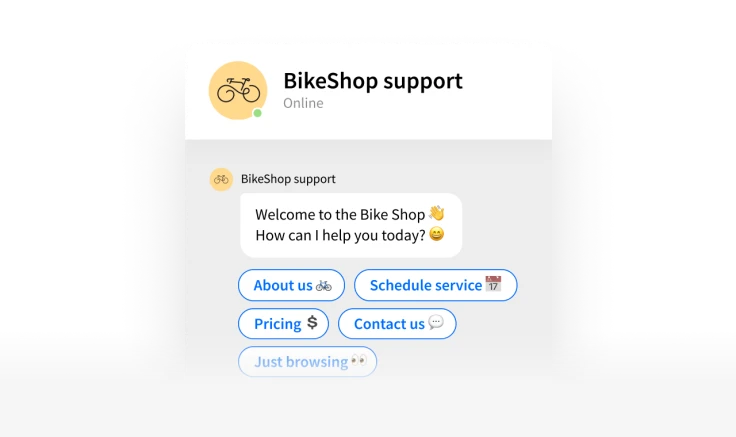

![AI chatbot user interface - 7 Best Chatbot UI Design Examples for Website [+ Templates]](https://java-news.net/wp-content/uploads/2025/12/inline_98144d03.png)

First, let’s configure our web search provider (we’re using Tavily in this example) in application.properties. You’ll need to get a free API key from their website.

src/main/resources/application.properties:

# ... (ollama properties from before)

quarkus.langchain4j.tavily.api-key=YOUR_TAVILY_API_KEY

Now, we can create a class to house our tools. With LangChain4j’s Quarkus integration, a CDI bean containing @Tool-annotated methods can be made available to an AI service by listing it in that service’s tools attribute, as we will do shortly.

package org.acme;

import dev.langchain4j.agent.tool.Tool;

import dev.langchain4j.web.search.WebSearchEngine;

import jakarta.enterprise.context.ApplicationScoped;

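import jakarta.inject.Inject;

import java.util.stream.Collectors;

@ApplicationScoped

public class WebSearchTool {

@Inject

WebSearchEngine webSearchEngine;

@Tool("Performs a web search to find up-to-date information on a given topic.")

public String searchWeb(String query) {

// Join the title and snippet of each result into plain text for the model.

return webSearchEngine.search(query).results().stream()

.map(result -> result.title() + ": " + result.snippet())

.collect(Collectors.joining("\n"));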

}

}

The description in the @Tool annotation is crucial. The LLM uses this description to understand what the tool does and when to use it.
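To see why the description matters, it helps to picture how a framework could collect tool metadata before sending it to the model. The sketch below does this with plain reflection. ToolSketch, DemoTools, and ToolCatalogSketch are hypothetical names used only for illustration; this is not how LangChain4j is implemented, just the general idea that the description text is the only thing the model sees about a tool.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical stand-in for a tool annotation; NOT LangChain4j's @Tool.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface ToolSketch {
    String value();
}

// Hypothetical tool holder used only for this illustration.
class DemoTools {

    @ToolSketch("Performs a web search to find up-to-date information on a given topic.")
    public String searchWeb(String query) {
        return "results for " + query;
    }

    // Not annotated, so it never appears in the catalog offered to the model.
    public String internalHelper() {
        return "hidden";
    }
}

final class ToolCatalogSketch {

    // Collects method name -> description for every annotated method.
    static Map<String, String> describe(Class<?> toolClass) {
        Map<String, String> catalog = new TreeMap<>();
        for (Method method : toolClass.getDeclaredMethods()) {
            ToolSketch tool = method.getAnnotation(ToolSketch.class);
            if (tool != null) {
                catalog.put(method.getName(), tool.value());
            }
        }
        return catalog;
    }

    public static void main(String[] args) {
        describe(DemoTools.class).forEach((name, description) ->
                System.out.println(name + ": " + description));
    }
}
```

A vague description such as “does stuff” would give the model nothing to reason about, which is why a precise sentence pays off.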

Crafting a Persona with Prompt Engineering

Now, let’s create a more advanced AI service that combines our web search tool with a unique personality. We’ll use a more detailed system prompt to instruct the AI to be sarcastic and snarky but ultimately helpful. This is where prompt engineering becomes both an art and a science.

package org.acme;

import dev.langchain4j.service.SystemMessage;

import io.quarkiverse.langchain4j.RegisterAiService;

@RegisterAiService(tools = WebSearchTool.class)

public interface SarcasticAiService {

@SystemMessage("""

You are a sarcastic but brilliant assistant named 'Snarky'.

You answer questions with a heavy dose of wit and sarcasm, but you always provide the correct answer in the end.

When you need to find information about recent events or topics, you MUST use the provided web search tool.

Never invent information. If you don't know, say so, sarcastically.

""")

String chat(String message);

}

Notice two key changes: the @SystemMessage is far more detailed, defining the persona and behavior. Crucially, the @RegisterAiService(tools = WebSearchTool.class) annotation tells LangChain4j that this agent has access to the methods in our WebSearchTool class. Now, if you ask this service “Who won the last Super Bowl?”, the model can recognize that its training data may be outdated and request the web search tool. LangChain4j runs the tool, passes the results back to the model, and the model then formulates a sarcastic reply based on them.
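The request, execute, and answer cycle described above can be sketched in a few lines of plain Java. This is a simplified illustration with a stubbed model, not LangChain4j’s implementation: AgentLoopSketch, ModelReply, and Model are hypothetical names, and a real framework exchanges structured messages rather than a single conversation string.

```java
import java.util.Map;
import java.util.function.Function;

final class AgentLoopSketch {

    // What the (stubbed) model returns: either a final answer or a request to run a tool.
    record ModelReply(String toolName, String toolInput, String finalAnswer) {
        boolean wantsTool() {
            return toolName != null;
        }
    }

    // Hypothetical stand-in for an LLM call; receives the conversation so far.
    interface Model {
        ModelReply reply(String conversation);
    }

    static String run(Model model, Map<String, Function<String, String>> tools, String question) {
        StringBuilder conversation = new StringBuilder("user: " + question + "\n");
        for (int step = 0; step < 5; step++) { // cap the number of rounds to avoid endless loops
            ModelReply reply = model.reply(conversation.toString());
            if (!reply.wantsTool()) {
                return reply.finalAnswer();
            }
            Function<String, String> tool = tools.get(reply.toolName());
            String result = tool == null ? "unknown tool" : tool.apply(reply.toolInput());
            // Feed the tool result back so the next model call can use it.
            conversation.append("tool[").append(reply.toolName()).append("]: ")
                    .append(result).append("\n");
        }
        return "Gave up after too many tool calls.";
    }

    public static void main(String[] args) {
        // Stub: asks for a search first, then answers once a tool result is present.
        Model stub = conversation -> conversation.contains("tool[searchWeb]")
                ? new ModelReply(null, null, "Oh, you could not look that up yourself? Fine: Team A.")
                : new ModelReply("searchWeb", "last Super Bowl winner", null);
        Map<String, Function<String, String>> tools = Map.of("searchWeb", query -> "Team A won");
        System.out.println(run(stub, tools, "Who won the last Super Bowl?"));
    }
}
```

The iteration cap is a design choice worth keeping in any real agent: a model that keeps requesting tools should not be able to loop forever.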

Optimization and Production-Ready Considerations

Building a functional prototype is one thing; deploying a robust, scalable, and secure application is another. As you move towards production, performance and security concerns become highly relevant.

Leveraging Modern Java Features for Concurrency

AI applications are typically I/O-bound: they spend most of their time waiting for a response from the LLM or from a tool such as a web search API. This is where virtual threads, developed under Project Loom and finalized in Java 21, shine. They let you write simple, synchronous-looking code that scales to thousands of concurrent requests with minimal overhead. In a Quarkus application running on Java 21 or later, you can use them by annotating your REST endpoint methods with @RunOnVirtualThread, which is a good fit for AI workloads.
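Outside of Quarkus, the same idea can be tried with nothing but the JDK. The sketch below assumes Java 21 or later and uses a sleep to simulate a slow LLM call; VirtualThreadSketch, slowCall, and runAll are hypothetical names for illustration.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class VirtualThreadSketch {

    // Simulates a slow, blocking call such as waiting for an LLM response.
    static String slowCall(int id) {
        try {
            Thread.sleep(Duration.ofMillis(50));
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return "interrupted-" + id;
        }
        return "reply-" + id;
    }

    // Runs `requests` blocking calls, one virtual thread each, and counts successful replies.
    static int runAll(int requests) {
        List<Future<String>> futures = new ArrayList<>();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < requests; i++) {
                final int id = i;
                futures.add(executor.submit(() -> slowCall(id)));
            }
        } // close() waits for all submitted tasks to finish
        int completed = 0;
        for (Future<String> future : futures) {
            if (future.resultNow().startsWith("reply-")) {
                completed++;
            }
        }
        return completed;
    }

    public static void main(String[] args) {
        System.out.println("Completed calls: " + runAll(1_000));
    }
}
```

Each task blocks in ordinary sequential style, yet a thousand of them can be in flight at once because blocked virtual threads do not hold on to platform threads.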

Best Practices and Common Pitfalls

- Error Handling: What happens if the Ollama server is down or the web search API times out? Your code must be resilient. Implement proper try-catch blocks and consider fallback strategies. Sometimes, applying the Null Object pattern can be a clean way to handle missing results from a tool.

- Security: Be wary of prompt injection. A malicious user could try to craft an input that hijacks your AI’s system prompt. Always sanitize and validate user inputs.

- Testing: While testing the LLM’s output deterministically is difficult, you can and should test the surrounding logic. Use standard libraries such as JUnit and Mockito to write unit tests for your tools, services, and REST endpoints, ensuring the non-AI parts of your application are robust.

- Observability: Log the interactions with the LLM, including the prompts and responses. This is invaluable for debugging and understanding how your agent behaves in the real world.
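As one possible shape for the error-handling advice above, here is a minimal sketch of a timeout-plus-fallback wrapper using only the JDK. FallbackSketch and callWithFallback are hypothetical names, and the supplier stands in for a call to the LLM or a tool; in a Quarkus application you might reach for a fault-tolerance library instead.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

final class FallbackSketch {

    // Runs `call` asynchronously and returns `fallback` if it throws or exceeds the timeout.
    // Note: on timeout the underlying task is not cancelled; it simply stops being awaited.
    static String callWithFallback(Supplier<String> call, long timeoutMillis, String fallback) {
        return CompletableFuture.supplyAsync(call)
                .completeOnTimeout(fallback, timeoutMillis, TimeUnit.MILLISECONDS)
                .exceptionally(error -> fallback)
                .join();
    }

    public static void main(String[] args) {
        String reply = callWithFallback(
                () -> { throw new IllegalStateException("model server is down"); },
                1_000,
                "Sorry, I cannot answer right now.");
        System.out.println(reply);
    }
}
```

Because the wrapper takes a plain Supplier, it is also easy to unit-test with stubs, without a running model.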

While our example focuses on a web service, these principles apply to various application types. The same LangChain4j logic could power a command-line tool, a background processing job managed by a library such as JobRunr, or even a desktop application built with JavaFX.

Conclusion

The Java ecosystem is no longer on the sidelines of the AI revolution; it is an active and powerful participant. Frameworks like LangChain4j, combined with the performance of modern runtimes like Quarkus and the accessibility of local LLMs through Ollama, have democratized AI development for millions of Java programmers. We’ve seen how to move from a basic chatbot to a sophisticated, tool-using agent with a distinct personality, all using familiar Java idioms and tools.

The journey doesn’t end here. The field of generative AI is evolving at a breakneck pace, and keeping up with the latest LangChain4j and OpenJDK releases is essential. As you continue your exploration, consider investigating more advanced topics like Retrieval-Augmented Generation (RAG) to connect your AI to private data, function calling for more complex tool interactions, and the ongoing JVM enhancements from projects like Panama and Valhalla. The future of intelligent applications is being built today, and Java has firmly cemented its place as a premier platform for this exciting new era.